HFC Network

The Cable Security Experience

We’ve all adjusted the ways we work and play and socialize in response to COVID. This has increased awareness that our broadband networks are critical – and they need to be secure. The cable industry has long focused on delivering best-in-class network security and we continue to innovate as we move on towards a 10G experience for subscribers.

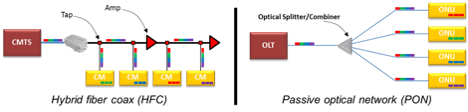

CableLabs® participates in both hybrid fiber coaxial (HFC) and passive optical network (PON) technology development. This includes the development and maintenance of the Data Over Cable Service Interface Specification (DOCSIS®) technology that enables broadband internet service over HFC networks. We work closely with network operators and network equipment vendors to ensure the security of both types of networks. Let’s review these two network architectures and then discuss the threats that HFC and PON networks face. We’ll see that the physical media (fiber or coax) doesn’t matter much to the security of the wired network, and we’ll conclude by briefly discussing the security of DOCSIS HFC networks.

A Review of HFC and PON Architectures

The following diagram illustrates the similarities and differences between HFC and PON.

Both HFC and PON-based FTTH are point-to-multipoint network architectures, which means that in both architectures the total capacity of the network is shared among all subscribers on the network. Most critically, from a security perspective, all downlink subscriber communications in both architectures are present at the terminating network element at the subscriber – the cable modem (CM) or optical network unit (ONU). This necessitates protections for these communications to ensure confidentiality.

In an HFC network, the fiber portion is between a hub or headend that serves a metro area (or portion thereof) and a fiber node that serves a neighborhood. The fiber node converts the optical signal to radio frequency, and the signal is then sent on to each home in the neighborhood over coaxial cable. This hybrid architecture enables continued broadband performance improvements to support higher user bandwidths without the need to replace the coaxial cable throughout the neighborhood. It’s important to note that the communication channels to end users in the DOCSIS HFC network are protected, through encryption, on both the coaxial (radio) and fiber portions of the network.

FTTH is most commonly deployed using a passive optical networking (PON) architecture, which uses a shared fiber down to a point in the access network where the optical signal is split using one or more passive optical splitters and transmitted over fiber to each home. The network element on the network side of this connection is an Optical Line Terminal (OLT) and at the subscriber side is an ONU. There are many standards for PON. The two most common are Gigabit Passive Optical Networks (GPON) and Ethernet Passive Optical Networks (EPON). An interesting architecture option to note is that CableLabs developed a mechanism that allows cable operators to manage EPON technology the same way they manage services over the DOCSIS HFC network – DOCSIS Provisioning of EPON.

In both HFC and PON architectures, encryption is used to ensure the confidentiality of the downlink communications. In DOCSIS HFC networks, encryption is used bi-directionally by encrypting both the communications to the subscriber’s cable modem (downlink) and communications from the subscriber’s cable modem (uplink). In PON, bi-directional encryption is also available.

Attacker View

How might an adversary (a hacker) look at these networks? There are four attack vectors available to adversaries in exploiting access networks:

- Adversaries can directly attack the access network (e.g., tapping the coax or fiber cable).

- They may attack a customer premises equipment (CPE) device from the network side of the service, typically referred to as the wide area network (WAN) side.

- They may attack the CPE device from the home network side, or the local area network (LAN) side.

- And they may attack the network operator’s infrastructure.

Tapping either fiber or coaxial cable is practical. In fact, tools that allow legitimate troubleshooting and management by authorized technicians abound for both. It is incorrect to assume that tapping fiber is difficult or highly technical relative to tapping a coaxial cable; you can easily find several examples on the internet of how simply it is done. Depending on where the media is accessed, all user communications may be available on both the uplink and downlink side. However, both HFC and PON networks support having those communications encrypted, as highlighted above. Of course, that doesn’t mean adversaries can’t disrupt the communications. They can do so in both cases. Such disruption, however, is limited to the houses passed on that specific fiber or coaxial cable; the attack is local and doesn’t scale.

For the other attack vectors, the risks to HFC or PON networks are equivalent. CPE and network infrastructure (such as OLTs or CMTSs) must be hardened against both local and remote attacks regardless of transport media (e.g., fiber, coax).

Security Tools Available to Operators

In both HFC and PON architectures, the network operator can provide the subscriber with an equivalent level of network security. The three primary tools to secure both architectures rely on cryptography. These tools are authentication, encryption, and message hashing.

- Authentication is conducted using a secret of some sort. In the case of HFC, challenge and response are used based on asymmetric cryptography as supported by public key infrastructure (PKI). In FTTH deployments, mechanisms may rely on pre-shared keys, PKI, EAP-TLS (IETF RFC 5216) or some other scheme. The authentication of endpoints should be repeated regularly, which is supported in the CableLabs DOCSIS specification. Regular re-authentication increases the assurance that all endpoints attached to the network are legitimate and known to the network operator.

- Encryption provides the primary tool for keeping communications private. User communications in HFC are encrypted using cryptographic keys negotiated during the authentication step, using the DOCSIS Baseline Privacy Interface Plus (BPI+) specifications. Encryption implementation for FTTH varies. In both HFC and PON, the most common encryption algorithm used today is AES-128.

- Message hashing ensures the integrity of messages in the system, meaning that a message cannot be changed without detection once it has been sent. Sometimes this capability is built into the encryption algorithm. In DOCSIS networks, all subscriber communications to and from the cable modem are hashed to ensure integrity, and some network control messages receive additional hashing.

It is important to understand where in the network these cryptography tools are applied. In DOCSIS HFC networks, user communications are protected between the cable modem and the CMTS. If the CMTS functionality is provided by another device such as a Remote PHY Device (RPD) or Remote MACPHY Device (RMD), DOCSIS terminates there. However, the DOCSIS HFC architecture provides authentication and encryption capabilities to secure the link to the hub as well. In FTTH, the cryptographic tools provide protection between the ONU and the OLT. If the OLT is deployed remotely as may be the case with RPDs or RMDs, the backhaul link should also be secured in a similar manner.

The Reality – Security in Cable

The specifications and standards that outline how HFC and PON should be deployed provide good cryptography-based tools to authenticate network access and keep both network and subscriber information confidential. The security of the components of the architecture at the management layer may vary per operator. However, operators are very adept at securing both cable modems and ONUs. And, as our adversaries innovate new attacks, we work on incorporating new capabilities to address those attacks – cybersecurity innovation is a cultural necessity of security engineering!

Building on more than two decades of experience, CableLabs continues to advance the security features available in the DOCSIS specification, soon enabling new or updated HFC deployments to be even more secure and ready for 10G. The DOCSIS 4.0 specification has introduced several advanced security controls, including mutual authentication, perfect forward secrecy, and improved security for network credentials such as private keys. Given our strong interest in both optical and HFC network technologies, CableLabs will ensure its own specifications for PON architectures adopt these new security capabilities and will continue to work with other standards bodies to do the same.

Security

Revisiting Security Fundamentals Part 3: Time to Examine Availability

As I discussed in parts 1 and 2 of this series, cybersecurity is complex. Security engineers rely on the application of fundamental principles to keep their jobs manageable. In the first installment of this series, I focused on confidentiality, and in the second installment, I discussed integrity. In this third and final part of the series, I’ll review availability. The application of these three principles in concert is essential to ensuring excellent user experiences on broadband.

Defining Availability

Availability, like most things in cybersecurity, is complicated. Availability of broadband service, in a security context, ensures timely and reliable access to and use of information by authorized users. Achieving this, of course, can be challenging. In my opinion, the topic is underrepresented among security professionals, and we have to rely on additional expertise to achieve our availability goals. The supporting engineering discipline for ensuring availability is reliability engineering. Many tomes are available to provide detailed insight on how to engineer systems to achieve desired reliability and availability.

How are the two ideas of reliability and availability different? Reliability focuses on how a system will function under specific conditions for a period of time. In contrast, availability focuses on how likely it is that a system will function at a specified moment or over a specified interval of time. There are important additional terms to understand: quality, resiliency, and redundancy. These are addressed in the following paragraphs. Readers wanting more detail may consider reviewing some of the papers on reliability engineering at ScienceDirect.

Quality: We need to assure that our architectures and components are meeting requirements. We do that through quality assurance and reliability practices. Software and hardware vendors design, analyze, and test their solutions (both in development and then as part of shipping and integration testing) to assure they are meeting their reliability and availability requirements. When results aren’t sufficient, vendors apply process improvements (possibly including re-engineering) to bring their design, manufacturing, and delivery processes into line with the reliability and availability requirements.

Resiliency: Quality alone, however, isn’t enough. We need to make sure our services are resilient – that is, that our systems will recover even when something fails (and something will fail – in fact, many things are going to fail over time, sometimes concurrently). There are a few key aspects we address when making our networks resilient. One is that when something fails, it does so loudly so the operator knows something failed: either the failing element sends messages to the management system, or the systems that connect to it or rely upon it tell the management system that the element is failing or has failed. Another is that the system can gracefully recover, automatically restarting from the point at which it failed.

Redundancy: And, finally, we apply redundancy. That is to say, we set up the architecture so that critical components are replicated (usually in parallel). This may happen within a network element (such as having two network controllers or two power supplies or two cooling units) with failover (and appropriate network management notifications) from one unit to another. Sometimes we’ll use clustering to both distribute load and achieve redundancy (sometimes referred to as M:N redundancy). Sometimes, we’ll have redundant network elements (often employed in data centers) or multiple routes by which network elements can connect through networks (using Ethernet, Internet, or even SONET). In cases where physical redundancy is not reasonable, we can introduce redundancy across other dimensions including time, frequency, channel, etc. How much redundancy a network element should employ depends on the math that balances reliability and availability to achieve your service requirements.
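As a rough illustration of the kind of math involved, the sketch below computes the availability of replicated elements in parallel and of elements chained in series. It assumes failures are independent, which real networks often violate (shared power, shared conduit), and the function names and the 0.99 figure are hypothetical, chosen only for the example.

```python
def parallel_availability(element_availability: float, copies: int) -> float:
    """Availability of `copies` identical, independent elements in parallel.

    The group is down only when every copy is down at the same time.
    """
    if not 0.0 <= element_availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if copies < 1:
        raise ValueError("need at least one element")
    return 1.0 - (1.0 - element_availability) ** copies


def series_availability(availabilities: list[float]) -> float:
    """Availability of a chain in which every element must be up."""
    result = 1.0
    for availability in availabilities:
        result *= availability
    return result


if __name__ == "__main__":
    single_supply = 0.99  # hypothetical availability of one power supply
    print("one supply: ", single_supply)
    print("two supplies:", parallel_availability(single_supply, 2))
    print("two elements in series:", series_availability([0.99, 0.99]))
```

Under these assumptions, duplicating an element sharply reduces its contribution to downtime, while every additional element in series lowers the end-to-end figure.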

I’ve mentioned requirements several times. What requirements do I mean? A typical one, but not the only one and not even necessarily the most important one, is the Mean Time Between Failures (MTBF). This statistic represents the expected average length of time between failures of a given element of concern, typically many thousands (even millions for some critical, well-understood components) of hours. There are variations. Seagate, for example, switched to Annualized Failure Rate (AFR), which is the “probable percent of failures per year, based on the [measured or observed failures] on the manufacturer’s total number of installed units of similar type.” The key thing here, though, is to remember that MTBF and AFR are statistical predictions based on analysis and measured performance. It’s also important to estimate and measure availability – at the software, hardware, and service layers. If your measurements aren’t hitting the targets you set for a service, then something needs to improve.

A parting note, here. Lots of people talk about availability in terms of the percentage of time a service (or element) is up in a year. These are thrown around as “how many 9’s is your availability?” For example, “My service is available at 4x9s (99.99%).” This is often a misused estimate because the user typically doesn’t know what is being measured, what it applies to (e.g., what’s included in the estimate), or even the basis for how the measurement is made. Nevertheless, it can be useful when backed by evidence, especially with statistical confidence.

Warnings about Availability

Finally, things ARE going to fail. Your statistics will, sometimes, be found wanting. Therefore, it’s critical to also consider how long it will take to RECOVER from a failure. In other words, what is your repair time? There are, of course, statistics for estimating this as well. A common one is Mean Time to Repair (MTTR). This seems like a simple term, but it isn’t. Really, MTTR is a statistic that measures how maintainable or repairable systems are. And measuring and estimating repair time is critical. Repair time can be the dominant contributor to unavailability.
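To see why repair time matters so much, here is a minimal sketch using the common steady-state approximation, availability = MTBF / (MTBF + MTTR). It treats MTBF as the mean up time between failures, and the numbers are hypothetical rather than measurements from any real network.

```python
def steady_state_availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Long-run fraction of time an element is up.

    Assumes MTBF is the mean up time between failures and MTTR is the
    mean time needed to restore service after each failure.
    """
    if mtbf_hours <= 0 or mttr_hours < 0:
        raise ValueError("MTBF must be positive and MTTR non-negative")
    return mtbf_hours / (mtbf_hours + mttr_hours)


if __name__ == "__main__":
    mtbf = 10_000.0  # hypothetical element: fails about once every 10,000 hours
    for mttr in (1.0, 4.0, 24.0):
        availability = steady_state_availability(mtbf, mttr)
        print(f"MTTR {mttr:5.1f} h -> availability {availability:.5f}")
```

With the same MTBF, stretching the repair time from one hour to a day costs the element most of a "9", which is the sense in which repair time can dominate unavailability.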

So… why don’t we just make everything really reliable and all of our services highly available? Ultimately, this comes down to two things. One, you can’t predict all things sufficiently. This weakness is particularly important in security and is why availability is included as one of the three security fundamentals. You can’t predict well or easily how an adversary is going to attack your system and disrupt service. When the unpredictable happens, you figure out how to fix it and update your statistical models and analysis accordingly; and you update how you measure availability and reliability.

The second thing is simply economics. High availability is expensive. It can be really expensive. Years ago, I did a lot of work on engineering and architecting metropolitan, regional, and nationwide optical networks with Dr. Jason Rupe (one of my peers at CableLabs today). We found, through lots of research, that the general rule of thumb was for each “9” of availability, you could expect an increase of cost of service of around 2.5x on typical networks. Sounds extreme, doesn’t it? Availability of a private line or Ethernet circuit between two points (regional or national) is typically quoted at around 99%. That’s a lot of downtime (over 80 hours a year) – and it won’t all happen at a predictable time within a year. Getting that down to around 9 hours a year, or 99.9% availability, will cost 2.5x that, usually. Of course, architectures and technology do matter. This is just sharing my personal experience. What’s the primary driver of the cost? Redundancy. More equipment on additional paths of connectivity between that equipment.
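The arithmetic behind those figures can be sketched in a few lines. The 2.5x multiplier is the rule of thumb quoted above, not a general law; the function names, the 8,760-hour year, and the choice of two 9s as the cost baseline are assumptions made for this illustration.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def annual_downtime_hours(availability: float) -> float:
    """Expected hours of downtime per year at a given availability."""
    return (1.0 - availability) * HOURS_PER_YEAR


def relative_cost(nines: int, baseline_nines: int = 2, factor: float = 2.5) -> float:
    """Cost relative to the baseline, using the rule of thumb quoted above."""
    return factor ** (nines - baseline_nines)


if __name__ == "__main__":
    for nines, availability in ((2, 0.99), (3, 0.999), (4, 0.9999)):
        downtime = annual_downtime_hours(availability)
        cost = relative_cost(nines)
        print(f"{availability:.2%}: {downtime:6.2f} h/year down, about {cost:.2f}x cost")
```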

Availability Challenges

There are lots of challenges in designing a highly available, cost-effective access network. Redundancy is challenging. It’s implemented where economically reasonable, particularly at the CMTSs, routers, switches, and other server elements at the hub, headend, or core network. It’s a bit harder to achieve in the HFC plant. So, designers and engineers tend to focus more on the reliability of components and software, including the CMs, nodes, amplifiers, and all the other elements that make DOCSIS® work. Finally, we do measure our networks. A major tool for tracking and analyzing network failure causes and for maximizing the time between service failures is DOCSIS® Proactive Network Maintenance (PNM). PNM is used to identify problems in the physical RF plant including passive and active devices (taps, nodes, amplifiers), connectors, and the coax cable.

From a strictly security perspective, what can be done to improve the availability of services? Denial of service attacks are monitored and mitigated typically at the ingress points to networks (border routers) through scrubbing. Another major tool is ensuring authorized access through authentication and access controls.


Availability Strategies

What are the strategies and tools reliability and security engineers might apply?

- Model your system and assess availability diligently. Include traditional systems reliability engineering faults and conditions, but also include security faults and attacks.

- Execute good testing prior to customer exposure. Said another way, implement quality control and process improvement practices.

- When redundancy is impractical, reliability of elements becomes the critical availability design consideration.

- Measure and improve. PNM can significantly improve availability. But measure what matters.

- Partner with your suppliers to assure reliability, availability, and repairability throughout your network, systems, and supply chains.

- Leverage PNM fully. Solutions like DOCSIS® create a separation from a network problem and a service problem. PNM lets operators take advantage of that difference to fix network problems before they become service problems.

- Remember that repair time can be a major factor in the overall availability and customer experience.

Availability in Security

It’s important that we consider availability in our security strategies. Security engineers often get too focused on threats caused by active adversaries. We must also include other considerations that disrupt the availability of experiences to subscribers. Availability is a fundamental security component and—like confidentiality and integrity—should be included in any security-by-design strategy.


Security

Revisiting Security Fundamentals Part 2: Integrity

Let’s revisit the fundamentals of security during this year’s security awareness month – part 2: Integrity.

As I discussed in Part 1 of this series, cybersecurity is complex. Security engineers rely on the application of fundamental principles to keep their jobs manageable. The first blog focused on confidentiality. This second part will address integrity. The third and final part of the series will review availability. Application of these three principles in concert is essential to ensuring excellent user experiences on broadband.

Nearly everyone who uses broadband has some awareness of confidentiality, though most may think of it exclusively as enabled by encryption. That’s not a mystery – our browsers even tell us when a session is “secure” (meaning the session they have initiated with a given server is at least using HTTPS, which is encrypted). Integrity is a bit more obscure and less known. It’s also less widely implemented and, when it is implemented, not always done well.

Defining Integrity

In their special publication, “An Introduction to Information Security,” NIST defines integrity as “a property whereby data has not been altered in an unauthorized manner since it was created, transmitted, or stored.” This definition is a good starting place, but it can be extended in today’s cybersecurity context. Integrity needs to be applied not only to data, but also to the hardware and software systems that store and process that data and the networks that connect those systems. Ultimately, integrity is about proving that things are as they should be and that they have not been changed, intentionally or inadvertently or accidentally (like magic), in unexpected or unauthorized ways.

How is this done? Well, that answer depends on what you are applying integrity controls to. (Again, this blog post isn’t intended to be a tutorial in-depth on the details but a simple update and overview.) The simplest and most well-known approach to ensuring integrity is to use a signature. Most people are familiar with this as they sign a document or write a check. And most people know that the bank, or whomever else we’re signing a document for, knows that signatures are not perfect so you often have to present an ID (passport, driver’s license, whatever) to prove that you are the party to which your signature attests on that document or check.

We can also implement similar steps in information systems, although the process is a bit different. We produce a signature of data by using math; in fact, integrity is a field of cryptography that complements encryption. Producing a signature takes two steps. First, data is run through a mathematical function called a hash. Hashing is simply a one-way process that reduces a large piece of data to a small, fixed number of bits (128-256 bits is typical) in a way that is computationally difficult to reverse. The result is often referred to as a digest, and it is very unlikely that different source data will produce the same digest (when that happens, we call it a collision). This alone can be useful and is used in many ways in information systems. But it doesn’t attest to the source of the data or the authenticity of the data. It just shows whether the data has been changed. Second, if we encrypt the digest, perhaps using asymmetric cryptography supported by a public key infrastructure, we produce a signature. That signature can now be validated through a cryptographic challenge and response. This is largely equivalent to being asked to show your ID when you sign a check.
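The hashing step on its own can be sketched with Python’s standard `hashlib` module. SHA-256 is used here as one widely deployed hash function; the messages and the `digest` helper name are made up for illustration.

```python
import hashlib


def digest(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as a hexadecimal string."""
    return hashlib.sha256(data).hexdigest()


if __name__ == "__main__":
    original = b"Pay the bearer 100 dollars"
    tampered = b"Pay the bearer 900 dollars"

    # The digest has a fixed size (256 bits, 64 hex characters)
    # regardless of the size of the input.
    print(digest(original))
    print(digest(tampered))

    # A one-character change produces a different digest, which is how
    # a change to the data is detected.
    print("unchanged:", digest(original) == digest(original))
    print("tampered: ", digest(original) == digest(tampered))
```

A bare digest only detects change. Anyone who can alter the data can also recompute the digest, which is why the second step, binding the digest to a key, is needed to attest to the source.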

One thing to be mindful of is that encryption doesn’t ensure integrity. Although an adversary who intercepts an encrypted message may not be able to read that message, they may be able to alter the encrypted text and send it on its way to the intended recipient. That alteration may be decrypted as valid. In practice this is hard because without knowledge of the original message, any changes are likely to just be gibberish. However, there are attacks in which the structure of the original message is known. Some ciphers do include integrity assurances, as well, but not all of them. So, implementors need to consider what is best for a given solution.
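A toy example makes the point concrete. The sketch below uses a simple XOR stream cipher purely for illustration; it is not a real cipher and not how DOCSIS encrypts traffic. It assumes the attacker knows the message layout (the amount digit sits at a fixed position) but never sees the key.

```python
import os


def xor_bytes(data: bytes, keystream: bytes) -> bytes:
    """Toy stream cipher: XOR each byte with a keystream byte.

    The same function encrypts and decrypts. For illustration only.
    """
    if len(data) != len(keystream):
        raise ValueError("data and keystream must be the same length")
    return bytes(d ^ k for d, k in zip(data, keystream))


def tamper(ciphertext: bytes, position: int, known: bytes, wanted: bytes) -> bytes:
    """Change one plaintext byte from `known` to `wanted` without the key."""
    altered = bytearray(ciphertext)
    altered[position] ^= known[0] ^ wanted[0]
    return bytes(altered)


def demo() -> bytes:
    message = b"PAY 100 USD"
    keystream = os.urandom(len(message))  # secret held only by the endpoints
    ciphertext = xor_bytes(message, keystream)
    # The attacker sees only the ciphertext and guesses the message layout.
    forged = tamper(ciphertext, position=4, known=b"1", wanted=b"9")
    return xor_bytes(forged, keystream)  # what the receiver decrypts


if __name__ == "__main__":
    print(demo())
```

The receiver decrypts a well-formed message with a different amount and has no way to tell it was altered, which is the gap that a message authentication code or an integrity-protecting cipher mode closes.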

Approaches to Integrity

Integrity is applied to data at motion and at rest, and to systems, software, and even supply chains somewhat differently. Here’s a brief summary of the tools and approaches for each area:

- Information in motion: How is the signature scheme above applied to transmitting data? The most common means uses a process very similar to what is described above. A Hash-based Message Authentication Code (HMAC) combines the contents of a packet with a secret key shared by the sender and receiver to produce a keyed digest. The receiver recomputes the digest with the same key; a match shows that the packet was not altered and that it was produced by a party holding the key. One old but still relevant description of HMAC is RFC 2104, available from the IETF.

- Information at rest: In many ways, assuring integrity of files on a storage server or a workstation is more challenging than integrity of transmitted information. Usually, storage is shared by many organizations or departments. What secret keys should be used to produce a signature of those files? Sometimes, things are simplified. Access controls can be used to ensure only authorized parties can access a file. When access controls are effective, perhaps only hashing of the data file is sufficient to prove integrity. Again, some encryption schemes can include integrity protection. The key problem noted above still remains a challenge there. Most storage solutions, both proprietary and open source, provide a wide range of integrity protection options and it can be challenging for the security engineer to architect the best solution for a given application.

- Software: Software is, of course, a special type of information. And so, the ideas of how to apply integrity protections to information at rest can apply to protecting software. However, how software is used in modern systems with live builds adds additional requirements. Namely, this means that before a given system uses software, that software should be validated as being from an authorized source and that it has not been altered since being provided by that source. The same notion of producing a digest and then encrypting the digest to form a signature applies, but that signature needs to be validated before the software is loaded and used. In practice, this is done very well in some ecosystems and either done poorly or not at all in other systems. In cable systems, we use a process referred to as Secure Software Download to ensure the integrity of firmware downloaded to cable modems. (See section 14 of Data-Over-Cable Service Interface Specifications 3.1)

- Systems: Systems are comprised of hardware and software elements, yet the overall operation of the hardware tends to be governed by configurations and settings stored in files and software. If the files and software are changed, the operation of the system will be affected. Consequently, the integrity of the system should be tracked and periodically evaluated. Integrity of the system can be tracked through attestation – basically producing a digest of the entire system and then storing that in protected hardware and reporting it to secure attestation servers. Any changes to the system can be checked to ensure they were authorized. The processes for doing this are well documented by the Trusted Computing Group. Another process well codified by the Trusted Computing Group is Trusted Boot. Trusted boot uses secure modules included in hardware to perform a verified launch of an OS or virtual environment using attestation.

- Supply chain: A recent focus area for integrity controls has been supply chains. How do you know where your hardware or software is coming from? Is the system you ordered the system you received? Supply chain provenance can be attested using a wide range of tools, and application of distributed ledger or blockchain technologies is a prevalent approach.
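For information in motion, the HMAC construction defined in RFC 2104 can be sketched with Python’s standard `hmac` module. HMAC relies on a secret key shared by sender and receiver. The key, the message, and the helper names below are hypothetical, and key distribution is out of scope for the sketch.

```python
import hashlib
import hmac


def make_tag(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over `message` using the shared `key`."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(make_tag(key, message), tag)


if __name__ == "__main__":
    shared_key = b"example-key-known-to-both-ends"  # hypothetical shared secret
    packet = b"set dns-server 192.0.2.53"
    tag = make_tag(shared_key, packet)

    print("authentic packet accepted:", verify(shared_key, packet, tag))
    print("altered packet accepted:  ",
          verify(shared_key, b"set dns-server 203.0.113.66", tag))
    print("wrong key accepted:       ", verify(b"guessed-key", packet, tag))
```

Using `hmac.compare_digest` rather than `==` avoids leaking, through timing, how many leading bytes of a forged tag were correct.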

Threats to Integrity

What are the threats to integrity? One example that has impacted network operators and their users repeatedly is changing of the DNS settings on gateways. If an adversary can change the DNS server on a gateway to a server they control (incorporation of strong access controls minimizes this risk), then they can selectively redirect DNS queries to spoofed hosts that look like authentic parties (e.g., banks, credit card companies, charity sites) and get customers’ credentials. The adversary can then use those credentials to access the legitimate site and do whatever that allows (e.g., empty bank accounts). This can also be done by altering the DNS query in motion at a compromised router or other server through which the query traverses. (HMAC or encryption with integrity protection would prevent this.) Software attacks can occur even at the source but also at intermediate points in the supply chain. Tampered software is a prevalent way malware is introduced to end-points and can be very hard to detect because the software appears legitimate. (Consider the Wired article, “Supply Chain Hackers Snuck Malware Into Videogames.”)

The Future of Integrity

Cable technology has already addressed many integrity threats. As mentioned above, DOCSIS technology already includes support for Secure Software Download to provide integrity verification of firmware. This addresses both software supply chain provenance and tampering of firmware. Many of our management protocols are also supported by HMAC. Future controls will include trusted boot and hardened protection of our encryption keys (also used for signatures). We are designing solutions for virtualized service delivery, and integrity controls are pervasively included across the container and virtual machine architectures being developed.

Adding integrity controls to our tools for ensuring confidentiality provides defense in depth. Integrity is a fundamental security component and should be included in any security-by-design strategy.

Security

Revisiting Security Fundamentals

It’s Cybersecurity Awareness Month—time to study up!

Cybersecurity is a complex topic. The engineers who address cybersecurity must not only be security experts; they must also be experts in the technologies they secure. In addition, they have to understand the ways that the technologies they support and use might be vulnerable and open to attack.

Another layer of complexity is that technology is always evolving. In parallel with that evolution, our adversaries are continuously advancing their attack methods and techniques. How do we stay on top of that? We must be masters of security fundamentals. We need to be able to start with foundational principles and extend our security tools, techniques and methods from there: Make things no more complex than necessary to ensure safe and secure user experiences.

In celebration of Cybersecurity Awareness Month, I’d like to devote a series of blog posts to address some basics about security and to provide a fresh perspective on why these concepts remain important areas of focus for cybersecurity.

Three Goals

At the most basic level, the three primary goals of security for cable and wireless networks are to ensure the confidentiality, integrity and availability of services. NIST documented these concepts well in its special publication, “An Introduction to Information Security.”

- Confidentiality ensures that only authorized users and systems can access a given resource (e.g., network interface, data file, processor). This is a pretty easy concept to understand: The most well-known confidentiality approach is encryption.

- Integrity, which is a little more obscure, guards against unauthorized changes to data and systems. It also includes the idea of non-repudiation, which means that the source of a given message (or packet) is known and cannot be denied by that source.

- Availability is the uncelebrated element of the security triad. It’s often forgotten until failures in service availability are recognized as being “a real problem.” This is unfortunate because engineering to ensure availability is very mature.

In Part 1 of this series, I want to focus on confidentiality. I’ll discuss integrity and availability in two subsequent blogs.

As I mentioned, confidentiality is a security function that most people are aware of. Encryption is the most frequently used method to assure confidentiality. I’m not going to go into a primer about encryption. However, it is worth talking about the principles. Encryption is about applying math using space, power and time to ensure that only parties with the right secret (usually a key) can read certain data. Ideally, the math used should require much greater space, power or time for an unauthorized party without the right secret to read that data. Why does this matter? Because encryption provides confidentiality only as long as the math used is sound and the amount of space, power and time adversaries would need to read the data remains impractical. That is often a good assumption, but history has shown that over time, a given encryption solution will eventually become insecure. So, it’s a good idea to apply other approaches to provide confidentiality as well.

What are some of those approaches? Ultimately, the other solutions prevent access to the data being protected. The notion is that if you prevent access (either physically or logically) to the data being protected, then it can’t be decrypted by unauthorized parties. Solutions in this area fall primarily into two strategies: access controls and separation.

Access controls validate that requests to access data or use a resource (like a network) come from authorized sources (identified using network addresses and other credentials). For example, an access control list (ACL) is used in networks to restrict resource access to specific IP or MAC addresses. As another example, a cryptographic challenge and response (often enabled by public key cryptography) might be used to ensure that the requesting entity has the “right credentials” to access data or a resource. One method we all use every day is passwords. Every time we “log on” to something, like a bank account, we present our username (identification) and our (hopefully) secret password.
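To make these two ideas concrete, here is a minimal Python sketch of an allow-list check and a challenge and response exchange. It is an illustration under stated assumptions, not how any particular network product works: the MAC addresses and function names are hypothetical, and the challenge and response uses a shared secret with HMAC (available in the Python standard library) rather than the public key cryptography mentioned above.

```python
import hashlib
import hmac
import secrets

# Hypothetical allow-list of MAC addresses (illustrative values only).
ALLOWED_MACS = {"00:11:22:33:44:55", "66:77:88:99:aa:bb"}


def acl_permits(mac: str) -> bool:
    """Return True if the MAC address appears on the allow-list."""
    return mac.lower() in ALLOWED_MACS


def make_challenge() -> bytes:
    """Verifier side: generate a fresh random nonce for each attempt."""
    return secrets.token_bytes(16)


def respond(shared_key: bytes, challenge: bytes) -> bytes:
    """Requester side: prove knowledge of the key without sending the key."""
    return hmac.new(shared_key, challenge, hashlib.sha256).digest()


def verify(shared_key: bytes, challenge: bytes, response: bytes) -> bool:
    """Verifier side: recompute the expected response, compare in constant time."""
    expected = hmac.new(shared_key, challenge, hashlib.sha256).digest()
    return hmac.compare_digest(expected, response)
```

Because the challenge is a fresh random value each time, a recorded response cannot simply be replayed later, which is the main advantage over sending a password directly.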

Separation is another approach to confidentiality. One extreme example of separation is to establish a completely separate network architecture for conveying and storing confidential information. The government often uses this tactic, but even large enterprises use it with “private line networks.” Something less extreme is to use some form of identification or tagging to encapsulate packets or frames so that only authorized endpoints can receive traffic. This is achieved in Ethernet by using virtual LANs (VLANs). Each frame is tagged with a VLAN tag, either by the endpoint or by the switch to which it connects, and only endpoints in the same VLAN can receive traffic from that source endpoint. Higher network layer solutions include IP Virtual Private Networks (VPNs) or, sometimes, Multiprotocol Label Switching (MPLS).
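The VLAN idea can be sketched in a few lines of Python. This is a simplified model, not a real switch implementation: the port-to-VLAN table and the function names are assumptions made up for the example, and it ignores details such as trunk ports and MAC learning.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    src_port: int
    vlan_id: int
    payload: bytes


# Hypothetical switch configuration: port number -> VLAN ID.
PORT_VLAN = {1: 10, 2: 10, 3: 20, 4: 20}


def tag_frame(src_port: int, payload: bytes) -> Frame:
    """Tag an incoming frame with the VLAN assigned to its ingress port."""
    return Frame(src_port=src_port, vlan_id=PORT_VLAN[src_port], payload=payload)


def delivery_ports(frame: Frame) -> list[int]:
    """Ports allowed to receive the frame: same VLAN, excluding the sender."""
    return sorted(
        port
        for port, vlan in PORT_VLAN.items()
        if vlan == frame.vlan_id and port != frame.src_port
    )
```

With this table, a frame arriving on port 1 can reach only port 2, and ports 3 and 4 never see it, even though all four ports share the same physical switch.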

Threats to Confidentiality

What are the threats to confidentiality? I’ve already hinted that encryption isn’t perfect. The math on which a given encryption approach is based can sometimes be flawed. This type of flaw can be discovered decades after the original math was developed. That’s why it’s traditionally important to use cipher suites approved by appropriate government organizations such as NIST or ENISA. These organizations work with researchers to develop, select, test and validate given cryptographic algorithms as being provably sound.

However, even when an algorithm is sound, the way it’s implemented in code or hardware may have systemic errors. For example, most encryption approaches require the use of random number generators to execute certain functions. If a given code library for encryption uses a random number generator that’s biased in some way (less than truly random), the space, power and time necessary to achieve unauthorized access to encrypted data may be much less than intended.
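A rough way to see the effect of a biased generator is to estimate how much unpredictability a key really carries. The sketch below uses Shannon entropy and assumes, purely for illustration, that every key bit is drawn independently with the same bias. Real generator flaws are rarely this simple, and cryptographers often prefer the more conservative min-entropy measure.

```python
import math


def bit_entropy(p_one: float) -> float:
    """Shannon entropy, in bits, of one bit that is 1 with probability p_one."""
    if p_one in (0.0, 1.0):
        return 0.0
    p_zero = 1.0 - p_one
    return -(p_one * math.log2(p_one) + p_zero * math.log2(p_zero))


def effective_key_bits(key_length: int, p_one: float) -> float:
    """Entropy of a key whose bits are drawn independently with the same bias."""
    return key_length * bit_entropy(p_one)
```

For an unbiased generator, `effective_key_bits(128, 0.5)` is the full 128 bits. If each bit came up 1 about 90% of the time, the same 128-bit key would carry only around 60 bits of entropy, so an attacker who knows the bias has far less work to do than the key length suggests.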

One threat considered imminent to current cryptography methods is quantum computing. Quantum computers enable new algorithms that reduce the power, space and time necessary to solve certain specific problems, compared with what traditional computers require. For cryptography, two such algorithms are Grover’s and Shor’s.

Grover’s algorithm. Grover’s quantum algorithm reduces the length of time (number of computations) necessary to do unstructured search. This means that it may take only about the square root of the number of guesses otherwise necessary to find the secret (the key) needed to read a given piece of encrypted data, which effectively halves the key’s strength in bits. Given current commonly used encryption algorithms, which may provide confidentiality against two decades’ worth of traditional cryptanalysis, Grover’s algorithm is only a moderate threat, at least until you consider that systemic weaknesses in some implementations of those encryption algorithms may result in less than ideal security.

Shor’s algorithm. Shor’s quantum algorithm is a more serious threat, specifically to asymmetric cryptography. Current asymmetric cryptography relies on mathematics that assumes it’s hard to factor large integers into primes (as used by the Rivest-Shamir-Adleman algorithm) or to compute discrete logarithms in a mathematical group (as used in elliptic curve cryptography). Shor’s quantum algorithm makes very quick work of both problems; in fact, given a sufficiently large quantum computer able to execute the algorithm, it may be possible to solve them nearly instantly.
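As a back-of-the-envelope illustration of Grover’s speed-up for symmetric keys (an order-of-magnitude estimate that ignores the considerable practical cost of running a large quantum computer), consider a key of $k$ bits, so that there are $N = 2^{k}$ possible keys:

```latex
\text{classical exhaustive search} \sim N = 2^{k}
\qquad\text{versus}\qquad
\text{Grover search} \sim \sqrt{N} = 2^{k/2}
```

For $k = 128$ this is roughly $2^{64}$ quantum search steps instead of $2^{128}$ classical guesses, and for $k = 256$ it is roughly $2^{128}$. That is why moving to longer symmetric keys is commonly suggested as a hedge against Grover’s algorithm, whereas Shor’s algorithm calls for different math altogether.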

It’s important to understand the relationship between confidentiality and privacy. They aren’t the same. Confidentiality protects the content of a communication or data from unauthorized access, but privacy extends beyond the technical controls that protect confidentiality to the business practices of how personal data is used. Moreover, in practice, a security infrastructure may require some data to be encrypted while in motion across a network, but perhaps not when at rest on a server. Also, while confidentiality, in a security context, is pretty much a straightforward technical topic, privacy is about rights, obligations and expectations related to the use of personal data.

Why do I bring it up here? Because a breach of confidentiality may also be a breach of privacy. And because application of confidentiality tools alone does not satisfy privacy requirements in many situations. Security engineers – adversarial engineers – need to keep these things in mind and remember that today privacy violations result in real costs in fines and brand damage to our companies.

Wow! Going through all that was a bit more involved than I intended – let’s finish this blog. Cable and wireless networks have implemented many confidentiality solutions. WiFi, LTE, and DOCSIS technology all use encryption to ensure confidentiality on the shared mediums they use to transport packets. The cipher that DOCSIS technology typically uses is AES-128, which has stood the test of time. We can anticipate future advances. One is a NIST initiative to select a new lightweight cipher – something that uses fewer processing resources than AES. This is a big deal. For just a slight reduction in security (measured using a somewhat obscure metric called “security bits”), some of the candidates being considered by NIST may use half the power or space compared to AES-128. That may translate to lower cost and higher reliability of endpoints that use the new ciphers.

Another area the cable industry, including CableLabs, continues to track is quantum-resistant cryptography. There are two approaches here. One is to use quantum technologies (to generate keys or transmit data) that may be inherently secure against quantum-computer-based cryptanalysis. Another approach is to use quantum-resistant algorithms (e.g., new math that is resistant to cryptanalysis using Shor’s and Grover’s algorithms) implemented using traditional computing methods. Both approaches are showing great promise.

There’s a quick review of confidentiality. Next up? Integrity.

Want to learn more about cybersecurity? Register for our upcoming webinar: Links in the Chain: CableLabs' Primer on What's Happening in Blockchain. Block your calendars. Chain yourselves to your computers. You will not want to miss this webinar on the state of Blockchain and Distributed Ledger Technology as it relates to the Cable and Telecommunications industry.

Security

Blockchain Enters the Cable Industry

A version of this article appeared in Broadband Library.

Blockchain is one of today’s most discussed and visible technologies. Some technologists consider blockchain to be the most significant technological innovation since the dawn of the Internet. Many researchers have begun to see blockchain applied to Internet of Things (IoT) security, providing better consumer control and transparency of privacy rights and options, private and public sector voting, and more. And yet, to a significant segment of the population, blockchain remains a mystery. What is it? And how can it apply to the cable industry?

What Is Blockchain?

Finding a definition of blockchain that doesn’t involve a distributed database or a reference to Bitcoin can be difficult. Perhaps a simplistic but concise definition is that a blockchain is an immutable, distributed method of record-keeping for transactions—a ledger that is visible to the participating community.

- Immutable means that the information that a blockchain contains cannot be changed.

- Distributed means that the information is replicated among many participants (in Bitcoin terms, nodes).

- Ledger implies that the blockchain records transactions.

- Visible to the participating community means that every transaction recorded in the ledger is visible to every participant (user or implementer) of the blockchain.

In short, blockchain is a big deal. Its benefits are enabled through a synergy of cryptography—the application of math to protect data—and network algorithms that allow distributed systems to manage consensus. Combining these concepts, blockchain provides the ability to create a history of transactions that is significantly more expensive to change than it was to create. We’ve never had that ability before. Revisionist historians should be concerned!
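The cryptographic half of that synergy can be illustrated with a simple hash chain. The Python sketch below is a minimal illustration, not a real blockchain: it has no distribution, consensus or proof of work, and the block layout and function names are assumptions chosen for the example. It only shows why changing an old record is detectable once later records are linked to it.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder predecessor for the first block


def block_hash(index: int, prev_hash: str, data: str) -> str:
    """Hash the block's contents together with its predecessor's hash."""
    body = json.dumps({"index": index, "prev": prev_hash, "data": data}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_block(chain: list[dict], data: str) -> None:
    """Append a new block that commits to the current tip of the chain."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    chain.append({
        "index": index,
        "prev": prev_hash,
        "data": data,
        "hash": block_hash(index, prev_hash, data),
    })


def is_valid(chain: list[dict]) -> bool:
    """Recompute every hash and check that each block links to its predecessor."""
    prev_hash = GENESIS_HASH
    for index, block in enumerate(chain):
        if block["index"] != index or block["prev"] != prev_hash:
            return False
        if block["hash"] != block_hash(index, prev_hash, block["data"]):
            return False
        prev_hash = block["hash"]
    return True
```

Editing the data in any earlier block makes `is_valid` fail unless every later block is recomputed as well. In a distributed ledger, the consensus rules are what make that recomputation expensive across many independent participants.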

Blockchain and Cable—Hype vs. Reality

To appreciate how blockchains can be applied to cable, we have to get past the hype. According to the hype:

- Blockchains are the best technology to solve every trust and security problem in existence. That’s simply not true.

- Blockchains are the secret to disintermediation, which allows the elimination of middlemen and the need for people to know who they’re dealing with. That’s certainly controversial, and it may be somewhat true. But how many people believe getting rid of the middleman works out well?

The reality is that blockchains allow us to create histories of transactions (which we used to call logs) with unprecedented integrity. Although that may seem somewhat boring, it is transformational. Transactions recorded on a blockchain become statements of fact. There are many use cases where this concept could build new types of relationships between operators and customers, between operators, and between regulators and the regulated. Information flows can now be synchronized with high fidelity. Transparency in business operations can be provided where legal and helpful.

2018—The Year of the Blockchain

Cable operators are developing capabilities now, but it’s too early to share successes and lessons learned. This year, 2018, is the year that cable starts to integrate blockchain solutions, but it will be quiet and subtle.

Should cable operators work together to create their own blockchains? Perhaps. Ensuring control of the software that enables a blockchain to work across multiple partners will be essential to the success of blockchain projects. Governance of the code base and the processes to develop consensus is at the heart of implementing blockchains. Although blockchain use cases are often subtle, they can also be business-critical once they’re mature.

Interested in learning more? Subscribe to our blog to stay current on blockchain and the cable industry.

Education

Diversity and Excellence – Investing in the Future with Colorado State University

Recently, I attended the Industry Advisory Board for the Computer Science Department at Colorado State University (CSU) and the Advisory Board for CSU’s participation in a National Science Foundation partnership on cybersecurity. Preparing for these sessions gave me a chance to reflect on just how useful working with universities is to our industry.

CableLabs works closely with many of the best universities across the United States – from NYU to Georgia Tech to Carnegie Mellon University. With CableLabs headquarters located in Louisville, CO, we have particularly close relationships with regional institutions, including the University of Colorado and Colorado State University (CSU). Below, I talk about why working with higher education is so valuable and what it takes to create a great, productive relationship with a university.

How and Why CableLabs Works with Universities

A great deal of focus at CableLabs is on innovation. Working with universities can help us come up with ideas and solutions that the cable industry may never realize or consider. How? The answers: Diversity and leverage.

- Bringing together people that have different life experiences and perspectives ensures we go beyond the obvious and come up with creative, effective ways to solve hard problems. Universities have hugely diverse faculty and student bodies working on interesting problems. As we expose professors and students to the opportunities and challenges the cable industry is addressing, we inevitably get innovative ideas that radically diverge from the way cable industry professionals think.

- Each of the universities we engage is supported by a wide range of commercial entities and government institutions. This provides multiple opportunities to achieve leverage. We gain access to research funded by multiple organizations and develop the potential to achieve collaboration and synergy on challenges shared across industries.

In the process of doing this, we convey our own perspectives and experiences, exposing great minds to our industry and increasing awareness of real-world problems. The synergy that results helps identify and foster new ideas that would rarely have developed any other way. Additionally, we help to create and maintain a talent pipeline that can provide well-developed professionals for entry and mid-level positions who can fuel broadband innovation for decades to come.

The security technologies team at CableLabs has worked closely with lead professors at CSU to realize these goals. We’ve developed close, continuous relationships with lead professors, who have, in turn, helped us foster great relationships with their researchers and students. By working closely together to understand problems and emerging technologies, CableLabs can very precisely target funds to help CSU develop resources and capabilities of unique value to the cable industry. Close collaboration ensures relevance and maximizes the chance of research success.

And, the story gets even better. Universities work with a wide range of government institutions, other universities, research laboratories and other businesses. Usually, a security idea relevant to broadband might have manifestations applicable to healthcare, manufacturing, transportation or other industry sectors. Consequently, CableLabs achieves great leverage. A little time and money can yield benefits that would cost millions if pursued in isolation.

What Results have we Achieved?

We funded CSU to join the National Science Foundation Industry/University Cooperative Research Center for Configuration Analytics and Automation (NSF I/UCRC CCAA – the government does like acronyms). The lead professor for CSU at CCAA is Dr. Indrakshi Ray. This program provides numerous benefits:

- Gives us access to and influence in three major research universities

- Leverages funding from many industry partners and the NSF. The security research of interest to cable includes IoT, Network Function Virtualization (NFV) and active network defenses (including deception technologies, which make it more expensive for hackers to attack networks)

We’ve contributed to two great projects led by Dr. Christos Papadopoulos, providing funding for infrastructure and ideas for implementation:

- BGPMon: Helps large network operators detect security problems on the Internet. Some CableLabs members are working with CSU now on the project.

- Netbrane: Uses big data analytics and some artificial intelligence strategies to detect and mitigate malware. Dr. Papadopoulos presented Netbrane last week to an audience at the SCTE ISBE Expo in Denver.

We’re also helping CSU create a lab for IoT security research. We’ve donated IoT devices and collaborated on IoT security considerations. Over the summer, we had an intern, Maalvika Bachani, who worked with Brian Scriber on IoT security to support our work with the Open Connectivity Foundation.

We’ve been collaborating with Dr. Indrajit Ray on trust systems. Dr. Ray is working on how we might extend the excellent public key infrastructure-based trust system used in DOCSIS further into the home and to better secure other verticals such as home automation, remote patient monitoring, managed security services and more.

What it Takes

Achieving this level of collaboration requires a focus on a long-term relationship that is about much more than money. It requires institutional support at the university and close collaborative relationships between researchers at both CableLabs and the university. This allows sustained support of projects that transcends individual personalities and provides the basis for co-authoring great papers that can influence our industry. Finally, it provides an opportunity for co-innovation with a technology transfer path that can get new ideas out into the market, all the while capturing the imagination of students and building a talent pipeline that will continue to fuel innovation in the cable industry for decades to come.

You can find out more information about our university outreach in our blog post and video "Furthering CableLabs' Innovation Mission through University Research."

Security

ETSI Security Week: Securing Networks Requires a Global Perspective

Cyber attacks are on the rise and are a threat to critical infrastructure around the globe. CableLabs, along with other service providers and vendors, is collaborating through the European Telecommunications Standards Institute (ETSI) to ensure best practices are consistently deployed in response to these attacks.

Take a look at any cyber attack and consider where the attacks come from and who their victims are. You’ll find that almost all attacks are international in scope, with both attackers and victims found across a transnational field devoid of boundaries. Securing our networks and services requires a global response, and our evolving practices and strategies must have an international perspective. CableLabs does this by participating in multiple international organizations working hard to evolve our cyber security defenses. Last week, ETSI hosted a series of focused workshops on network security at ETSI Security Week. CableLabs helped plan this event, and we contributed our insights in presentations and panels.

This annual event is attended by nearly 300 industry professionals and opens a dialogue to develop a common understanding in the industry of best practices. Workshops covered public policy impacts on security practices, Machine to Machine/Internet of Things security challenges, securing Network Function Virtualization (NFV) architectures and, because no event is complete without it, some discussion of 5G. (For more information on 5G see Tetsuya Nakamura’s blog post here.) I presented our experiences in implementing NFV proofs of concept, and Brian Scriber participated in a panel discussing operator perspectives. Materials shared at the event are available after registration on the ETSI portal here.

As shared here last fall, NFV introduces new security challenges but also presents opportunities to improve the security of future networks relative to legacy infrastructure. A well-implemented NFV infrastructure enables:

- More consistent security processes and controls

- Easier and more rapid security upgrades and patching as threats evolve

- Improved support for pervasive encryption

- More cost-effective security and performance monitoring

With the correct implementation, NFV enhances security operations by enabling pervasive monitoring and more agile and flexible responses as cyber threats evolve.

NFV coupled with Software Defined Networking (SDN) enables the creation of an open and distributed architecture that lets operators create “network factories”. Network factories are fully automated network architectures that are entire supply chains for exciting new services. We need to secure the network infrastructure, as well as secure the software supply chain from code creation to delivery as running code on the platform. This requires a different orientation from today’s operations. Fortunately, NIST has provided a framework for approaching the cyber security aspects of supply chains and it applies well to open and distributed architectures.

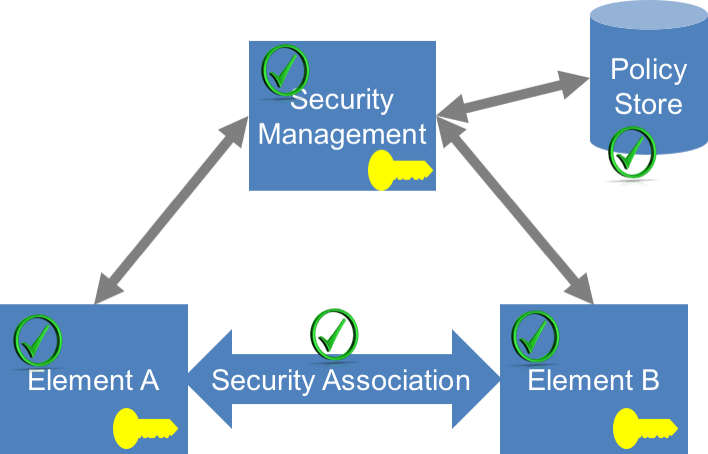

ETSI is a leader in providing foundational standards for NFV and is the single most influential body on NFV security best practices today. The ETSI NFV Architectural Framework sets the stage for what most other standards bodies and open source code projects are attempting to achieve. ETSI’s NFV reference architecture does not currently adequately identify all the supply chain cyber security aspects. Consequently, we haven’t yet defined a comprehensive approach to establishing security associations between all of the components (which may be hardware or software).

Every connection in the network should be considered as a security association. Certain security functions must be implemented for each security association. Each security association should be:

- Based on strong identity: This means there needs to be a persistent private key associated with a unique identifier and attested (signed) by a certificate or equivalent

- Authenticated: Using some form of cryptographic challenge

- Authorized: For both network and process access control and based on a network-wide policy

- Isolated: From other sub-networks and workloads on virtualized servers

- Confidential: Including encryption

- Attested: The infrastructure and communications links are proven to be untampered
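One way to think about this list is as a checklist applied to every connection. The Python sketch below is purely illustrative: the class, field and function names are assumptions made for the example, and in a real deployment each flag would be derived from certificates, policy engines and attestation evidence rather than set by hand.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class SecurityAssociation:
    """One flag per property from the list above (names are hypothetical)."""
    strong_identity: bool
    authenticated: bool
    authorized: bool
    isolated: bool
    confidential: bool
    attested: bool


def missing_properties(sa: SecurityAssociation) -> list[str]:
    """Return the names of the properties this association does not satisfy."""
    return [f.name for f in fields(sa) if not getattr(sa, f.name)]


def is_acceptable(sa: SecurityAssociation) -> bool:
    """An association is acceptable only if every property holds."""
    return not missing_properties(sa)
```

Treating the properties as an all-or-nothing gate reflects the point that each one covers a different failure mode, so a connection that is encrypted but not attested, for example, still falls short.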

Providing a basis for strong identity is proving to be challenging. CableLabs has used PKI-based certificates for strong identity in DOCSIS networks for 17 years, with over 500M certificates issued. Yet achieving consensus to replicate this success amongst the evolving solutions in NFV, IoT, and medical devices is taking time.

Security identity requires three components:

- The first element is a secret, which is usually a private key to support authentication and encryption.

- The second element is a unique identifier within the ecosystem. DOCSIS network security uses the MAC address for this purpose, but that is not applicable to all other domains.

- The third element is attestability. This means creating a signed certificate or profile that binds the identifier to the secret.

The path to success in implementing globally effective cyber security is to document best practices through specification or standardization with supporting code bases which actually implement those practices. CableLabs is proud to be a major contributor to ETSI’s NFV project. We lead both the ETSI NFV Operator Council and the Security Working Group and we are collaborating with other industry leaders to address these gaps. Further, we work closely with open source code groups such as OpenStack, OSM, OpenDaylight, OPNFV, and we watch emerging initiatives such as FD.io and ONAP. Through our SNAPS™ initiative, we are reinforcing standards work with practical experience. If these initiatives mature, we will adapt the practices to cable specific solutions.

--

CableLabs is hosting the next ETSI NFV plenary meeting in Denver, CO from September 11-15, 2017. Participation is open upon signing the ETSI NFV participant agreement. Leave a comment below if you’d like to connect with the CableLabs team. We’d love to meet you there!

Security

How The Dark Web Affects Security Readiness in the Cable Industry

The darknet, dark web, deep web, dark internet – exciting catch-phrases often referred to by analysts and reporters. But what are they? What is the dark web?

The dark web is a network of networks that overlays the Internet. One of the most common dark web networks is The Onion Routing Network, or Tor. Used properly, Tor provides anonymity and privacy to users. Anonymity is achieved when users’ identity is never revealed to others and their traffic cannot be traced back to their actual access accounts and associated Internet addresses. Privacy is achieved when users’ communications cannot be read by anybody other than the intended recipients. Anonymity and privacy are closely related but distinct ideas – privacy can be achieved without anonymity and vice versa.

CableLabs recently hosted a panel about the dark web at its Winter Conference. The panel brought in subject matter experts from across the industry including Andrew Lewman of OWL Cybersecurity. Andrew was previously the Executive Director for Tor from 2009 to 2015. The panel investigated the technology and social impacts of the dark web, and particularly highlighted why cable operators care about this technology area. The dark web is used by adversaries to sell and exchange malware and information used to attack networks, and also account information about employees and customers of companies. Cable operators monitor the dark web to see what is being sold and get indications and warnings of threats against them. This information is used to improve and augment the layers of security used to protect networks and customers.

The evening after the panel, Phil McKinney had the opportunity to talk with Andrew Lewman about the dark web – we are pleased to share that video.

How Does the Dark Web Work?

Tor provides an interesting case study. As stated above, Tor stands for “The Onion Router.” The name was inspired by how the protocol wraps packets of information in layers of security that must be successively peeled to reveal the underlying information. The method is, of course, a bit more convoluted in reality. Routes are defined by a proxy which makes an “onion” using layers of cryptography to encode packets. The packets from the initiator are forward packets. As a forward packet is moved through the network of Onion Routers, layers of the onion are successively removed. These layers can only be removed by routers with the correct private key to read that layer of the onion. For those who are router savvy, what is really happening is that the proxy creates a circuit using tunnels of tunnels until the endpoint is reached. If an intermediary device attempts to decrypt a layer of the onion with an incorrect key, all the other interior layers of the “onion” will be garbled.
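The layering idea can be sketched in Python. This is a toy model only, and it is not Tor’s actual protocol: the cipher is a deliberately simple XOR keystream built from SHA-256 that must never be used for real security, each relay is assumed to hold its own symmetric key, and the relay names, the `EXIT` marker and the `|` separator are all made up for the example.

```python
import hashlib


def keystream(key: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256 over key plus counter. NOT secure; illustration only."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out.extend(hashlib.sha256(key + counter.to_bytes(8, "big")).digest())
        counter += 1
    return bytes(out[:length])


def xor_layer(key: bytes, data: bytes) -> bytes:
    """Apply or remove one layer (XOR with the keystream is its own inverse)."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))


def build_onion(message: bytes, path: list[tuple[bytes, bytes]]) -> bytes:
    """Proxy side: wrap the message from the inside out.

    path is a list of (relay_name, relay_key) pairs in travel order.
    Each layer, once decrypted, reveals only the next hop and the inner onion.
    """
    onion = message
    next_hop = b"EXIT"  # hypothetical marker meaning "deliver the message"
    for name, key in reversed(path):
        onion = xor_layer(key, next_hop + b"|" + onion)
        next_hop = name
    return onion


def peel(key: bytes, onion: bytes) -> tuple[bytes, bytes]:
    """Relay side: remove one layer, returning (next_hop, inner_onion)."""
    plain = xor_layer(key, onion)
    next_hop, _, inner = plain.partition(b"|")
    return next_hop, inner
```

Each relay that peels its layer with the right key learns only where to forward the remainder, not the message or the full route. A relay that tries the wrong key gets unreadable bytes for that layer and everything inside it.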

Tor is, however, just one example technology. What other means do people use to achieve private and anonymous communications? The chat channels provided on popular console games are reportedly used by terrorists and criminals. An alternative technology solution that overlays the Internet is I2P. And there are many others.

Beyond the Dark Web

In addition to being aware of the dark web, CableLabs leads other security initiatives as they relate to device security and protecting the cable network. CableLabs participates in the Open Connectivity Foundation (OCF), which is spearheading network security and interoperability standards for IoT devices. CableLabs has a board position at OCF and chairs the OCF Security Working Group. Ensuring that all IoT devices that join the cable network are secure addresses risks to both the network and the privacy of subscribers.

CableLabs recognizes the important contribution that the cable industry will make to the larger ecosystem of IoT device manufacturers, security providers and system integrators. We are producing a two-day Inform[ED] Conference to bring together cable industry technologists with these stakeholders. April 12 will focus on IoT Security and April 13 will cover Connected Healthcare. Please join us in New York City for this important conversation.

Inform[ED] IoT Security

Event Details

Wednesday, April 12, 2017

8:00am to 6:00pm

InterContinental Times Square New York

300 W 44th St.

New York, NY 10036

Consumer

Insights from the 50th Consumer Electronics Show #CES2017

This year’s CES was another record-breaking event and was well attended by cable industry representatives. The event staff reports over 177,000 people attended to view nearly 2.5 million square feet of exhibit space. Over the next several weeks, analysts and pundits will contemplate the trends and shifts that are ongoing in the industry. In the meantime, here are some thoughts on a few key areas.

Everything is being connected in dozens of ways. Connected everything is going to drive huge bandwidth consumption while also presenting interesting challenges. Wireless connectivity options abound, from traditional WiFi and Bluetooth to a plethora of ecosystem-scale consortia options such as ZigBee, Z-Wave, Thread, and ULE Alliance. Cellular-based connectivity is expanding, with companies using lightweight modems to easily connect new products such as health device hubs and pet monitors to cloud services. With so many options, however, providing a consistent and securable home and business environment will remain challenging: no one hub will seamlessly connect all the devices and services that are out there, and no one security appliance will keep consumer networks safe.

There is a huge focus on health and wellness, with several hundred companies exhibiting in the Health & Wellness and Fitness & Technology Marketplaces. These focus areas were well exhibited by the large manufacturers such as Samsung, Sony, Intel, and Qualcomm as well. In discussions with product managers, however, it’s clear that we might not have learned too many lessons about the need to secure medical and fitness devices and services. Many vendors continue to integrate minimal security, relying on unsecured Bluetooth connectivity to a hub that often does not leverage any form of strong identity for authentication. Fortunately, the Open Connectivity Foundation will continue to provide a path for addressing this shortfall, and membership in the Foundation significantly increased this week. Moreover, several vendors are leveraging IoTivity which will provide clean paths to secure implementations for connected environments.

Smart, highly connected homes were also a major theme, again with hundreds of vendors showing completely integrated solutions, hubs, and thousands of end devices. Connected lightbulbs remained a continuous and omnipresent idea, as did security systems. However, it’s clear there is not any winning market strategy here yet. With dozens of vendors offering complete solutions and even more offering different controllers, it seems the market is fragmented! On the other hand, Brian Markwalter of CTA advises they expect to see 63% CAGR for the smart home market in 2017. It seems this is a great opportunity for service providers to pave the way to some convergence and integration simplification for home owners.

It’s hard to go to CES and not leave very optimistic about the future. There is so much good stuff coming that is going to impact all of us, from better screens to more agile and secure health care devices to safer cars to anything else you can imagine. And there are so many ways to add value to mundane items just by connecting them to a network. Given Metcalfe’s law (“the value of a telecommunications network is proportional to the square of the number of connected users of the system”), the value of the cable network appears to be headed much higher with the growth of so many connected devices. And it’s clear that we’re going to need all the bandwidth to the home that DOCSIS can bring! Our challenge is ensuring easy and flexible use through good strategies and standards for interoperability and security.
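
As a rough illustration of the scaling that Metcalfe’s law implies, the sketch below compares relative network value before and after the number of connected endpoints grows. The function name and the device counts are made up for illustration, and treating each connected device as a “user” is an assumption rather than something the law itself states.

```python
def metcalfe_value_ratio(n_before: int, n_after: int) -> float:
    """Return how many times larger the network's value becomes under
    Metcalfe's law (value proportional to n squared) when the number of
    connected endpoints grows from n_before to n_after."""
    if n_before <= 0 or n_after <= 0:
        raise ValueError("endpoint counts must be positive")
    return (n_after / n_before) ** 2


if __name__ == "__main__":
    # Hypothetical home: 10 connected devices today, 30 a few years on.
    print(metcalfe_value_ratio(10, 30))
```

Under these assumptions, tripling the number of connected devices multiplies the modeled value ninefold, which is the intuition behind the optimism above.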

Security

Improving Infrastructure Security Through NFV and SDN

October was Cybersecurity Awareness Month in the US. We certainly were aware. In September, IoT cameras were hacked and used to create the largest denial of service attacks to date, well over 600 Gbps. On October 21, the same devices were used in a modified attack against Dyn authoritative DNS services, disrupting around 1,200 websites. Consumer impacts were widely felt, as popular services such as Twitter and Reddit became unstable.

Open distributed architectures can be used to improve the security of network operators’ rapidly evolving networks, reducing the impacts of attacks and providing excellent customer experiences. Two key technologies enabling open distributed architectures are Network Function Virtualization (NFV) and Software Defined Networking (SDN). Don Clarke detailed NFV further in his blog post on ETSI NFV activities. Randy Levensalor also reviewed one of CableLabs’ NFV initiatives, SNAPS, earlier this year.

Future networks based on NFV and SDN will enable simpler security processes and controls than we experience today. Networks using these technologies will be easier to upgrade and patch as security threats evolve. Encryption will be supported more easily, and other security mechanisms more consistently, than with legacy technologies. And network monitoring to manage threats will be easier and more cost-effective.

Open distributed architectures provide the opportunity for more consistent implementation of fundamental features, processes, and protocols, including easier implementation of new, more secure protocols. This in turn may enable simpler implementation and deployment of security processes and controls. Legacy network infrastructure features and processes are largely characterized by proprietary systems. Even the implementation of basic access control lists on IP-based interfaces varies widely, not only in the interfaces used to implement the control lists, but in the granularity and specificity of the controls. Some areas have improved, but NFV and SDN can improve them further. For example, BGP Flowspec has helped standardize blocking, rate limiting, and traffic redirection on routers. However, it has strict limits today on the number of rules practically supported on routers. NFV and SDN can provide improved scalability and greater functionality. NFV provides an opportunity to address this complexity by providing common methods to implement security controls. SDN offers a similar opportunity, providing standardized interfaces to implement flow tables on devices and configuration deployment through model-based configuration (e.g., using YANG and NETCONF).
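
To make the idea of a common, vendor-neutral control more concrete, here is a minimal sketch of a flow rule model with first-match semantics. Everything in it (the `FlowRule` class, the `decide` function, the action names, and the documentation-range addresses) is hypothetical and chosen for illustration. It is not the BGP Flowspec encoding, a YANG model, or any vendor’s interface.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List, Optional


@dataclass(frozen=True)
class FlowRule:
    """Hypothetical vendor-neutral rule: match fields plus one action."""
    src: str                  # source prefix in CIDR notation
    dst: str                  # destination prefix in CIDR notation
    dst_port: Optional[int]   # None matches any destination port
    action: str               # e.g. "drop", "rate-limit", "redirect"

    def matches(self, src_ip: str, dst_ip: str, dst_port: int) -> bool:
        """True when the packet's addresses and port fall inside the rule."""
        return (
            ip_address(src_ip) in ip_network(self.src)
            and ip_address(dst_ip) in ip_network(self.dst)
            and (self.dst_port is None or self.dst_port == dst_port)
        )


def decide(rules: List[FlowRule], src_ip: str, dst_ip: str,
           dst_port: int, default: str = "allow") -> str:
    """Return the action of the first matching rule, else the default."""
    for rule in rules:
        if rule.matches(src_ip, dst_ip, dst_port):
            return rule.action
    return default


# Illustrative rule set using documentation address ranges.
EXAMPLE_RULES = [
    FlowRule("203.0.113.0/24", "0.0.0.0/0", 53, "rate-limit"),
    FlowRule("198.51.100.7/32", "0.0.0.0/0", None, "drop"),
]

if __name__ == "__main__":
    print(decide(EXAMPLE_RULES, "203.0.113.9", "192.0.2.1", 53))
    print(decide(EXAMPLE_RULES, "198.51.100.7", "192.0.2.1", 443))
    print(decide(EXAMPLE_RULES, "192.0.2.50", "192.0.2.1", 80))
```

The point of the sketch is that one rule definition can express blocking, rate limiting, and redirection in the same shape, regardless of which device eventually enforces it.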

Standardized features, processes, and protocols naturally lead to simpler and more rapid deployment of security tools and easier patching of applications. NFV enables the application of Development and Operations (DevOps) best practices to develop, deploy, and test software patches and updates. Physical and virtual routers and network appliances can similarly be updated programmatically using SDN. Such agile and automated reconfiguration of the network will likely make it easier to address security threats. Moreover, security monitors and sensors, firewalls, virtual private network instances, and more can be readily deployed or updated as security threats evolve.

Customer confidentiality can be further enhanced. In the past, encryption was not widely deployed for a wide range of very good economic and technical reasons. The industry has learned a great deal in deploying secure and encrypted infrastructure for DOCSIS® networks and also radio access networks (RANs). New hardware and software capabilities already used widely in data center and cloud solutions can be applied to NFV to enable pervasive encryption within core networks. Consequently, deployment of network infrastructure encryption may now be much more practical. This may dramatically increase the difficulty of conducting unauthorized monitoring, man-in-the-middle attacks and route hijacks.

A key challenge for network operators continues to be detection of malicious attacks against subscribers. Service providers use a variety of non-intrusive monitoring techniques to identify systems that have been infected by malware and are active participants in botnets. They also need to quickly identify large-scale denial of service attacks and try to limit the impacts those attacks have on customers. Unfortunately, such detection has been expensive. NFV promises to distribute monitoring functions more economically and more widely, enabling much more agile responses to threats to customers. In addition, NFV can harness specific virtualization techniques recommended by NIST (such as hypervisor introspection) to ensure active monitoring of applications. Moreover, SDN provides the potential to quickly limit or block malicious traffic flows much closer to the source of attacks.
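
As a simplified sketch of the kind of monitoring function that could be distributed this way, the code below flags destinations whose aggregate inbound rate exceeds a threshold and lists the contributing sources, which are the candidates for blocking closer to the origin of the traffic. The record format, function name, and threshold are assumptions made for illustration. Real detection systems use far richer signals than a single volume threshold.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

# Hypothetical flow record: (source IP, destination IP, bytes in the window).
FlowRecord = Tuple[str, str, int]


def find_flood_targets(records: Iterable[FlowRecord],
                       window_seconds: float,
                       threshold_bps: float) -> Dict[str, Set[str]]:
    """Return {destination: sources} for every destination whose aggregate
    inbound rate over the window exceeds threshold_bps (bits per second)."""
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")

    bytes_to_dst: Dict[str, int] = defaultdict(int)
    sources_of_dst: Dict[str, Set[str]] = defaultdict(set)
    for src, dst, nbytes in records:
        bytes_to_dst[dst] += nbytes
        sources_of_dst[dst].add(src)

    flagged: Dict[str, Set[str]] = {}
    for dst, total_bytes in bytes_to_dst.items():
        rate_bps = total_bytes * 8 / window_seconds
        if rate_bps > threshold_bps:
            flagged[dst] = sources_of_dst[dst]
    return flagged


# Illustrative records using documentation address ranges.
SAMPLE_RECORDS = [
    ("203.0.113.1", "192.0.2.10", 5_000_000),
    ("203.0.113.2", "192.0.2.10", 6_000_000),
    ("198.51.100.5", "192.0.2.20", 1_000),
]

if __name__ == "__main__":
    print(find_flood_targets(SAMPLE_RECORDS, window_seconds=10,
                             threshold_bps=8_000_000))
```

In this toy data set, only the first destination crosses the threshold, and the two sources sending to it are returned so that an SDN controller (not modeled here) could limit or block those flows.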

Finally, NFV promises to allow us the opportunity to leap ahead on security practices in networks. Most of the core network technologies in place today (routing, switching, DNS, etc.) were developed over 20 years ago. The industry providing broadband services knows so much more today than when the initial broadband and enterprise networks were first deployed. NFV and SDN technologies provide an opportunity to largely clean the slate and remove intrinsic vulnerabilities. The Internet was originally conceived as an open environment – access to the Internet was minimally controlled and authentication never integrated at the protocol level. This has proven to be naïve, and open distributed architecture solutions enabled by NFV and SDN can help to provide a better, more securable infrastructure. Of course, there will continue to be vulnerabilities – and new ones will be discovered that are unique to NFV and SDN solutions.

As Cybersecurity Awareness Month closes and we start a new year focused on improving consumer experiences, CableLabs is pursuing several projects to leverage these technologies to improve the security of broadband services. We are working to define and enable key imperatives required to secure virtualized environments. We are using our expertise to influence key standards initiatives. For example, we participate in the ETSI NFV Industry Specification Group (ETSI NFV), which is the most influential NFV standards organization. In fact, CableLabs chairs the ETSI NFV Security Working Group, which has substantially advanced the security of distributed architectures over the past four years. Finally, we continue to innovate new open and distributed network solutions to create home networks that can adaptively support secure services, new methods of authentication and attestation in virtual infrastructures, and universal provisioning interfaces.