Technology Vision

Broadband Operators — Your Network Roadmap Starts at CableLabs Tech Summit 2026

Key Points

- The Tech Summit 2026 agenda focuses on aligning strategy and execution around the Technology Vision for the broadband industry, highlighting critical priorities for cable operators.

- This intimate networking and knowledge-sharing event is designed exclusively for CableLabs member operators and the exhibiting vendor community.

- Registration is open for the conference, taking place April 27–29, 2026, in Westminster, Colorado.

The future of broadband will be defined by the decisions we make now on access network evolution, customer experience, automation, security and spectrum.

At CableLabs Tech Summit 2026, industry leaders will come together to align on those decisions. Built around the Technology Vision, Tech Summit is where strategy meets execution, bringing member operators and vendor partners together for practical discussions that move innovation from roadmap to reality.

Why Attend CableLabs Tech Summit?

Tech Summit builds on the successful foundation of its predecessor, Winter Conference. It offers private, practical sessions designed to accelerate collaboration and scale what works. Attendees will:

- Explore the Technology Vision for the industry and the technologies that define it.

- Connect with fellow experts in strategic, solutions-oriented conversations.

- Leave with clear next steps that translate directly into operational and engineering roadmaps to future-proof your networks.

This year’s program is organized around four themes that directly impact customer experience and operational success:

- Modernizing the in-home experience

- Securing next-generation networks

- Building tomorrow’s access networks

- Creating intelligently automated networks

Across these themes, sessions will highlight the critical questions shaping our industry and address their impact on user experience:

- How do we ensure the connected home experience keeps pace with evolving devices and use cases?

- How do we build networks that are secure, resilient and prepared for ever-present, long-term threats?

- What capabilities must access networks support, not just today, but for the next generation of people, businesses and use cases?

- How can intelligent automation improve both operational efficiency and customer satisfaction?

Here’s a closer look at the insights attendees will gain in two full days of expert-led discussions.

Modernizing the In-Home Experience

The home is now a high-performance digital environment, and user expectations continue to rise. Explore practical approaches for delivering faster, more reliable and more adaptive connectivity experiences while simplifying operations behind the scenes. Sessions include:

- Differentiating on Reliability: How to Measure, Maximize and Message It — Explore how operators can define metrics that matter, implement best practices to maximize performance and communicate reliability in ways consumers understand.

Securing Next-Generation Networks

As networks power everything from remote work to critical infrastructure, resilience is non-negotiable and security must be foundational. Sessions in this track will explore threat mitigation, secure architectures and strategies for embedding resilience into next-generation network design. Gain clear direction on building secure-by-design networks that safeguard customers and operators alike, prepared for both today’s realities and tomorrow’s risks. Sessions include:

- Cryptographic Resilience: The Next Chapter for DOCSIS® Network Security — Understand how evolving cryptographic strategies strengthen protection across DOCSIS networks and prepare infrastructure for emerging and long-term threats.

Building Tomorrow’s Access Networks

The future of broadband depends on scalable, high-capacity access infrastructure that can support innovation for decades to come. Sessions across this theme address plant upgrades, performance optimization and the engineering considerations needed to support future growth. Benefit from clear guidance on evolving access networks to meet rising demand while creating a platform that stands ready for what’s next. Sessions include:

- HFC Evolution: From DOCSIS® 4.0 Technology to 3 GHz and Beyond — Dive into the future of Hybrid Fiber Coax (HFC), including spectrum expansion and architectural advancements that enable greater capacity, flexibility and scalability.

Intelligently Automated Networks

Automation is no longer optional. Smarter networks drive efficiency, reliability and improved customer outcomes. Through AI, analytics and automation frameworks, this track explores how to improve operational efficiency, enable predictive maintenance, accelerate issue resolution, and enhance customer experiences through proactive network management. Take away proven strategies for deploying intelligent systems that benefit both operators and end users. Sessions include:

- AI Networking: Transforming Operations and Customer Experiences — Explore how AI-driven insights can streamline operations, reduce troubleshooting time and enable proactive, personalized customer support.

Policy and Industry Alignment

Technology does not evolve in a vacuum; strategy must account for regulatory realities. Join us for:

- Navigating Washington: Policy Forces Shaping the Broadband Industry — In this general session, understand the regulatory and policy dynamics influencing broadband investment, spectrum allocation and deployment strategies, and how to position your organization for success amid shifting federal priorities.

Additional sessions explore mobile optionality over HFC, scaling fiber to the premises (FTTP) efficiently, intelligence at the edge, reliability as a competitive differentiator and experience-driven network investment.

Beyond individual sessions, Tech Summit creates space for candid, private discussions that move ideas into action. Designed for collaboration and peer exchange, sessions are practical and execution-focused, helping the industry scale what works.

Keynote Speaker: WSJ’s Joanna Stern

Joanna Stern, an Emmy Award-winning journalist and one of the most respected voices in personal technology today, will join the speaker lineup to deliver the keynote.

As a senior personal technology columnist for The Wall Street Journal, Joanna blends deep reporting with creativity, producing columns and videos that make complex topics accessible and entertaining. Her upcoming book, “I AM NOT A ROBOT,” explores the impact of AI on daily life by chronicling a year in which she let artificial intelligence infiltrate nearly every aspect of her world. Throughout her career, Joanna has earned numerous honors, including Gerald Loeb Awards for her innovative journalism.

Register Today to Join the Conversation

The conversations shaping tomorrow’s broadband networks start at CableLabs Tech Summit. View the agenda and speaker lineup, and make plans to join us for this private, practical forum designed to move ideas into action.

We look forward to seeing you in April!

DOCSIS

DOCSIS Technology: What’s Changed in the Past Year and Why It Matters

Key Points

- DOCSIS 3.1 technologies (including DOCSIS 3.1+ cable modems) have now become the workhorse of the HFC network, enabling multigigabit tiers at scale.

- DOCSIS 4.0 interoperability events and vendor demonstrations have proven 14 Gbps to 16 Gbps aggregate downstream capacity and multivendor interoperability, validating the 10G roadmap on HFC networks.

- New work on a 3 GHz optional annex for DOCSIS 4.0 technology — and research toward 6 GHz — is turning last year’s long-term ideas into concrete steps for the next generation of DOCSIS technology.

DOCSIS® technology continues to evolve at a rapid pace. Cable operators have continued deploying DOCSIS technology and providing high-speed internet services to hundreds of millions of customers around the world. The year 2025 was exciting for cable networks, both in terms of deployments of new technology and continued innovation in the development of technology for the next quarter of a century.

A year ago, our blog post “The Evolution of DOCSIS Technology: Building the Future of Connectivity” walked through how DOCSIS technology evolution fits in the broader connectivity roadmap, and how CableLabs and the cable industry as a whole are already thinking about and working on what comes next. In 2026, the conversation has shifted from what’s theoretically possible to what’s actually happening in the field.

DOCSIS 3.1 Technology: A Decade In and Still Climbing

It’s been a decade since DOCSIS 3.1 technology debuted on the world stage, and cable operators continue to deploy it in all major markets. During that time, operators have upgraded the outside plant to support mid-split and high-split (increased upstream spectrum) technologies across their footprints. In parallel, networks have transitioned to Distributed Access Architecture (DAA), replacing older analog nodes with new remote PHY devices (RPDs). DOCSIS 3.1 remains a strongly competitive technology.

In 2025, DOCSIS 3.1+ cable modems with additional downstream capacity (four or more OFDM channels) were certified, and operators are now selecting and deploying them to achieve even higher downstream speeds on the network.

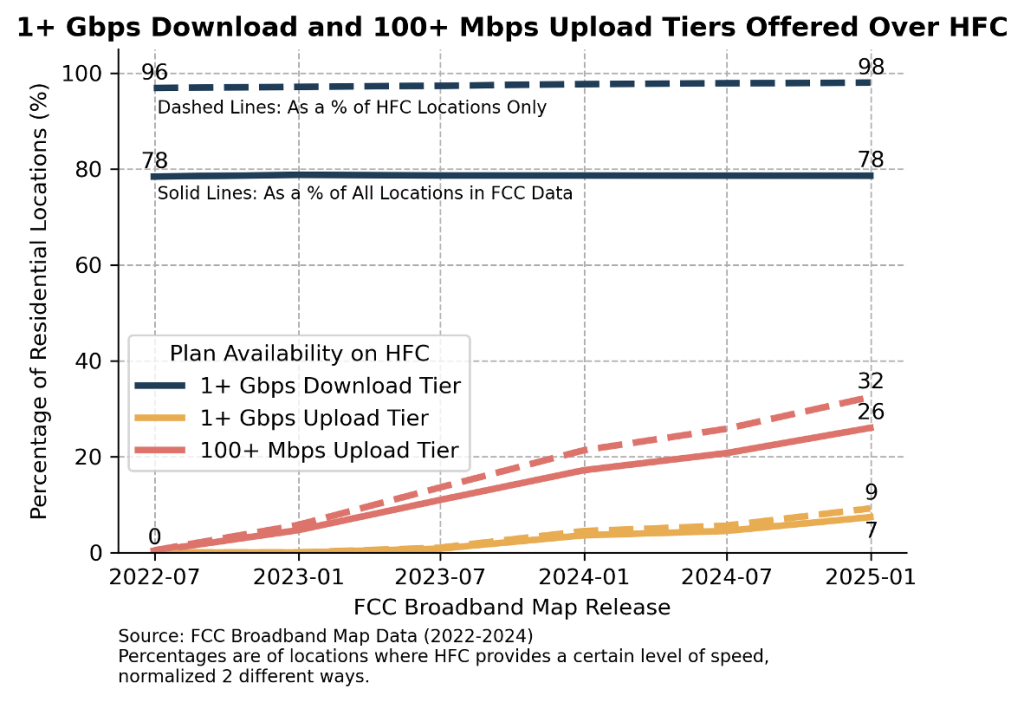

CableLabs’ analysis of FCC National Broadband Map data for the United States (June 2022 through December 2024) shows a strong presence of DOCSIS 3.1 deployments and an accelerating transition to mid-split and high-split HFC plants. As of December 2024, 98% of all residential locations served by cable operators had access to 1+ Gbps downstream tiers over HFC. Over the same period, the share of locations with access to 100+ Mbps upstream tiers over HFC increased from about 1% to 32%, while the share with access to 1+ Gbps upstream increased to 9%.

The FCC data analysis and the accompanying graph are credited to Jacob Malone, principal strategist and senior director, Network Demand Strategy, CableLabs.

Although multi-gigabit speeds are important, user experience increasingly comes down to latency, consistency and responsiveness — especially for cloud gaming, collaboration tools and immersive applications. That’s where Low Latency DOCSIS (LLD), introduced in DOCSIS 3.1 (and carried forward into DOCSIS 4.0), plays a key role. Operators such as Comcast have made strides in deploying this technology to enable a low-lag connectivity experience for their customers.

At the same time, improving network reliability has become a major focus of cable operators worldwide. Using data from the network, tools such as Profile Management Applications (PMA) and Proactive Network Maintenance (PNM) have become a vital part of network operations by maintaining connectivity, identifying issues and ultimately reducing downtime, as networks push toward higher utilization and complex channel configurations.
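As a rough illustration of how a profile management tool might turn network measurements into a channel configuration, the sketch below picks a modulation order per subcarrier from measured MER values. It is a simplified assumption of the general idea, not CableLabs' PMA implementation: the threshold table, the safety margin, the fallback order and the function names are all hypothetical placeholders and are not taken from the DOCSIS specifications.

```python
# Illustrative thresholds only: the minimum MER (dB) assumed for each QAM order.
# These numbers are placeholders, not values from the DOCSIS specifications.
MER_THRESHOLDS_DB = [
    (41.0, 4096),
    (37.0, 2048),
    (34.0, 1024),
    (30.0, 512),
    (27.0, 256),
]
FALLBACK_QAM = 64  # hypothetical floor used when no threshold is met


def pick_modulation(mer_db: float) -> int:
    """Return the highest QAM order whose assumed threshold the MER meets."""
    for threshold_db, qam_order in MER_THRESHOLDS_DB:
        if mer_db >= threshold_db:
            return qam_order
    return FALLBACK_QAM


def build_profile(per_subcarrier_mer_db, margin_db: float = 1.0):
    """Build a per-subcarrier modulation list, backing off by a safety margin."""
    return [pick_modulation(mer - margin_db) for mer in per_subcarrier_mer_db]


# Made-up MER readings for five subcarriers.
measurements = [43.2, 41.5, 36.0, 28.4, 25.0]
print(build_profile(measurements))  # [4096, 2048, 1024, 256, 64]
```

The margin trades a little throughput for robustness: a larger `margin_db` moves subcarriers to lower modulation orders sooner as signal quality degrades.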

DOCSIS 4.0 Technology: From Interop Milestones to Deployment Readiness

Now let’s talk about DOCSIS 4.0, starting with interoperability events and multi-vendor readiness. Over the past year, interoperability events have moved from early experimentation to demonstrating performance and interoperability at scale.

At the June 2025 DOCSIS 4.0 Interop, multiple CCAP cores, RPDs and cable modems from various suppliers, operating together in a distributed access architecture, achieved downstream DOCSIS throughput reaching 14 Gbps across a multi-vendor network. A few months later, at the August DOCSIS 4.0 and DAA Technology Interop event, vendors showcased record-setting setups built on that foundation, demonstrating 16.25 Gbps downstream throughput across two load-balanced DOCSIS 4.0 modems. Demonstrations at SCTE TechExpo25 showed 2x2 DOCSIS 4.0 RPDs enabling unprecedented capacity.

Beyond the speed records, these results confirm that DOCSIS 4.0 can comfortably achieve 10G-class downstream performance over the HFC network; that multi-vendor interoperability of CCAP cores, RPDs and cable modems is real; and that virtualized, cloud-native CMTS platforms are a key part of the DOCSIS 4.0 story. Upstream speeds of up to 3 Gbps and 5.5 Gbps were also demonstrated in different configurations. Taken together, these accomplishments validate the 10G roadmap that the cable industry has laid out and give operators confidence that DOCSIS 4.0 can compete head-to-head with fiber in both performance and operational flexibility.

Operators are in various stages of assessing and testing outside plant components and equipment to support the upgrade to DOCSIS 4.0-based services. Over the last year, most operators have been evaluating equipment in their own labs. This includes outside plant components, such as taps, passives and amplifiers, along with the RPDs, CCAP cores and cable modems needed for DOCSIS 4.0 deployments. Some operators are beginning to deploy these components into the network: outside plant components first (e.g., smart amplifiers), then DOCSIS 4.0 RPDs and CCAP cores, and ultimately cable modems in customer homes, along with any necessary passive component upgrades. Deployments enabling the full duplex mode of operation have already seen great success, and the stage is set for successful deployments of the frequency division duplex mode of operation as well.

This year, it’s going to be exciting to see these DOCSIS 4.0 deployments begin to scale around the world.

But wait, there’s more!

In 2026 and Beyond: Extending DOCSIS 4.0 to 3 GHz — and Looking to 6 GHz

CableLabs CEO Phil McKinney announced at SCTE TechExpo25 that CableLabs will begin industry work focused on enabling the HFC network to support DOCSIS data transmission up to 3 GHz. This extension can enable up to 25 Gbps of aggregate capacity — a leap that positions HFC networks not just to meet demand, but to stay well ahead of it.

The 3 GHz specification work has since begun with wide industry participation from both cable operators and the vendor community. Participants include technologists from operators’ engineering organizations, CM vendors, silicon vendors, RPD manufacturers and CCAP core suppliers, along with outside plant component suppliers such as amplifier vendors and manufacturers of taps and passives. The work includes analyzing plant models and the characteristics of the network at higher frequencies, and understanding whether outside plant components can be built to support high-fidelity operation at those frequencies. As agreement is reached on these analyses, a new optional annex will be developed and added to the DOCSIS 4.0 specifications. Extending the spectrum will allow DOCSIS signals to use the 1.8 GHz to 3 GHz frequency range, opening up spectrum for additional downstream OFDM channels. The goal is to make these 3 GHz requirements part of current DOCSIS 4.0 technology as an optional annex, giving operators and vendors the choice to test, develop and deploy interoperable solutions with these enhanced capabilities in markets that need them.
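To get a feel for how much room the 1.8 GHz to 3 GHz range opens up, the short sketch below counts how many full-width downstream OFDM channels would fit in it. It assumes the extended band would reuse the 192 MHz maximum downstream OFDM channel width from the current DOCSIS specifications and ignores guard bands; the annex is still in development and may define channelization differently, so treat the result as a back-of-the-envelope estimate. The function name is hypothetical.

```python
import math

# Assumption: the annex reuses the 192 MHz maximum downstream OFDM channel
# width from current DOCSIS specifications. The annex may decide otherwise.
OFDM_CHANNEL_WIDTH_MHZ = 192


def max_ofdm_channels(band_start_mhz: float, band_end_mhz: float,
                      channel_width_mhz: float = OFDM_CHANNEL_WIDTH_MHZ) -> int:
    """Count the full-width channels that fit in a band, ignoring guard bands."""
    if band_end_mhz <= band_start_mhz:
        raise ValueError("band_end_mhz must be greater than band_start_mhz")
    return math.floor((band_end_mhz - band_start_mhz) / channel_width_mhz)


new_spectrum_mhz = 3000 - 1800
print(new_spectrum_mhz)                # 1200
print(max_ofdm_channels(1800, 3000))   # 6
```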

As McKinney also discussed at TechExpo, CableLabs and thought leaders in the industry are analyzing and evaluating the potential of HFC networks all the way to 6 GHz. This expansion could ultimately provide up to 50 Gbps of aggregate capacity and open the door to exciting new use cases that leverage the HFC networks we have today. This research is happening in the background, with various analyses on plant characteristics, tap design, amplifier design, and analysis of HFC network upgrade paths. Once the fundamental building blocks for transmission up to 6 GHz are validated, the technology development and specification work for another leap in DOCSIS capability can begin.
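One way to read the two capacity figures quoted above side by side is to divide each by the upper edge of its spectrum. The sketch below does only that arithmetic. It treats the quoted "up to" figures as aggregate capacity spread over the whole band, which is a simplifying assumption rather than how the estimates were necessarily derived; the function name is hypothetical.

```python
def implied_bits_per_hz(aggregate_gbps: float, upper_edge_ghz: float) -> float:
    """Average efficiency implied by a capacity figure; Gbps / GHz = bit/s/Hz."""
    return aggregate_gbps / upper_edge_ghz


# Figures quoted in the text: up to 25 Gbps at 3 GHz, up to 50 Gbps at 6 GHz.
for label, gbps, ghz in [("3 GHz annex", 25, 3), ("6 GHz research", 50, 6)]:
    print(f"{label}: {implied_bits_per_hz(gbps, ghz):.2f} bit/s/Hz")
# 3 GHz annex: 8.33 bit/s/Hz
# 6 GHz research: 8.33 bit/s/Hz
```

Under this reading, the 6 GHz estimate implies about the same average efficiency as the 3 GHz one, so the doubling in capacity comes from the doubling in spectrum.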

Connecting Past, Present and Future

Taken together, these developments extend the cable technology arc further:

- The past, DOCSIS 3.1 technology, has established a solid, backward-compatible foundation that brought gigabit speeds to market and prepared the HFC plant for higher splits and DAA.

- The present, defined by DOCSIS 4.0 technology, is where operators are beginning to deploy multigigabit services, validating 10G-class performance and building multivendor, virtualized networks.

- The future is now anchored in concrete work on a 3 GHz extension to DOCSIS 4.0 solutions and early planning for 6 GHz operation, ensuring that DOCSIS technology has a roadmap well beyond today’s generation.

It’s an exciting time to be working on HFC and DOCSIS technologies. The past year has shown that the industry is not only keeping pace with demand but actively shaping what the next decades of cable broadband will look like — from the networks already in the field to the specifications and research that will power the internet of the future.

Technology Vision

Milestones and Momentum: Advancing the CableLabs Technology Vision in 2025

Key Points

- Last year, CableLabs made significant, measurable progress toward the Technology Vision for the broadband industry, moving priorities from roadmap to reality.

- Progress advanced access, security, AI and interoperability — strengthening network capability and deployment readiness.

Broadband is entering a new phase defined by how networks perform at scale, support seamless experiences, and adapt as both demand and threats evolve. Over the past year, CableLabs has focused on technologies highlighted in its Technology Vision, a roadmap to prepare the industry for this next era of broadband innovation.

In 2025, CableLabs made progress across access technologies, security and emerging network capabilities, advancing network performance with a focus on scalable deployment and enhancing experiences.

Advancing Scalable Network Performance

Evolving DOCSIS® technology remained a core foundation for hybrid fiber coax (HFC) networks, supporting continued evolution without disrupting existing infrastructure. DOCSIS 4.0 interoperability milestones throughout the year demonstrated increasing ecosystem maturity, including multi-vendor configurations achieving record-setting 16 Gbps downstream and validating readiness for commercial deployment.

CableLabs also certified the first DOCSIS 3.1+ device with four orthogonal frequency-division multiplexing (OFDM) channels, which delivers meaningful capacity gains without requiring plant upgrades. The certification demonstrated how DOCSIS technology continues to extend network capacity through incremental, deployable advances.

In addition, the introduction of the DOCSIS 4.0 Optional Annex pushes HFC spectrum up to 3 GHz (~25 Gbps), demonstrating how far existing infrastructure can scale while highlighting deployment considerations related to power requirements, amplifier density and cost.

From Speed to Experience

2025 also marked a clear pivot toward experience-driven innovation. As connectivity becomes ubiquitous, what differentiates networks is no longer throughput alone, but how seamlessly they adapt to users, devices and environments.

Context-aware networking initiatives advanced this shift by rethinking how devices are recognized across operator footprints, reducing friction, eliminating manual onboarding and enabling connectivity that simply follows the user. Similarly, CableLabs’ work on Seamless Connectivity Services and Mobile Optionality brought us closer to networks that dynamically adapt across access types, supporting reliability rather than forcing tradeoffs.

Wi-Fi innovation reflected the same priorities. From tackling “sticky Wi-Fi” challenges to advancing Low Latency, Low Loss, Scalable throughput (L4S), the focus stayed squarely on delivering consistent, interactive experiences.

Building Intelligence and Trust Into the Network

CableLabs successfully field-tested agentic AI technology, demonstrating how autonomous, multi-agent systems can operate as a virtual team of experts. These systems showed potential to reduce service disruptions, accelerate troubleshooting and enable proactive maintenance, a critical step toward adaptive networking that acts before users feel an issue.

Regarding network security, CableLabs continued to collaborate with a number of cybersecurity organizations on best practices. CableLabs also continued to prepare the industry for emerging cryptographic threats, including post-quantum threats, while strengthening protections across home, enterprise and core network environments.

Expanding the Ecosystem, Together

Interoperability played a critical role across access, optical, wireless and security advancements in 2025. CableLabs continued to make advancements in XGS-PON and Coherent PON, including developing the industry’s first single-wavelength 100 Gbps PON specifications.

CableLabs also evaluated emerging technologies like distributed fiber optic sensing and satellite-terrestrial integration, with a focus on real-world applicability.

O-RAN PlugFests and Open AFC collaboration reinforced the same principle across wireless domains: innovation accelerates when it is shared, tested and validated across stakeholders.

Turning the Vision Into What’s Next

The progress made in 2025 demonstrates how the CableLabs Technology Vision is taking shape across the industry. That momentum now carries into the next phase of collaboration, where ideas move faster from lab to deployment and where interoperability, intelligence and security are built directly into network design and operations.

To see where this vision is headed next — and how the industry can help shape it — join us at CableLabs Tech Summit 2026. Tech Summit — April 27–29 in Westminster, Colorado — will bring together CableLabs member operators and exhibiting vendors for two days of strategic collaboration, practical insights and technology.

Network as a Service

The Edge Compute Advantage: Turning Broadband Infrastructure Into Intelligence

Key Points

- The broadband industry’s existing infrastructure makes operators uniquely positioned to capitalize on edge computing.

- CableLabs is advancing edge deployment through standardization efforts including CAMARA Edge Cloud APIs, lab demonstrations and our Network as a Service working group.

- We invite interested members of our operator and vendor community to engage in this work by joining the Network as a Service Working Group, which meets next on January 21.

As AI workloads become more distributed and latency-sensitive, the network edge is emerging as a strategic differentiator for broadband operators. With infrastructure that already spans from customer premises equipment to headends and regional data centers, operators are uniquely positioned to host edge compute, including advanced edge AI, in ways that reduce cost, improve performance and unlock new revenue models.

At CableLabs, we've been exploring this evolution through hands-on research, standards engagement and lab-based proofs of concept. Our goal is to help the industry shape what comes next.

Why Edge Compute and Edge Hosting Matter Now

Our recent work demonstrates that many high-value AI workloads perform best when executed closer to where data is generated. Predictive network maintenance, for example, benefits from localized inference to detect RF impairments before customers experience issues. Edge-based video analytics enable real-time visual intelligence while keeping sensitive footage local for privacy and compliance.

These use cases are detailed in our Edge LLM Hosting paper and reinforce a central insight: the cable footprint is already an edge cloud, ready to be activated for modern applications.

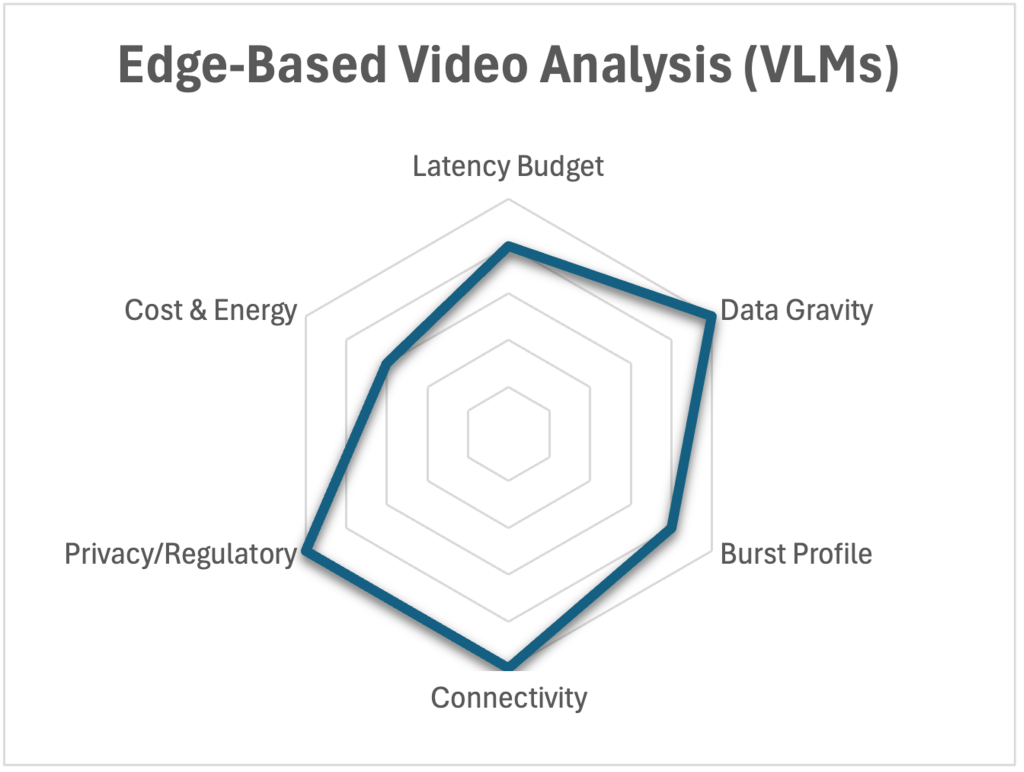

Not every AI workload belongs at the edge. We're developing selection criteria to help operators evaluate where to place different workloads. The accompanying radar chart shows how edge-based video analysis scores across these dimensions.
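A placement evaluation of this kind could be sketched as a simple weighted score. The example below is only an illustration of the approach: the dimension names, the 0 to 5 scale, the weights and the sample scores are hypothetical and are not the criteria or values from the CableLabs chart.

```python
from dataclasses import asdict, dataclass
from typing import Dict, Optional

MAX_SCORE = 5  # hypothetical 0-5 scale per dimension


@dataclass(frozen=True)
class WorkloadScores:
    """Hypothetical scoring dimensions; higher means a better fit for the edge."""
    latency_sensitivity: int
    data_privacy: int
    backhaul_savings: int
    hardware_fit: int


def edge_fit(scores: WorkloadScores,
             weights: Optional[Dict[str, float]] = None) -> float:
    """Return a weighted fit between 0.0 (keep central) and 1.0 (strong edge fit)."""
    values = asdict(scores)
    weights = weights or {name: 1.0 for name in values}
    total_weight = sum(weights[name] for name in values)
    weighted_sum = sum(values[name] * weights[name] for name in values)
    return weighted_sum / (MAX_SCORE * total_weight)


# Made-up scores for an edge video analytics workload.
video_analytics = WorkloadScores(latency_sensitivity=5, data_privacy=5,
                                 backhaul_savings=4, hardware_fit=3)
print(round(edge_fit(video_analytics), 2))  # 0.85
```

Passing a `weights` dictionary lets an operator emphasize whichever dimension matters most for a given service, for example privacy for video footage.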

If you missed our SCTE TechExpo session on operationalizing edge-hosted AI, the recording is available on-demand.

CableLabs’ work in edge compute represents a critical component of the Technology Vision for the industry, specifically within the Platform Evolution vector. By building adaptable platforms through cloud-based virtualization, edge computing and converged provisioning systems, broadband operators gain the flexibility to deliver and enable new network services and applications that meet evolving customer needs.

Advancing Standardization and Real-World Deployments

To accelerate industry adoption, CableLabs continues contributing to the CAMARA Edge Cloud API work, providing a unified way for developers to discover operator edge capabilities and deploy edge applications and inference workloads to them. As operators evaluate how to expose compute resources, the Compute Services APIs offer a relevant reference point for shaping future offerings.

We've paired these standardization efforts with real implementations in CableLabs' 10G Lab, including demonstrations of portable AI workloads and CAMARA-aligned Edge Cloud APIs. Our members continue advancing production-readiness by virtualizing major network functions such as the CCAP/CMTS and CDN infrastructure. These are foundational steps toward flexible, cost-efficient compute services delivered directly from the operator network.

Partnering Through NaaS on Edge Compute

Our Network as a Service (NaaS) program brings these technical threads together. As operators consider how to productize edge compute, standardize APIs and scale AI workloads, CableLabs is expanding discussions within the NaaS Working Group. Another Technology Vision priority, NaaS helps advance the Differentiated Services vector, focusing on context-aware services that enable seamless user experiences and introduce new revenue opportunities for operators.

We're seeking member and vendor input on priorities ranging from monetizing low-latency compute to enabling partner ecosystems to enhancing operational efficiency through edge-deployed AI. We're also working on solutions for operators planning infrastructure refreshes and want to ensure their upgrades support emerging edge AI workloads.

Join the Conversation

Join us for the next NaaS monthly update on Wednesday, January 21. We'll share the latest progress, showcase demonstrations including our CAMARA Edge Cloud API work, and engage directly with members and vendors who are shaping the roadmap.

If you’re interested in this work, stay up to date by joining the working group, which is open to CableLabs member and vendor communities. Meeting details are available when you join the group.

Want to dive deeper into edge computing? Make plans to join us at CableLabs Tech Summit 2026 — April 27–29 in Westminster, Colorado. Tech Summit is an exclusive networking and knowledge-sharing event for our member operators and exhibiting vendor community, building upon the successful format of its predecessor, CableLabs Winter Conference.

In the session “Revolutionizing Customer Experience with Intelligence at the Edge,” we’ll explore what it really takes to deploy an edge application using open-source infrastructure. Register soon to join the conversation. If you complete your registration by January 31, you’ll receive an exclusive CableLabs YETI tumbler at conference check-in.

Have questions or want to learn more about edge computing? Contact me to discuss how this future-forward approach can fit into your network strategy.

Wired

Fiber: Laying the Groundwork for the Experience and Adaptive Eras

Key Points

- Fiber infrastructure provides the high-capacity, low-latency foundation needed to support seamless connectivity as user expectations evolve beyond raw speed.

- CableLabs’ work in fiber technologies — from FTTP to DPoE specifications — advances deployment efficiency and interoperability across the broadband ecosystem.

For years, the broadband industry’s innovative energy focused on speed: faster downloads, higher throughput, more gigabits per second. But as online activity has been woven more deeply into the fabric of daily life, user expectations have shifted. Today’s success metrics place less emphasis on speed and more on quality, reliability and seamless experiences that adapt to user needs.

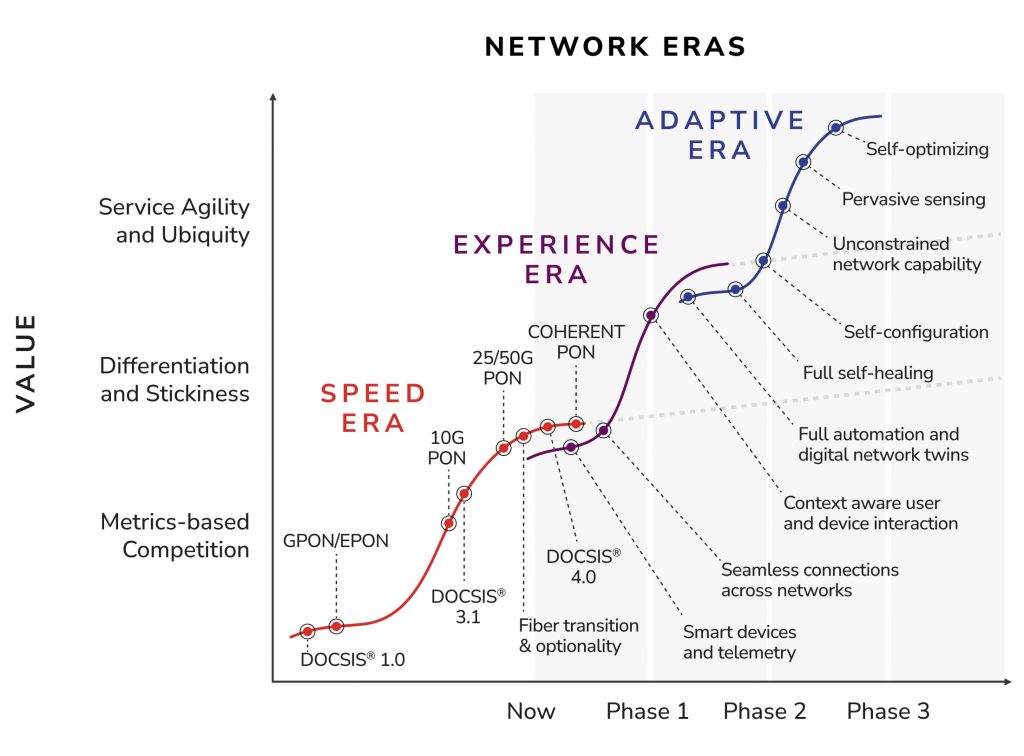

CableLabs has been working on fiber optics throughout this evolution because we view fiber as a valuable, practical technology for broadband networks today — and one whose role and value continue to grow. The industry’s progression from the Speed Era to the Experience Era — and now toward the Adaptive Era — reinforces why fiber optics has been, and remains, foundational to the future of broadband.

Fiber as a Foundational Technology — Then and Now

This evolution from the Speed Era to the Experience Era, and ultimately toward the Adaptive Era, requires deliberate changes in how networks are designed, built and operated. Seamless connectivity and intelligent solutions demand high-capacity, reliable infrastructure that can support new technologies and service offerings.

Fiber optics is a key technology enabling this shift. It enabled the Speed Era by addressing growing demands for throughput and scale. As the industry moved into the Experience Era, those same characteristics became essential for delivering consistent, high-quality connectivity, and fiber optics will continue to be a key enabler as networks evolve toward the Adaptive Era.

Fiber Optics at CableLabs

Fiber optics is widely recognized as the preferred broadband solution for new network builds among municipalities, utilities, telcos and traditional cable operators. Its ability to support significant capacity growth and evolving technologies makes it a strong foundation for future broadband innovation.

At CableLabs, our work in fiber optics spans the full lifecycle of fiber to the premises (FTTP) networks. This includes architecture, operations and management, interoperability, access technologies, provisioning and deployment efficiency — all focused on helping operators deploy and operate fiber networks at scale.

One example of CableLabs’ early work in fiber optics is the DOCSIS Provisioning of EPON (DPoE) specifications. Developed more than 15 years ago, DPoE enabled operators to use existing DOCSIS provisioning systems to configure and manage fiber-based customer premises equipment in much the same way they managed DOCSIS cable modems. This work helped reduce operational complexity, supported early FTTP deployments, and enabled the industry’s first 10 Gbps PON implementations — impacts that are still reflected in networks today, even as technologies continue to evolve.

Today, CableLabs’ fiber optics work continues across a wide range of specifications, research efforts and collaborative activities. This includes the Cable OpenOMCI specification and ongoing interoperability events that help ensure consistent, interoperable FTTP deployments across vendors and operators. CableLabs has also published reports on optical network operations and management and continues to work with vendor partners on specifying interoperable telemetry collection — supporting more observable, manageable and reliable optical networks.

CableLabs is also advancing the coherent PON specification, alongside research into PON security and the development of a complementary PON security specification designed to work in tandem with existing PON security mechanisms.

Beyond access networks, CableLabs’ advanced optics work includes coherent optics specifications, research into Distributed Fiber Optic Sensing (DFOS), advanced wavelength sources, wavelength switching technologies, hollow core fiber, and research into low-latency performance over optical networks.

Fiber Enabling the Experience and Adaptive Eras

Today’s users don’t want to think about their network connection. They simply want it to work — everywhere, on any device. Whether they are video-conferencing from home, streaming entertainment across multiple screens or relying on smart devices to manage daily life, the expectation is seamless performance without interruption.

Fiber optics delivers the speed, reliability and capacity necessary to power these experiences and keep pace with rising demands. More importantly, it provides the headroom required for experiences we cannot yet anticipate.

Looking ahead, the Adaptive Era envisions networks that sense, learn and respond to user needs in real time. These intelligent, context-aware networks will require infrastructure capable of supporting advanced capabilities such as AI-driven network optimization, real-time sensing and self-healing. Fiber optics provides the high-performance foundation these innovations require, enabling proactive maintenance, adaptive bandwidth allocation and self-optimizing networks.

For all-fiber deployments today, passive optical network (PON) technology remains a widely used and effective approach, offering the scalability, resiliency and reliability needed to support current services. At the same time, CableLabs continues to research and evaluate advanced optical technologies that could complement or succeed PON as network requirements and economic considerations evolve.

Fiber Optics as the Path Forward

Fiber optics is the strategic enabler that prepares the industry for whatever comes next. As a critical component of CableLabs’ Technology Vision and its Network Platform Evolution theme, fiber optics provides clear priorities for innovation, aligns with end-user expectations for performance and reliability, and offers a forward-looking foundation for long-term planning.

As broadband continues to evolve through new eras, applications, services and user expectations will inevitably change. Fiber optics provides the stable, high-capacity foundation that allows networks to adapt. CableLabs’ long-standing investment in fiber optics positions the industry to move forward with confidence — building scalable, interoperable and future-ready FTTP networks together.

Technology Vision

Shape the Future of Connectivity at CableLabs Tech Summit 2026

Key Points

- CableLabs Tech Summit is an exclusive networking and knowledge-sharing event for our member operators and exhibiting vendor community, building upon the successful format of its predecessor, CableLabs Winter Conference.

- Joanna Stern, author of the upcoming book “I AM NOT A ROBOT” and award-winning technology columnist at The Wall Street Journal, will deliver the keynote, exploring how consumer tech evolution is shaping broadband industry expectations.

- Registration is open now for the conference, scheduled for April 27–29, 2026, in Westminster, Colorado.

The broadband industry is evolving at unprecedented speed. As networks become more intelligent, adaptive and context-aware, collaboration is key to shaping what comes next. CableLabs Tech Summit 2026 is where that collaboration takes shape — connecting strategy with execution to accelerate innovation and scale what works.

Building on the legacy of CableLabs Winter Conference, Tech Summit deepens the dialogue — uniting operators, exhibiting vendors and broadband leaders to focus on the industry’s most critical priorities. This private, practical forum is designed to move ideas into action.

Influence the Direction of the Industry — Together

With the Technology Vision as a shared foundation, Tech Summit fosters real-time collaboration through structured sessions, networking, working groups and an interactive exhibit floor. This year, the event will focus on how AI, automation and evolving service models are transforming the broadband experience, particularly across platform intelligence, DOCSIS technology and in-home reliability.

Much more than a traditional conference, CableLabs Tech Summit is a focused and comprehensive working session designed to strengthen relationships, spark collaboration and drive progress on shared priorities.

This intimate, closed-door event brings together CableLabs members and approved exhibitors to align on strategic priorities and turn insight into impact. Expect candid discussions, real-world use cases and collaborative problem-solving that drive innovation and strengthen industry momentum.

Featured Keynote: Joanna Stern of The Wall Street Journal

Joanna Stern, an Emmy Award-winning journalist and one of the most respected voices in personal technology today, will deliver the keynote. As a senior personal technology columnist for The Wall Street Journal, Joanna blends deep reporting with creativity, producing columns and videos that make complex topics accessible and entertaining.

Her upcoming book, “I AM NOT A ROBOT,” explores the impact of AI on daily life by chronicling a year in which she let artificial intelligence infiltrate nearly every aspect of her world. Throughout her career, Joanna has earned numerous honors, including Gerald Loeb Awards for her innovative journalism.

Smaller Market Conference

Ahead of Tech Summit on Monday, April 27, CableLabs invites small and mid-tier operators to join Smaller Market Conference, hosted by CableLabs and NCTA – The Internet & Television Association. Connect with and hear from executives and leaders from across the spectrum of responsibilities, representing business strategy, technology, engineering, marketing, operations and customer experience.

This event is available for CableLabs members and NCTA guests. Registration for Smaller Market Conference is separate from Tech Summit, so please register for both if you’re planning to attend.

Collaboration in Action at CableLabs Tech Summit 2026

Be part of the ecosystem defining the next generation of connectivity. Join us in Westminster, Colorado, April 27–29, 2026, for two days of strategic collaboration, practical insights and technology exploration, all built around CableLabs’ Technology Vision. At Tech Summit, you can:

- Explore and advance the industry’s Technology Vision: Delve into the technologies that define this strategic framework and collaborate with fellow members and vendors to advance the Technology Vision.

- Connect with fellow experts: Engage in meaningful discussions, share ideas and collaborate with leaders who are driving industry transformation.

- Future-proof your networks: Gain actionable insights to prepare your networks — and your teams — for the challenges and opportunities ahead.

Tech Summit kicks off on Monday, April 27, with a welcome reception followed by two full days of practical knowledge and enriching insights. This is an event you can’t afford to miss. Members who register by January 31, 2026, will receive an exclusive YETI tumbler.

Security

CableLabs Expands Engagement in the Connectivity Standards Alliance

Key Points

- CableLabs has advanced to Promoter Member status in the Connectivity Standards Alliance, gaining a board seat and greater influence over global Internet of Things and smart-home interoperability standards.

- As the broadband industry moves beyond speed as the primary differentiator, CableLabs is helping shape secure, reliable and interoperable device standards that improve the connected-home experience for consumers.

CableLabs has strengthened its role in the Connectivity Standards Alliance (CSA), becoming a Promoter Member and joining the Board of Directors. As smart homes become smarter and fill with more connected devices, the need for secure, reliable and interoperable devices has never been greater. Our expanded role enables us to help shape the standards that make that possible — from Matter to Zigbee to emerging Internet of Things (IoT) security frameworks.

The CSA is a standards development organization dedicated to the IoT industry and the connected home. It creates, manages and promotes standards through working groups that include Matter, Zigbee, Product Security, Data Privacy and Aliro (physical access). CSA Matter devices use Wi-Fi and Thread to create an ecosystem “fabric” within the home to allow secure interoperability of IoT devices.

This work is increasingly critical as the broadband industry moves beyond speed as the primary differentiator. The foundation for CableLabs’ Technology Vision, our Eras of Broadband Innovation model illustrates the industry’s evolution from raw speed to intelligent, adaptive services.

These differentiating services are based upon a subscriber’s engagement with their networked devices, which makes it clear that continued increases in capacity, although necessary, will not be sufficient on their own to meet future connectivity expectations. Subscribers experience the network through their devices, and one of the first places they will see this is within the smart home and IoT space.

One heuristic in corporate governance is to use outsourced or contract IT help when operating fewer than 50–75 hosts, but to hire the first network admin once there are 75–100 managed hosts (or more than 50 employees).

This year, Comcast reported that the average subscriber home has 36 connected devices. This means that managing those devices is likely already a concern for many consumers. How can operators make managing devices easier and safer for those subscribers? How can they ensure the complexity doesn’t lead to insecurity through user apathy or burdensome expectations?

Delivering the Best Experience Requires Collaboration

The Alliance’s objectives center on a simple premise: if subscribers enjoy their device interactions, if those devices work reliably and make life easier, if they’re secure and protect privacy, and if those devices are interoperable — the networks that make it all happen benefit. But the only way to make it work across manufacturers, ecosystems and technologies is for industry stakeholders to come together around a standards-based approach to secure device interoperability.

All of these customer experiences are areas where the broadband industry’s objectives intersect with those of smart home device providers and operators. Improving these experiences leads to increased adoption, which then drives cycles of economic growth for all participants in this sector — including those providing connectivity solutions.

Standardized, secure interoperability reduces network operator costs (e.g., support calls), lowers risk (e.g., insecure devices and botnets), enhances subscriber safety (e.g., home network vulnerabilities, physical premises security), simplifies device management (e.g., software updates) and improves the overall user experience. This is a key part of our engagement. The broadband industry’s role in providing a safe and easy subscriber experience doesn’t end at the cable modem.

CableLabs is engaging in this work for several reasons, which all play toward a larger vision:

- To help shape the future of smart home global IoT interoperability standards

- To represent the broadband industry and its interests in the global marketplace and with subscribers

- To ensure broadband ecosystems have compatibility with devices using these standards

- To enhance the security posture of the broadband industry and within the smart home

- To promote industry collaboration and engagement including device manufacturers, ecosystem operators, testing labs, integrators, silicon providers and network operators

Taking On a Larger Leadership Role with CSA

CableLabs’ move from participant to Promoter Member will enable us to take part in the decisions that guide the organization and take on additional leadership roles within the working groups. We’ll have increased visibility and opportunities across the organization, and we can keep the broadband industry front and center in each of these engagement areas.

The board representatives for CableLabs will be our CISO and Distinguished Technologist Brian Scriber and principal architect Jason Page.

We look forward to contributing our unique perspectives on the network operator ecosystem and our Technology Vision for the industry. We’ll also bring a deep understanding of connectivity tooling like Wi-Fi, the home gateway/router experience, security principles across communications protocols and production PKI operations related to consumer electronics security, as well as a thorough understanding of the policy and regulatory space related to cybersecurity and connected devices.

Wired

XGS-PON Interoperability Event Wraps Productive Year of Testing

Key Points

- The fourth XGS-PON Interop·Labs event of 2025 brought new suppliers and chipset families into the testing mix, deepening CableLabs’ supplier diversity.

- The event expanded test coverage to include new OMCI message exchanges and configuration scenarios, which were inspired by findings from earlier events.

- Test cases included new performance-monitoring tests, basic notifications tests and greatly enhanced test plan support for integrated PON gateways.

Our final Interop·Labs event of 2025 was held last month at CableLabs headquarters in Louisville, Colorado. As with prior events, the week focused on interoperability aspects of the ONU Management and Control Interface (OMCI), defined via a combination of ITU-T Recommendation G.988 and the CableLabs Cable OpenOMCI specification.

This event continued our approach of pairing optical line terminals (OLTs) and optical network units (ONUs) from different suppliers to exercise real-world configurations, management and monitoring behaviors. Supplier engineering teams arrived with updated software, new device variants and a fresh set of test cases built from lessons learned through the year’s earlier interop events.

Supplier Participation in the XGS-PON Interop

Participating suppliers of customer premises equipment (CPE) brought a wide range of XGS-PON ONUs and PON residential gateways, representing multiple chipset families. These devices were paired with OLT platforms from Calix (E7-2) and Nokia (Lightspan MF-2) in a collaborative lab environment designed to exercise aspects of the OMCI implementation of the ONUs.

The diversity of CPE devices — including those based on PON SoCs not previously tested at these events — created meaningful multi-vendor pairings. Several new ONU suppliers also joined this event, expanding the ecosystem represented in our lab.

For the November interop event, the following suppliers provided CPE devices: Calix (ONU and multiple gateways), Gemtek (gateway), Hitron (multiple ONUs), Nokia (multiple ONUs and gateway), Sagemcom (ONU and gateway), Sercomm (ONU), Ubee (ONU and gateway) and Vantiva (ONU).

Testing Environment and Themes

As with our August event, each OLT supplier had a dedicated workbench with a small-scale PON Optical Distribution Network. Engineers used OLT debugging tools and our XGS-PON analyzer to collect OMCI traces and performance data.

During the week of testing, engineers focused on several interoperability-related themes:

- The impact of the extended VLAN tagging — Downstream mode attribute on the handling of Priority Code Point (PCP)-marked frames

- OLT configuration to support vendor-specific gateway eRouter VLAN IDs

- MAC Bridge Service Profile (CPE MAC learning) behavior

- 64-bit Ethernet frame counter performance monitoring reporting

- Forwarding of jumbo Ethernet frames

- Notification and alarm behavior of ONU Ethernet link state changes

- Software download and activation processes
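
Two of the themes above come down to small pieces of bit-level arithmetic, sketched here in Python purely as an illustration. The PCP is the top three bits of the 16-bit Tag Control Information (TCI) field in an IEEE 802.1Q VLAN tag, and the value of a 64-bit frame counter is easiest to see by estimating how quickly a 32-bit counter would wrap. The function names and the assumed load (10 Gb/s of minimum-size Ethernet frames) are choices made for this example and are not taken from the interop test plan.

```python
def parse_tci(tci):
    """Split a 16-bit 802.1Q Tag Control Information value into (pcp, dei, vid)."""
    if not 0 <= tci <= 0xFFFF:
        raise ValueError("TCI must fit in 16 bits")
    pcp = (tci >> 13) & 0x7   # Priority Code Point: top 3 bits
    dei = (tci >> 12) & 0x1   # Drop Eligible Indicator: 1 bit
    vid = tci & 0x0FFF        # VLAN ID: low 12 bits
    return pcp, dei, vid


def seconds_until_wrap(counter_bits, frames_per_second):
    """Time for a frame counter of the given width to roll over at a steady rate."""
    return (2 ** counter_bits) / frames_per_second


# Assumption for illustration: a 10 Gb/s stream of minimum-size frames.
# A 64-byte frame occupies 84 bytes on the wire once the 8-byte preamble
# and 12-byte inter-frame gap are included.
LINE_RATE_BPS = 10_000_000_000
FRAMES_PER_SECOND = LINE_RATE_BPS / (84 * 8)
```

Under these assumptions, `parse_tci(0xA064)` yields a PCP of 5 on VLAN 100, and `seconds_until_wrap(32, FRAMES_PER_SECOND)` comes out at a little under five minutes, while the 64-bit equivalent is on the order of tens of thousands of years.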

Across these themes, the goal of the event remained clear: identify inconsistencies, understand root causes and convert findings into actionable improvements. Test results were encouraging. Many items identified in earlier events have been addressed by suppliers, even as this expanded test coverage uncovered additional issues that will guide our next round of improvements.

This event capped a productive year of XGS-PON interoperability testing at CableLabs. Throughout 2025, we steadily grew supplier participation, evolved the test plan to cover critical OMCI features more thoroughly and expanded support for diverse device types — specifically integrated PON gateways.

In February, CableLabs brought together three OLT suppliers to test their DOCSIS Adaptation Layer implementations, demonstrating how operators can use familiar DOCSIS-style configuration files to provision services on XGS-PON networks. The event validated the viability of this provisioning approach for operators transitioning to ITU-T PON technologies without replacing existing back-office systems.

During our April event, we focused more deeply on the Cable OpenOMCI specification, marking a milestone in the industry’s effort to improve cross-vendor compatibility. OLT suppliers Calix, Ciena and Nokia paired their systems with ONUs from six suppliers to test five core OMCI functions.

Then, in August, CableLabs hosted another OMCI-focused event, bringing together the largest group of OLT suppliers yet, alongside seven ONU suppliers. Engineers tested requirements from the I02 version of the Cable OpenOMCI specification, with expanded test cases covering ONU time synchronization and optical power levels.

Continuous Improvement Cycle

The I03 version of the Cable OpenOMCI specification — incorporating learnings from the April and August interop events — is available now. The findings from this event will be discussed in the CableLabs Common Provisioning and Management of PON (CPMP) working group and may generate new engineering change requests to the Cable OpenOMCI specification in the new year.

This cycle of lab testing, specification refinement and standards engagement is central to ensuring that XGS-PON networks can operate as truly multi-vendor systems.

See You Next Year

CableLabs has four additional PON Interop·Labs events scheduled in 2026, each focused on strengthening various aspects of ITU-T PON interoperability. We invite suppliers to join us in the CPMP and Optical Operations & Management working groups as we continue evolving our specifications. And we look forward to welcoming OLT and ONU suppliers back to our Louisville labs at our next interoperability event planned for January 2026.

Wired

The Pull of PNM: More Proactive, Less Maintenance Please

Key Points

- CableLabs and SCTE's proactive network maintenance working groups bring together operators and vendors to collaboratively develop tools and best practices that make network maintenance more proactive and efficient.

- These working groups offer opportunities for operators and vendors to shape the future of broadband network operations alongside other industry experts.

Proactive network maintenance (PNM) continues to make inroads on its goals of reducing troubleshooting time and cost while reducing the impact of network impairments on customer service. The broadband industry achieves this through grassroots efforts developed and shared by participants in two working groups. This global ecosystem of vendors and operators shares its ideas, efforts and challenges to help move the community further on the path to efficient operations and improved services.

In a recent face-to-face meeting, we continued to make progress on several of the working groups’ workstreams, sprint toward some near-term goals and set some long-term goals. And there is more to come!

Working Groups

CableLabs’ PNM Working Group (PNM WG) meets bi-weekly for all current work, with a focus on some specific workstreams on the off-weeks. SCTE's Network Operations Subcommittee Working Group 7 (NOS WG7) also focuses on proactive network maintenance and related workstreams. The CableLabs group focuses on developing the engineering and science to enable better PNM while NOS WG7 focuses on the field implementation of that engineering and science.

In addition, the chairs of these working groups meet often to manage the workstreams and ensure progress on key developments that benefit the industry most. Many members overlap between the two groups, which ensures an effective pipeline of research, development and implementation.

Workstreams

The proactive network maintenance working groups maintain progress on several developing workstreams, and a few of note have recently concluded.

Our Methodology for Intelligent Network Discovery (MIND) effort is an important workstream where we target repair efficiency through topology discovery and automation. Participants share developments and ideas on Thursdays, then bring the best results to Tuesday meetings, where we contribute canonical methods and software code for reference. This high cadence and open contribution are leading to well-developed ways to utilize channel estimation data for identifying features in the radio frequency (RF) network that aid in determining the ordinality and cardinality of network components. We accomplish this through an initial process of data cleanup to reveal the features in the signal, then through clustering and pattern matching methods we identify the features in the network and reveal the network's topology. As with all research, the initial process demonstrates functionality, which we have done; now we are working to make it reliable for all the diverse network designs and modify it for various PNM use cases.
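
As a rough illustration of the "reveal features, then cluster" idea described above, the following Python sketch converts per-subcarrier channel estimates to the time domain, picks out the strongest echo and groups modems that share a similar echo delay. This is not the working group's method: the inverse DFT, the single-echo channel model, the gap-based grouping and all function and modem names are assumptions made for the example, and the data is synthetic.

```python
import cmath


def impulse_response(coeffs):
    """Inverse DFT of complex channel estimates (pure Python, O(N^2))."""
    n = len(coeffs)
    return [
        sum(c * cmath.exp(2j * cmath.pi * k * t / n) for k, c in enumerate(coeffs)) / n
        for t in range(n)
    ]


def strongest_echo_bin(coeffs):
    """Delay bin of the largest time-domain tap, excluding the main path at bin 0.

    Only the first half of the bins is searched, since later bins alias to
    negative delays.
    """
    h = impulse_response(coeffs)
    return max(range(1, len(h) // 2), key=lambda t: abs(h[t]))


def synthetic_channel(n, echo_bin, echo_gain):
    """Hypothetical channel: a main path plus one echo delayed by echo_bin samples."""
    return [1 + echo_gain * cmath.exp(-2j * cmath.pi * k * echo_bin / n) for k in range(n)]


def group_by_echo(echo_bins, tolerance=1):
    """Group modem IDs whose echo bins lie within `tolerance` of their neighbor."""
    groups = []
    for modem, bin_index in sorted(echo_bins.items(), key=lambda kv: kv[1]):
        if groups and bin_index - groups[-1][-1][1] <= tolerance:
            groups[-1].append((modem, bin_index))
        else:
            groups.append([(modem, bin_index)])
    return [[modem for modem, _ in group] for group in groups]


# Synthetic example: two modems see the same echo, a third sees a different one.
channels = {
    "cm-a": synthetic_channel(64, 5, 0.2),
    "cm-b": synthetic_channel(64, 5, 0.1),
    "cm-c": synthetic_channel(64, 12, 0.3),
}
echo_bins = {modem: strongest_echo_bin(c) for modem, c in channels.items()}
groups = group_by_echo(echo_bins)
```

In this toy case the two modems that share an echo signature end up in one group, which hints at how common features in the data could point to shared network components. Real channel estimates are noisy and contain many features, which is why the cleanup and reliability work described above matters.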

The PNM WG has been working hard to develop generative AI (GAI) for Network Operations (NetOps), or, more simply, AIOps. This group develops and shares the software and tooling to enable the output from NOS WG7 and PNM WG to be automated into network operations through retrieval-augmented generation (RAG) models and agentic AI solutions. In parallel, industry experts are peer reviewing the output from these GAI tools to ensure quality and engineering precision can be reliably delivered, improving on our own peer review process as we go.

Meanwhile, the PNM WG is developing standard ways to quantify impairments in the network as they are revealed in PNM data, referred to as the measurements work, to enable GAI applications and streamline PNM automation.

The PNM WG looks forward to publishing an update to our "Galactic Guide" at the beginning of 2026. The current version is available to the public. In the new version, you can look forward to receiving updates on the development of the measurements work and MIND work, in addition to initial methods to use Upstream Data Analysis (UDA), for those cable modems that can reveal the upstream spectrum.

New workstreams start when the groups complete earlier workstreams and queue up the next priority effort. The initial work on UDA was completed early this year, and more work on it is planned for the future. Likewise, NOS WG7 has recently completed outlining some new PNM training to come from SCTE, which updates the training to utilize new learning methods, and includes our newest knowledge about maintenance efficiency and proactivity.

PNM Face-to-Face

About once a year, CableLabs hosts a face-to-face meeting for these PNM groups and their members. We hosted a hybrid event in October with more than 30 participants representing many operators and vendors at our Colorado headquarters and through virtual attendance. Our time during the face-to-face focused on providing updates about our workstream progress, discussing potential future workstreams and developing content for the upcoming Galactic Guide update.

Near-Term Goals

The working groups are hyper-focused on a few key near-term results:

- Completion of the initial use case for channel estimation data to identify network features and determine the network topology. This is the MIND work.

- Unification on impairment quantification and, specifically, the ability to monitor resiliency in DOCSIS® networks to transform PNM into managing capacity. Our measurements work will help operators better assess the urgency of proactive repairs and prioritize work based on risk of customer impact.

- Identifying new UDA opportunities as new methods are published and further validated.

- Completing updates to the Galactic Guide, including incorporating new, more precise treatments of several important RF concepts published in our technical reports (such as several monographs published recently by SCTE’s NOS WG1).

Long-Term Goals

Accomplishments on our near-term goals open up new opportunities:

- The MIND work is not done; we can still develop better, automated localization for troubleshooting proactive and reactive network faults.

- One topic that will be important for our MIND goals is standardizing GIS information. This would allow the automation we created to work across tools and platforms for greater ease of use and broader adoption.

- We look forward to a forthcoming release of the new PNM training material from SCTE, which our expert participants intend to peer review further.

- As our work on GAI use for network operations continues, we intend to develop better input and better testing methods of these new tools to ensure their reliable application.

- The face-to-face meeting also revealed that we should make better use of our knowledge of noise, distortion and interference (NDI). By looking more closely and improving the categorization of these various signal impairments, we expect to identify their sources and locations through automation — which will help form additional efficient PNM opportunities.

Beyond Proactive Network Maintenance

While there is a substantial community working on RF and DOCSIS-related PNM, CableLabs doesn’t want to leave fiber out of the fun!

We are currently identifying near- and far-term opportunities in the passive optical networking (PON) world as well. As many operators push fiber deeper — all the way to the premises — the Optical Operations and Maintenance Working Group (OOM WG) works toward identifying use cases and aligning them to the telemetry that network elements provide. By better aligning PON and DOCSIS technologies, the group helps streamline network operations and maintenance further.

While the fun continues with DOCSIS technology and there is much more to develop, we are applying our experience with RF over coax to RF over fiber.

Whether your concerns are with maintaining DOCSIS or PON deployments, or ensuring network or service reliability, there is a community ready to work with you. Join a working group, and join the fun!

Wired

Unlocking Optical Fiber’s Potential: Distributed Sensing for Smarter Networks

Key Points

- Distributed fiber optic sensing turns standard optical fibers into thousands of sensors for real-time environmental awareness, infrastructure monitoring and intelligent network optimization — effectively creating an early-warning system that enables operators to prevent failures and improve network reliability.

- CableLabs invites operators, vendors and researchers to collaborate on field trials, standards development and commercialization strategies for this technology.

As cable networks evolve to meet the demands of next-generation connectivity, a quiet transformation is unfolding within the fibers that carry our data.

Distributed fiber optic sensing (DFOS) is emerging as a transformative technology that enables real-time environmental awareness, infrastructure monitoring and intelligent network optimization — all using the existing fiber infrastructure.

This sensing revolution reflects broader industry trends toward full automation, digital network twins and pervasive sensing in CableLabs’ Technology Vision, positioning cable networks as foundational platforms for intelligent and adaptive connectivity.

What Is Distributed Fiber Optic Sensing and Why Does It Matter?

DFOS turns standard optical fibers into thousands of sensors capable of detecting acoustic, thermal and mechanical disturbances. This capability allows operators to monitor their networks proactively, detect threats before they cause damage and even gather insights about the surrounding environment.

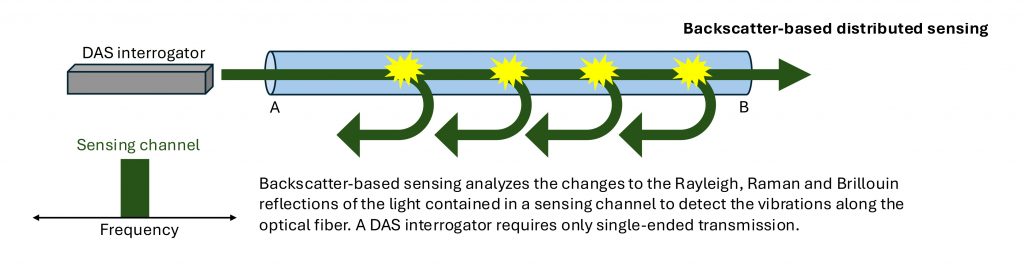

Two main approaches — backscatter-based and forward-based sensing — offer complementary strengths.

Backscatter systems, illustrated below in Figure 1, offer high spatial resolution and single-ended deployment, operating by transmitting laser pulses through the fiber and analyzing subtle variations in the reflected light. These changes carry unique signatures of acoustic, thermal or mechanical disturbances along the fiber.

The term “distributed” means that measurements are captured continuously along the entire length of the optical fiber (not just at discrete points), turning a single fiber strand into thousands of sensing locations.

Figure 1. Backscatter-based distributed sensing.
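
The arithmetic behind backscatter sensing can be sketched in a few lines of Python. The round-trip time of the reflected light gives the location of a disturbance, and the pulse width sets the length of fiber that a single measurement covers, which is how one strand becomes thousands of sensing locations. The group index, pulse width and fiber length below are assumed, typical-looking values chosen for the example, and the function names are hypothetical.

```python
C_VACUUM_M_PER_S = 299_792_458
GROUP_INDEX = 1.468  # assumed value for standard single-mode fiber


def event_distance_m(round_trip_s, group_index=GROUP_INDEX):
    """Distance along the fiber to a reflection, from its round-trip delay."""
    return C_VACUUM_M_PER_S * round_trip_s / (2 * group_index)


def spatial_resolution_m(pulse_width_s, group_index=GROUP_INDEX):
    """Length of fiber illuminated by one pulse, which bounds the resolution."""
    return C_VACUUM_M_PER_S * pulse_width_s / (2 * group_index)


def sensing_locations(fiber_length_m, pulse_width_s, group_index=GROUP_INDEX):
    """Number of resolution-sized segments that fit along the fiber."""
    return int(fiber_length_m // spatial_resolution_m(pulse_width_s, group_index))


# Assumed example: a 100 ns pulse on a 40 km fiber.
resolution = spatial_resolution_m(100e-9)     # roughly 10 m
locations = sensing_locations(40_000, 100e-9)  # a few thousand segments
distance = event_distance_m(100e-6)            # roughly 10 km
```

Shorter pulses improve resolution but return less backscattered energy, so real interrogators trade resolution against reach.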

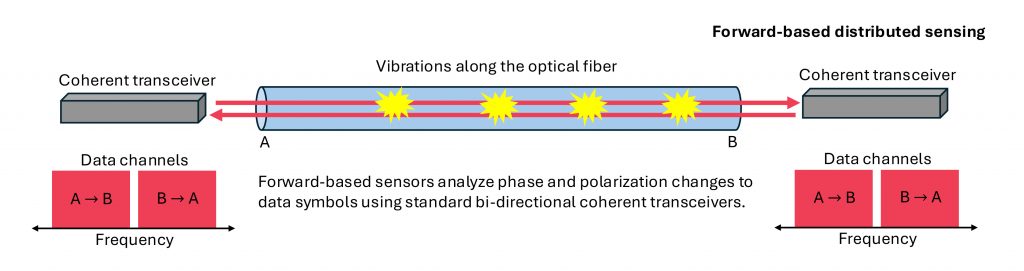

Forward-based DFOS, which Figure 2 shows, excels in long-distance sensing and seamless compatibility with existing optical amplifiers. By leveraging coherent transceivers already deployed in high-capacity networks, this approach enables operators to extract sensing information from the same signals used for data transmission, without requiring additional hardware.

This integration minimizes cost, simplifies deployment and opens the door to advanced analytics over hundreds of kilometers, making it ideal for large-scale infrastructure monitoring and proactive maintenance.

Figure 2. Forward-based distributed sensing.

Cable Networks as City-Wide Sensor Arrays

Imagine a city in which every fiber strand doubles as a sensor. With DFOS, this vision becomes reality. Cable operators can leverage their extensive fiber deployments to create ubiquitous sensing coverage. Bundled fiber paths traversing urban landscapes can detect vibrations, temperature changes and other anomalies — enabling smarter cities and safer infrastructure.

The “Network as Sensors” concept enabled by DFOS transforms optical fibers into thousands of sensing elements, enabling real-time monitoring of large-scale environments and infrastructure.

Real-World Impact: Field Trials and Use Cases

DFOS is already proving its value in the field for proactive maintenance, urban monitoring, environmental sensing and security applications.

Detecting early signs of fiber damage or accidental cable breaks is a key use of DFOS technology. It helps identify unusual activity near critical fiber links, allowing network operators to take preventive action before failures occur.

Researchers have demonstrated this capability using advanced transceivers on long-distance fiber links in real-world network environments. In one case, a DFOS system detected clear polarization changes several minutes before a buried cable was accidentally damaged during construction activity. Such early-warning signals, combined with advanced coherent transceivers, can improve network stability by enabling proactive rerouting and fault prevention.
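
One simple way to turn polarization readings into an early-warning signal is to track how far the state of polarization (SOP) moves between successive samples and flag unusually large jumps. The Python sketch below does this with Stokes vectors, using the angle between consecutive samples on the Poincare sphere. It is an assumption-laden illustration and not the method used in the trial described above; the threshold, sample values and function names are hypothetical.

```python
import math


def sop_angle_rad(s_a, s_b):
    """Angle on the Poincare sphere between two Stokes vectors (S1, S2, S3)."""
    dot = sum(a * b for a, b in zip(s_a, s_b))
    norm = math.sqrt(sum(a * a for a in s_a)) * math.sqrt(sum(b * b for b in s_b))
    if norm == 0:
        raise ValueError("Stokes vectors must be non-zero")
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def flag_sop_events(samples, threshold_rad=0.2):
    """Indices where the SOP moved more than the threshold since the last sample."""
    return [
        i
        for i in range(1, len(samples))
        if sop_angle_rad(samples[i - 1], samples[i]) > threshold_rad
    ]


# Synthetic readings: slow drift, then an abrupt change at index 3.
samples = [
    (1.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (0.999, 0.04, 0.0),
    (0.0, 1.0, 0.0),
    (0.0, 1.0, 0.0),
]
events = flag_sop_events(samples)
```

A deployed system would need a baseline for normal polarization drift on each link before choosing a threshold, since wind, traffic and temperature all move the SOP as well.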

DFOS is well-suited for cities, where existing fiber networks can be used to monitor traffic, construction and infrastructure conditions in real time. Its continuous, high-resolution sensing helps improve safety and resilience by spotting early signs of damage or stress in urban systems.

Recent studies in cities such as Hong Kong have shown that DFOS can identify and track vehicles based on their unique vibration patterns near roadside fibers. Combining acoustic vibration and temperature sensing has also proven effective for detecting underground issues, such as damaged or flooded cables, and has shown strong potential for improving network reliability.

DFOS offers powerful capabilities for environmental and geophysical monitoring by transforming standard optical fibers into dense, real-time sensor arrays. It can detect and localize ground vibrations, temperature changes and strain along vast lengths of deployed fiber, making it ideal for monitoring earthquakes, landslides, permafrost thaw, subsea tsunamis and subsurface hydrological processes. DFOS allows researchers to observe dynamic environmental changes over time and across large areas. This enables early warning systems, long-term climate studies and enhanced understanding of natural hazards in both remote and populated regions.

DFOS can enhance security around critical infrastructure by complementing traditional tools like cameras, radar and lidar. Using vibration data along network fibers, it can detect and classify mechanical threats such as jackhammers or excavators. Researchers have shown that machine learning (ML) techniques, including transfer learning, can achieve high accuracy when analyzing these signals. This demonstrates that DFOS can reliably identify various types of mechanical activity, even when trained on limited or noisy data.

Overcoming Challenges and Looking Ahead

Although DFOS offers immense promise, several hurdles remain.

- Integrating sensing with live data traffic. The ultimate goal of fiber sensing is to use existing optical fiber networks to send data and sense environmental changes at the same time. However, DFOS systems still rely on unused “dark” fibers because combining sensing with live data traffic is difficult. Early tests showed that strong sensing pulses caused errors in nearby data channels. These high-power signals create interference through nonlinear effects, so the spacing between sensing and communication channels must be carefully controlled.

- Deploying in PONs. It’s challenging to integrate traditional DFOS techniques into access networks, such as passive optical networks (PONs), which employ passive power splitters to connect multiple homes and businesses to the internet. This is because the backscattered signals from the drop fibers behind each splitter superimpose on the trunk fiber before being detected at the optical line terminal, which makes it hard to tell which drop fiber an event came from.

- Reducing interrogator costs. Most DFOS interrogators available today are costly because they’re designed for long-range operation, high optical power and specialized industrial applications such as oil and gas, security, and geophysical sensing. To enable broader deployment in communication networks, the technology must be scaled by reducing the per-unit cost and optimizing the design for operator-focused use cases.

- Training ML models on rare events. Training ML models to spot important events in DFOS data is key to realizing the full potential of fiber sensing, especially for rare but critical issues like early fiber damage or breaks. The challenge is that DFOS systems generate huge amounts of data, most of which comes from harmless background noise. For instance, a system monitoring tens of kilometers of fiber can produce terabytes of data every day. As a result, meaningful events are buried in a sea of routine data, making it hard for ML models to learn what truly matters.
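
To see how the data volumes mentioned above can reach terabytes per day, here is a rough back-of-the-envelope sketch. The channel spacing, sample rate and sample size are illustrative assumptions, not figures from any specific interrogator, and the function name is hypothetical.

```python
def dfos_daily_volume_tb(fiber_km, channel_spacing_m, sample_rate_hz, bytes_per_sample):
    """Estimate raw DFOS output in terabytes per day (1 TB = 10**12 bytes)."""
    # Each sensing "channel" is one measurement point along the fiber.
    channels = int(fiber_km * 1000 / channel_spacing_m)
    bytes_per_second = channels * sample_rate_hz * bytes_per_sample
    seconds_per_day = 86_400
    return bytes_per_second * seconds_per_day / 1e12


# Assumed settings: 50 km of fiber, one channel per meter,
# 2 kHz sampling and 4-byte samples.
volume = dfos_daily_volume_tb(50, 1.0, 2_000, 4)
print(f"{volume:.1f} TB/day")
```

Under these assumptions, the raw stream is roughly 400 MB per second. Coarser channel spacing or a lower sample rate shrinks the total proportionally, which is one reason filtering and event detection close to the interrogator matter.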

CableLabs is tackling these challenges with pioneering approaches:

- Coexistence strategies. A novel method enables sensing on active fiber networks without compromising broadband data channels. By using only a fraction of the fiber spectrum, operators can embed distributed sensors into live networks, eliminating the need for dedicated fiber strands and unlocking cost-effective scalability.

- Low-power coded sequences. CableLabs has demonstrated techniques that allow sensing signals to coexist seamlessly with traditional data channels, paving the way for integration without service disruption and enabling self-learning networks.

- Adaptive sensing algorithms. Leveraging AI and ML, these algorithms dynamically adjust to changing environments, improving detection accuracy and reducing false positives.
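
The article does not describe how the coded sequences work, so the following is only a generic illustration of the underlying idea, sometimes called pulse compression, and not CableLabs' method. A known low-amplitude code is spread over many samples, and correlating the received trace against that code recovers a reflection that sits below the per-sample noise level. All names, amplitudes and lengths here are assumptions chosen for the sketch.

```python
import random


def make_code(length, seed=0):
    """Generate a pseudo-random +1/-1 probe code (illustrative only)."""
    rng = random.Random(seed)
    return [rng.choice((-1, 1)) for _ in range(length)]


def simulate_return(code, reflections, trace_len, noise_std, seed=1):
    """Build a noisy received trace; `reflections` maps position -> amplitude."""
    rng = random.Random(seed)
    trace = [rng.gauss(0.0, noise_std) for _ in range(trace_len)]
    for pos, amp in reflections.items():
        for i, chip in enumerate(code):
            trace[pos + i] += amp * chip
    return trace


def correlate(trace, code):
    """Slide the known code along the trace and average the products."""
    n = len(trace) - len(code) + 1
    return [
        sum(trace[k + i] * c for i, c in enumerate(code)) / len(code)
        for k in range(n)
    ]


code = make_code(255)
# One weak reflection at sample 120, with amplitude below the noise level.
trace = simulate_return(code, {120: 0.4}, trace_len=600, noise_std=0.5)
profile = correlate(trace, code)
peak = max(range(len(profile)), key=lambda k: abs(profile[k]))
print("strongest correlation at sample", peak)
```

Averaging over the 255-chip code reduces the noise by roughly the square root of the code length while the reflection keeps its amplitude. That is why a probe can stay at low power, and disturb neighboring data channels less, while still locating an event.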

The cable industry now has a unique opportunity to lead in shaping sensing frameworks and driving global standards.

Join the Sensing Revolution

DFOS is more than a technical innovation; it’s a strategic asset for cable operators. By transforming fiber into a sensing platform, the industry can unlock new capabilities in resilience, intelligence and environmental awareness.

CableLabs invites operators, vendors and researchers to collaborate on field trials, standards development and commercialization strategies. Whether you’re exploring sensing-as-a-service models or integrating AI-driven analytics, now is the time to engage. Reach out to us, Dr. Steve Jia and Dr. Karthik Choutagunta, to get started.

The future of cable isn’t just about faster speeds. It’s about smarter, more intelligent networks that anticipate, adapt and protect. CableLabs’ vision is to transform connectivity into a platform for innovation, where networks do more than transmit data: They sense, learn and respond in real time.