How 3GPP Is Fostering Network Transformation for Edge Compute

Edge computing is expected to grow tremendously in the near term—between 40 and 70 percent by 2028. In the short term, cloud-native applications deployed closer to client devices will be used to solve edge use cases. In the long term, edge computing infrastructure demand will be driven by edge-native use cases—such as augmented reality (AR)/extended reality (XR), autonomous vehicles and vehicle-to-everything (V2X) communications—that can only function when edge computing infrastructure capabilities are available.

Edge computing applications need last-mile networks that can support stringent requirements for end-to-end network latency, jitter, bandwidth, application-specific quality of service (QoS), reliability and availability. Challenges in edge networking go beyond latency and jitter. The network should support local breakout, dynamic insertion of data network attachment points and dynamic traffic steering.

Solving these challenges is important because edge computing will be a key driver in transforming mobile network operator (MNO) and multiple system operator (MSO) networks. Edge computing applications will gain a foothold in markets—including manufacturing, health care and retail—as well as benefit residential and mobile customers through smart home technologies, streaming services and content delivery, and virtual reality (VR) and AR.

Because last-mile networks are owned by communication service providers (CSPs) like MNOs and MSOs, these operators are well suited to play an essential role in edge compute infrastructure. They also own a lot of real estate and infrastructure, allowing them to host edge compute servers closer to the end user. Collaboration among hyperscalers, CSPs, edge service providers and edge application developers is essential for wider adoption of edge computing.

The 3rd Generation Partnership Project (3GPP) and partner organizations such as ETSI and GSMA are developing standards that define network architecture and edge compute application architecture so that vendors can develop interoperable edge infrastructure and applications.

Edge-Compute Infrastructure Transformation

Transforming the applications and CSP infrastructure to support edge compute use cases can happen in two distinct stages based on short-term and long-term use cases. The first stage involves upgrading existing provider networks to be edge compute–ready so that cloud-native applications can support edge compute use cases without any edge infrastructure awareness. Edge-unaware applications are cloud-native applications that need to support an edge compute use case but aren’t capable of interfacing with edge compute infrastructure.

The second stage involves developing brand-new edge compute–aware applications capable of solving edge use cases. Edge-aware applications can utilize edge compute infrastructure features and APIs to optimize the connectivity between clients and servers so that they can support stringent service requirements of edge compute use cases.

In this blog post, the first in a two-part series, I’ll discuss 3GPP initiatives that support CSP network transformation, which will enable cloud-native, edge-unaware applications to support edge compute use cases. In a later blog post, I’ll talk about the 3GPP SA6 edge app architecture, which defines a framework for edge-aware applications to support edge-native use cases such as AR/VR and V2X.

5G System Transformation for Edge Compute

One of the main requirements of edge compute applications is to connect the client device to the optimal application server instance, meaning one that satisfies the application's latency requirements. An edge application typically will be hosted on a distributed cluster of application servers that are deployed in multiple data centers connected to a CSP network.

The 3GPP SA2 working group has defined procedures to discover the optimal server. The procedures involve resolving the application server’s fully qualified domain name (FQDN) to a server instance that is closest to the client device. SA2 introduced a new network function and procedures that intercept the Domain Name System (DNS) requests sent by the client for server FQDN resolution. This allows the network to redirect the DNS request to a data network—or, more specifically, a DNS server within the data network—that hosts the application server closest to the client. The Session Management Function (SMF) within the CSP core network plays a critical role in application server discovery. The SMF identifies the data network and local DNS server that resolves the application server FQDN based on the client device location.

SA2 introduced a new network function called Edge Application Server Discovery Function (EASDF), which is configured as a DNS resolver in the user equipment (UE). The EASDF sends a copy of DNS messages to the SMF for it to select a data network. To perform DNS server selection or data network selection, the SMF needs to learn all the Edge Application Servers (EASs) deployed in the data networks. The EAS information includes data network identification, EAS FQDNs, EAS IP addresses and the DNS server. CSPs push EAS deployment information to the network using Network Exposure Function (NEF) northbound APIs, whenever they deploy an EAS.
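The selection step described above can be sketched in a few lines. This is only an illustration under stated assumptions: the record fields mirror the EAS information listed in this post, but the `served_areas` field, the function name `select_dns_server` and the first-match rule are hypothetical and are not taken from the 3GPP specifications.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Optional, Tuple


@dataclass(frozen=True)
class EasDeployment:
    """EAS deployment record with the fields listed in the post."""
    data_network_id: str          # data network identification
    eas_fqdns: Tuple[str, ...]    # FQDNs of the EASs in this data network
    eas_ips: Tuple[str, ...]      # IP addresses of those EASs
    dns_server: str               # DNS server inside the data network
    served_areas: FrozenSet[str]  # ASSUMPTION: UE locations closest to this site


def select_dns_server(
    deployments: Iterable[EasDeployment], fqdn: str, ue_location: str
) -> Optional[str]:
    """Return the DNS server of the first data network that hosts `fqdn`
    and serves `ue_location`, or None if no deployment matches."""
    for dep in deployments:
        if fqdn in dep.eas_fqdns and ue_location in dep.served_areas:
            return dep.dns_server
    return None


# Illustrative data only; the names and addresses are made up.
DEPLOYMENTS = [
    EasDeployment("dn-east", ("ar.example.com",), ("192.0.2.10",),
                  "192.0.2.53", frozenset({"area-1", "area-2"})),
    EasDeployment("dn-west", ("ar.example.com",), ("198.51.100.10",),
                  "198.51.100.53", frozenset({"area-3"})),
]

print(select_dns_server(DEPLOYMENTS, "ar.example.com", "area-3"))
```

The same FQDN is deployed in both data networks, so the client location alone decides which local DNS server answers the query.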

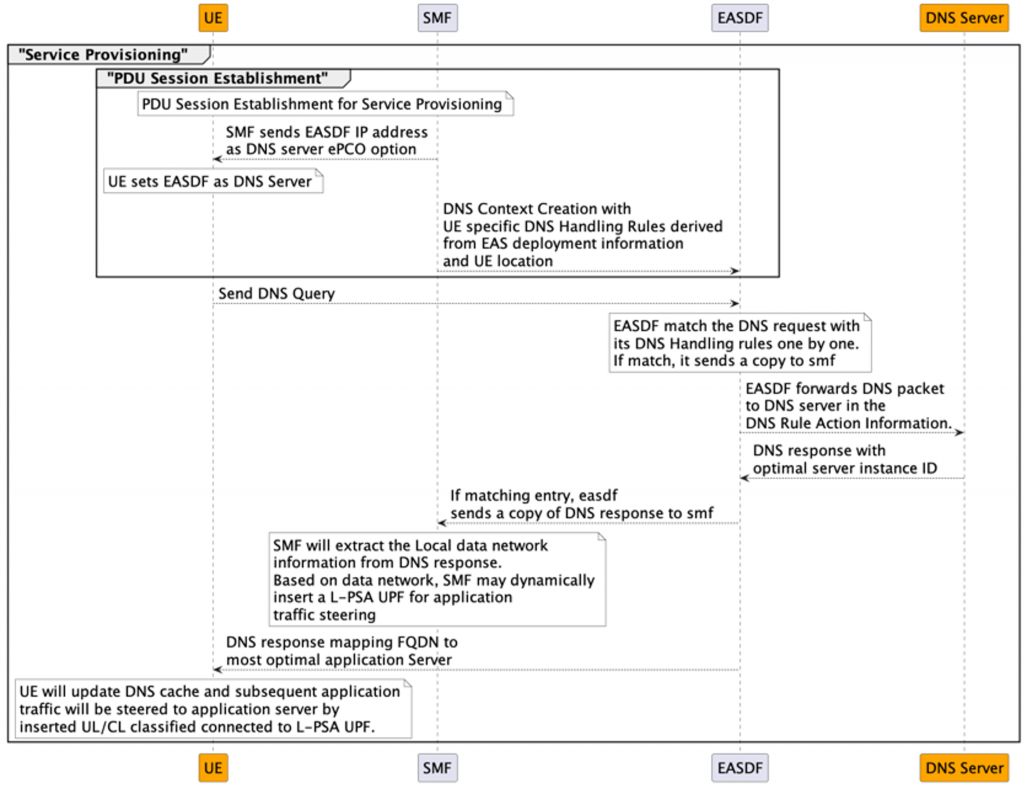

Edge Service Provisioning

The edge service provisioning process includes the discovery of an optimal edge server. SA2 modified the Protocol Data Unit (PDU) session establishment procedure between the client device and the SMF to enable edge service provisioning. During PDU session establishment, the SMF configures the EASDF as the DNS resolver on the client device through a protocol configuration option. The SMF also creates a client device–specific DNS context in the EASDF. This context includes DNS rules that tell the EASDF which of the client device’s DNS queries to copy to the SMF.

The SMF selects the DNS server to resolve the FQDN based on the client device location. The SMF informs the EASDF of the selected DNS server, and the EASDF forwards the cached request to that DNS server. Similarly, the EASDF will match the DNS response from the DNS server against the DNS rules, and it may send a copy of the DNS response to the SMF. If needed, the SMF may dynamically insert a local PDU session anchor and an uplink classifier (UL CL) to steer traffic to the data network that hosts the application server.
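A simplified model of the query path can make the message flow easier to follow. In this sketch the class `EasdfSketch`, the `DnsContext` fields and the `ask_smf` callback are hypothetical stand-ins, no real DNS traffic is sent, and the fallback resolver for non-matching queries is an assumption rather than something this post specifies.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set, Tuple


@dataclass
class DnsContext:
    """Per-device DNS context that the SMF installs in the EASDF (simplified)."""
    fqdn_rules: Set[str]  # queries for these FQDNs are reported to the SMF
    default_dns: str      # ASSUMPTION: resolver used when no rule matches


class EasdfSketch:
    def __init__(self, ask_smf: Callable[[str, str], str]) -> None:
        self._ask_smf = ask_smf  # stands in for the EASDF-to-SMF report
        self._contexts: Dict[str, DnsContext] = {}
        self.forwarded: List[Tuple[str, str, str]] = []  # (ue, fqdn, dns server)

    def install_context(self, ue_id: str, context: DnsContext) -> None:
        """Called on behalf of the SMF during PDU session establishment."""
        self._contexts[ue_id] = context

    def handle_query(self, ue_id: str, fqdn: str) -> str:
        """Pick the DNS server for a query and record where it was forwarded."""
        context = self._contexts[ue_id]
        if fqdn in context.fqdn_rules:
            # Matching query: the SMF chooses the DNS server by device location.
            dns_server = self._ask_smf(ue_id, fqdn)
        else:
            dns_server = context.default_dns
        self.forwarded.append((ue_id, fqdn, dns_server))
        return dns_server


def smf_stub(ue_id: str, fqdn: str) -> str:
    """Pretend SMF that always selects the same local DNS server."""
    return "192.0.2.53"


easdf = EasdfSketch(smf_stub)
easdf.install_context("ue-1", DnsContext({"ar.example.com"}, "203.0.113.53"))
print(easdf.handle_query("ue-1", "ar.example.com"))    # rule matches
print(easdf.handle_query("ue-1", "news.example.org"))  # no rule matches
```

Keeping the selection behind a callback reflects the division of labor in the text: the EASDF only matches and forwards, while the SMF owns the location-based decision.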

Edge Relocation

Edge relocation refers to the process of selecting and connecting to a new application server when the current application server becomes suboptimal. This happens if the client device moves to a new location or if available compute and network resources become too low.

The goal of this process is to complete the server transition with minimal service interruption. The edge relocation process within 5GS relies on 5GS service continuity procedures that are defined for UE mobility scenarios. Procedures for 5GS SA2 edge relocation can therefore support only the client device mobility scenario, not relocation triggered by a shortage of application server compute or network resources.

3GPP 5GS defines three session and service continuity (SSC) modes, and 5GS can support edge relocation in SSC Mode-2 and SSC Mode-3 because a new PDU session is established in both these modes. Whenever a new PDU session is established, the client device will initiate a DNS query to resolve the application server FQDN. The network will discover the optimal server based on the client device location, as described above in the edge service provisioning procedure.
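The relationship between the three modes and edge relocation can be written down compactly. The sketch below is illustrative only: the enum and function names are hypothetical, and the step lists are a simplified ordering (Mode-2 releases the old session before the new one is set up, Mode-3 sets up the new session first), not a full rendering of the 3GPP procedures.

```python
from enum import Enum
from typing import List


class SscMode(Enum):
    MODE_1 = 1  # the network keeps the same PDU session anchor as the UE moves
    MODE_2 = 2  # the old session is released, then a new one is established
    MODE_3 = 3  # a new session is established before the old one is released


def supports_edge_relocation(mode: SscMode) -> bool:
    """Relocation needs a new PDU session, which triggers a fresh DNS query."""
    return mode in (SscMode.MODE_2, SscMode.MODE_3)


def relocation_steps(mode: SscMode) -> List[str]:
    """Simplified step order for each mode; empty when relocation is unsupported."""
    if mode is SscMode.MODE_2:
        return ["release old PDU session",
                "establish new PDU session",
                "DNS query for EAS FQDN"]
    if mode is SscMode.MODE_3:
        return ["establish new PDU session",
                "DNS query for EAS FQDN",
                "release old PDU session"]
    return []


for mode in SscMode:
    print(mode.name, supports_edge_relocation(mode), relocation_steps(mode))
```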

What's Next?

SA2 network architecture defines extensions to the 3GPP 5G system architecture that enable edge-unaware applications to support edge compute use cases. These extensions help applications discover the optimal application server based on the client device location by resolving the server FQDN to the application server closest to the client. But optimal application server discovery based on other KPIs (such as compute resources, network resources and capabilities) requires edge-native applications. The 3GPP SA6 working group defined an edge application framework that matches application client KPIs with EAS capabilities to select the optimal EAS.

Transformation of the CSP network is required to support edge-native use cases like AR/VR. The network transformation happens gradually by supporting edge-unaware applications initially and, later, supporting edge-native applications that require more stringent network KPIs.

To read more about how 3GPP supports edge compute transformation, see CableLabs’ recent technical brief. Please note that you must have a CableLabs account to view or download the brief.

Achieve Seamless Access with Converged Access Edge Controller (CAEC)

Imagine a world in which end users no longer worry about which network they’re connected to because the optimal connectivity for any given moment is automatically provided. This connectivity consists of one or more seamlessly combined network connections, intelligently customized according to factors such as application requirements, user priority and network status. CableLabs believes convergence will be the driving force in making this world a reality and is working on solutions to enable it. Converged Access Edge Controller (CAEC) is one of those solutions.

How Does CAEC Work?

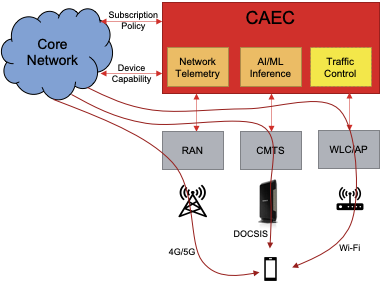

CAEC facilitates the converged use of HFC, Wi-Fi and mobile access technologies to optimize the use of network assets to deliver a seamless user experience. The controller dynamically switches, steers, or splits subscribers' data traffic across the available access links based on subscribers' device capabilities, subscription profile and real-time telemetry data of each access link, such as utilization and link quality.

For example, CAEC can be programmed to optimize the transport cost without degrading the perceived user experience. Households closer to the mobile site could primarily be served via the wireless access link; CAEC will transparently switch them to the HFC access link in the event of temporary congestion on that site to avoid degradation in the user experience. Similarly, households farther away from the mobile site could primarily be served via the HFC network, and CAEC could split the traffic between HFC and wireless at the onset of congestion in the HFC infrastructure. In another use case, the CAEC algorithm can be optimized to provide an instantaneous bandwidth boost by combining the available accesses based on device and subscription policies.
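The household example can be expressed as a small decision function. This is a sketch under assumptions, not CAEC's actual algorithm: the 0.8 congestion threshold, the even 50/50 split and all names are hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import Dict

CONGESTION_THRESHOLD = 0.8  # ASSUMPTION: utilization above this counts as congested


@dataclass
class LinkTelemetry:
    utilization: float  # fraction of link capacity in use, 0.0 to 1.0


def decide_traffic_shares(
    near_mobile_site: bool, hfc: LinkTelemetry, wireless: LinkTelemetry
) -> Dict[str, float]:
    """Return the share of a household's traffic to send over each access link."""
    if near_mobile_site:
        # Primary link is wireless; switch to HFC while the mobile site is congested.
        if wireless.utilization >= CONGESTION_THRESHOLD:
            return {"hfc": 1.0, "wireless": 0.0}
        return {"hfc": 0.0, "wireless": 1.0}
    # Primary link is HFC; split across both links when HFC becomes congested.
    if hfc.utilization >= CONGESTION_THRESHOLD:
        return {"hfc": 0.5, "wireless": 0.5}  # ASSUMPTION: even split
    return {"hfc": 1.0, "wireless": 0.0}


# A far household during HFC congestion gets its traffic split.
print(decide_traffic_shares(False, LinkTelemetry(0.9), LinkTelemetry(0.3)))
```

A real controller would also weigh device capabilities and the subscription profile, which this sketch leaves out to keep the switch and split cases visible.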

CAEC’s Modular and Extensible Architecture

CAEC offers powerful, near real-time traffic bonding, steering and splitting capabilities across multiple access technologies managed by a single operator (i.e., a multiple system operator). CAEC has a microservices-based architecture consisting of three main services: network telemetry, AI/ML inference and traffic control. CAEC’s modular design and open APIs allow it to easily interoperate with and complement other network services. For example, CAEC can be implemented as part of standardized network services such as O-RAN Alliance’s RAN Intelligent Controller (RIC) and 3GPP’s Network Data Analytics Function (NWDAF). The CAEC platform is extensible to run customized machine learning models that identify patterns in network behavior that are often tough for operators to spot. The platform also provides operators the flexibility to develop and implement their own traffic control algorithms.
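One way to picture the three services and the extension points is as a pipeline of replaceable functions. Everything in this sketch is hypothetical (the type aliases, `run_cycle`, and the three example stages); it does not reflect CAEC's real APIs and only shows how an operator-supplied model or control algorithm could be swapped in.

```python
from typing import Callable, Dict

Utilization = Dict[str, float]  # access link name -> utilization in [0.0, 1.0]
Weights = Dict[str, float]      # access link name -> share of traffic


def run_cycle(
    collect: Callable[[], Utilization],
    infer: Callable[[Utilization], Utilization],
    control: Callable[[Utilization], Weights],
) -> Weights:
    """One pass through telemetry, inference and traffic control."""
    return control(infer(collect()))


def collect_fixed() -> Utilization:
    """Stand-in for the network telemetry service."""
    return {"hfc": 0.75, "wireless": 0.25}


def infer_passthrough(measured: Utilization) -> Utilization:
    """Stand-in for an ML model: predicts that current load persists."""
    return dict(measured)


def control_by_headroom(predicted: Utilization) -> Weights:
    """Example control algorithm: weight each link by its spare capacity."""
    headroom = {link: max(0.0, 1.0 - load) for link, load in predicted.items()}
    total = sum(headroom.values())
    if total == 0:
        # No spare capacity anywhere: fall back to an even split.
        return {link: 1.0 / len(headroom) for link in headroom}
    return {link: room / total for link, room in headroom.items()}


print(run_cycle(collect_fixed, infer_passthrough, control_by_headroom))
```

Because each stage is just a callable, replacing `infer_passthrough` with a trained model or `control_by_headroom` with an operator policy does not touch the other two stages.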

There are alternative solutions to provide converged access that aggregate mobile and Wi-Fi traffic on capable client devices. For example, 3GPP has defined an access aggregation specification called Access Traffic Steering, Switching & Splitting (ATSSS). Additionally, several companies are offering cloud-based solutions similar to ATSSS. CAEC can complement these solutions by providing near real-time directions to steer, switch or split the traffic based on a combination of AI/ML models and network intelligence. CAEC can also provide valuable insights for proactive network maintenance based on real-time statistics analysis and pattern identification within the network.

Learn More

If you need more information or have any further questions, please feel free to reach out to Arun Yerra – Principal Mobile Architect, CAEC Project Lead (a.yerra@cablelabs.com).