Wi-Fi vs. Duty Cycled LTE-U: In-Home Testing Reveals Coexistence Challenges

Rob Alderfer, VP Technology Policy, CableLabs, and Nadia Yoza-Mitsuishi, Wireless Architect Intern, CableLabs, also contributed to this article.

In our last blog on Wi-Fi / LTE coexistence, we laid out the dangers of the apparent decision by a few large carriers to go forward with carrier-scale deployment of a non-standard form of unlicensed LTE in shared spectrum. This time, we will review some of the testing CableLabs has conducted recently to explain why we are worried. We covered this material at a recent Wi-Fi Alliance workshop on LTE-U coexistence, along with the broader roadmap of research that we see as needed to get to solutions that are broadly supported.

Before we begin, let’s recap. There are two approaches to enabling LTE in unlicensed spectrum: LAA-LTE and LTE-U. LAA is the version that the cellular industry standards body (3GPP) has been working on for the past year, and its coexistence measures appear to be on a path similar to what is used in Wi-Fi: “listen before talk,” or LBT. In contrast, LTE-U, the technology being developed for the US market, is taking a wholly different approach. LTE-U has not been submitted for consideration to a collaborative standards-setting body like 3GPP; instead, it is being developed by a small group of companies through a closed process. LTE-U uses a carrier-controlled on/off switch that “duty cycles” the LTE signal. It turns on to transmit for some time determined by the wireless carrier, then it switches off (again, at the discretion of the carrier) to allow other technologies such as Wi-Fi the opportunity to access the channel.
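To make the duty-cycling idea concrete, here is a minimal Python sketch of a fixed on/off schedule. It is a simplification for illustration, not the LTE-U Forum's algorithm: the function names, the 100 ms period, and the fixed (non-adaptive) schedule are all assumptions of ours.

```python
def lte_u_is_on(t_ms: float, period_ms: float, duty_cycle: float) -> bool:
    """Return True if a duty-cycled transmitter is on at time t_ms.

    Assumes a fixed schedule: each period starts with the 'on' portion,
    which lasts duty_cycle * period_ms, followed by the 'off' portion.
    """
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    if period_ms <= 0:
        raise ValueError("period_ms must be positive")
    on_time_ms = duty_cycle * period_ms
    return (t_ms % period_ms) < on_time_ms


def on_fraction(period_ms: float, duty_cycle: float,
                step_ms: float = 1.0, cycles: int = 10) -> float:
    """Sample the schedule and return the fraction of samples that are 'on'."""
    samples = int(cycles * period_ms / step_ms)
    on_samples = sum(
        lte_u_is_on(i * step_ms, period_ms, duty_cycle) for i in range(samples)
    )
    return on_samples / samples


# Hypothetical 100 ms period with a 50% duty cycle.
print(lte_u_is_on(10, period_ms=100, duty_cycle=0.5))   # inside the 'on' window
print(lte_u_is_on(60, period_ms=100, duty_cycle=0.5))   # inside the 'off' window
print(on_fraction(period_ms=100, duty_cycle=0.5))
```

Note that the schedule in this sketch depends only on the clock. Nothing in it checks whether another device is already using the channel, which is the key difference from a listen-before-talk approach.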

CableLabs has tested duty-cycled LTE-U in our lab, our office environment, and most recently in a residential environment (test house) to research how technologies are experienced by consumers. Our research to date has raised significant concerns about the impact LTE-U will have on Wi-Fi services. Today we will review our most recent research, which was conducted in our test house.

How We Did Our In-Home Tests

Proponents of LTE-U claim that the technology will be ‘more friendly to Wi-Fi than Wi-Fi is to itself.’ So we decided to test that statement, using our test house to approximate a real-world environment, with technology that would comply with the LTE-U Forum Coexistence Specification v1.2.

(Note that just this week, the LTE-U Forum released a new version of their Coexistence Specification. We’re still looking at it along with the rest of the Wi-Fi community, since it is again a product of their closed process, but we don’t think it changes much for our purposes here. And judging by the discussion at this week’s Wi-Fi Alliance workshop, much work remains to get to reliable coexistence.)

To do our tests, we went to Best Buy and bought two identical off-the-shelf Wi-Fi APs, and we have an LTE signal generator that we can program to cycle the signal on and off – “duty cycling,” in the parlance of the LTE-U Forum.

We first established a baseline of how fair Wi-Fi is to itself. We had our off-the-shelf APs send full buffer downlink traffic (i.e., an AP to a Wi-Fi device), and took throughput, latency and packet loss measurements. We found that the two APs shared the spectrum about equally – throughput was roughly 50:50, for instance.

Next, we replaced one of the APs with LTE-U and repeated our tests, using various duty cycle configurations (LTE ‘on’ times). To make things simpler, we made sure that all of our measurements were done at the relatively strong signal level (-62 dBm) that is used by the LTE-U Forum as the level for detecting Wi-Fi.

What Our Research Found

If our Wi-Fi AP performed better in the presence of LTE-U, that would validate the claims made about it being a better neighbor.

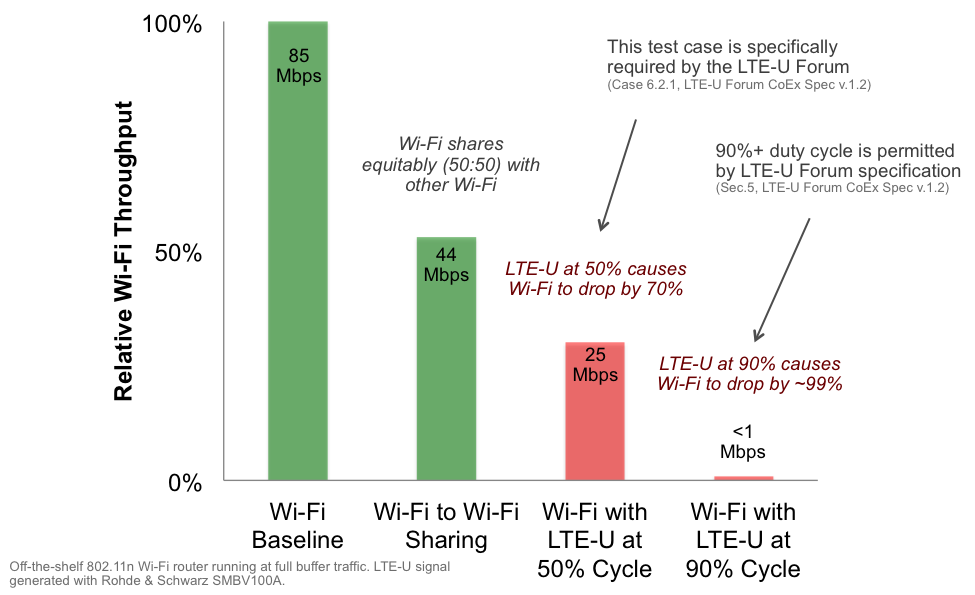

Unfortunately, we did not find that. Quite the opposite, in fact. Our results showed that Wi-Fi performance suffered disproportionately in the presence of LTE-U. Wi-Fi throughput (“speed”) was degraded by 70% when LTE-U was on only 50% of the time, for instance. And more aggressive LTE-U configurations (longer ‘on’ times) would do even more damage, as seen in Figure 1.

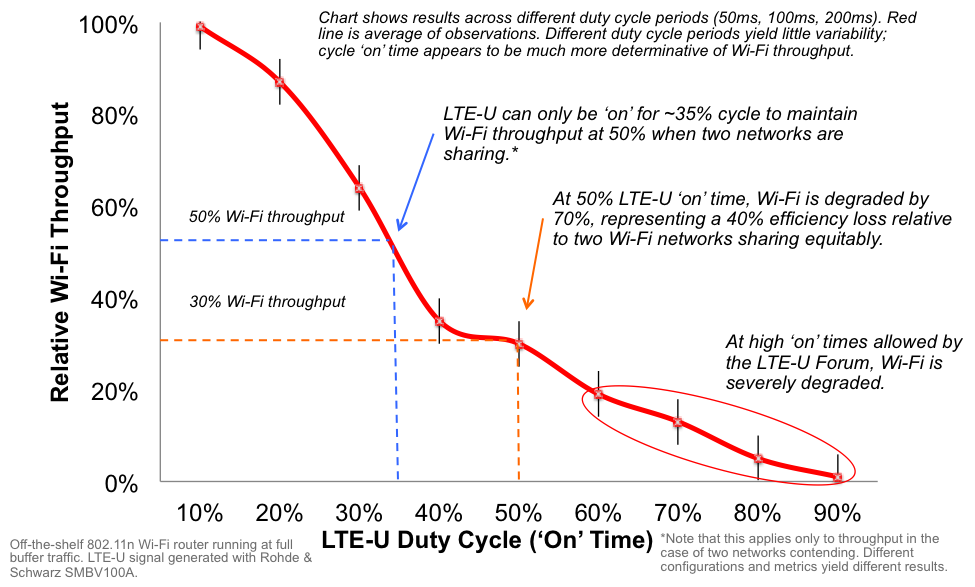

Why does LTE-U do disproportionate damage to Wi-Fi? The primary reason is that it interrupts Wi-Fi mid-stream, instead of waiting its turn. This causes errors in Wi-Fi transmissions, ratcheting down its performance. We ran tests across a range of duty cycles to explore this effect. In our test case of two networks sharing with each other, to maintain Wi-Fi at 50% throughput, LTE-U could be on for no more than 35% of the time, as seen in Figure 2.
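The arithmetic behind “disproportionate” can be sketched in a few lines of Python. The sketch assumes that both reported figures are measured against the same baseline (Wi-Fi throughput with no LTE-U present) and that proportionate sharing would leave Wi-Fi with the fraction of throughput equal to LTE-U's ‘off’ time. The function and variable names are ours, for illustration only.

```python
def proportional_share(lte_u_duty_cycle: float) -> float:
    """Wi-Fi throughput fraction expected if sharing were proportionate.

    Assumption: Wi-Fi would keep the fraction of time LTE-U is off.
    """
    return 1.0 - lte_u_duty_cycle


def shortfall(lte_u_duty_cycle: float, wifi_retained: float) -> float:
    """Gap between the proportionate expectation and the measured result."""
    return proportional_share(lte_u_duty_cycle) - wifi_retained


# (LTE-U duty cycle, fraction of Wi-Fi throughput retained), as reported above:
# 35% on-time kept Wi-Fi at 50%; 50% on-time degraded Wi-Fi by 70% (30% kept).
REPORTED = [(0.35, 0.50), (0.50, 0.30)]

for duty, retained in REPORTED:
    print(
        f"LTE-U on {duty:.0%}: proportionate share {proportional_share(duty):.0%}, "
        f"measured {retained:.0%}, shortfall {shortfall(duty, retained):.0%}"
    )
```

Under these assumptions, Wi-Fi falls about 15 percentage points short of a proportionate share at a 35% duty cycle and about 20 points short at 50%.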

Does this mean that Wi-Fi would perform acceptably if LTE-U limited its duty cycle to 35%? Unfortunately, it is not that simple. Our test case is admittedly limited: We are showing only two networks sharing the spectrum here, but in reality there can sometimes be hundreds of Wi-Fi APs within range of each other. If LTE-U took 35% of the airtime when sharing with 5 Wi-Fi networks, or 50 for that matter, the Wi-Fi experience would surely suffer significantly. And the problem gets worse if there are multiple LTE-U networks as well, which seems likely.
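A rough back-of-the-envelope calculation shows why a fixed 35% duty cycle does not scale. The Python sketch below is idealized: it assumes the Wi-Fi APs split the remaining airtime equally and it ignores collisions, protocol overhead, and the mid-stream interruptions described above, so real results would likely be worse. The function names are hypothetical.

```python
def wifi_airtime_with_fixed_duty(n_wifi: int, lte_u_duty: float) -> float:
    """Per-AP airtime if LTE-U keeps a fixed duty cycle and the Wi-Fi APs
    split the remaining airtime equally (idealized assumption)."""
    if n_wifi < 1:
        raise ValueError("n_wifi must be at least 1")
    return (1.0 - lte_u_duty) / n_wifi


def airtime_with_equal_sharing(n_wifi: int) -> float:
    """Per-network airtime if all n_wifi + 1 networks shared equally."""
    if n_wifi < 1:
        raise ValueError("n_wifi must be at least 1")
    return 1.0 / (n_wifi + 1)


LTE_U_DUTY = 0.35  # the duty cycle discussed in the text

for n in (1, 5, 50):
    fixed = wifi_airtime_with_fixed_duty(n, LTE_U_DUTY)
    equal = airtime_with_equal_sharing(n)
    print(
        f"{n:>2} Wi-Fi APs: each gets {fixed:.1%} with LTE-U fixed at "
        f"{LTE_U_DUTY:.0%}, versus {equal:.1%} under equal sharing"
    )
```

With 5 Wi-Fi APs in this idealized model, LTE-U holds 35% of the airtime while each AP gets 13%, compared with roughly 16.7% each if all six networks shared equally.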

The effect that LTE-U would have across a range of real-world circumstances is, frankly, unknown. It has not been researched – by anyone. What we do know, based on our work here, is that the 50% LTE-U ‘on’ time considered by the LTE-U Forum when two networks are sharing (see Section 6.2.1 of their coexistence spec) does not yield proportionate throughput results.

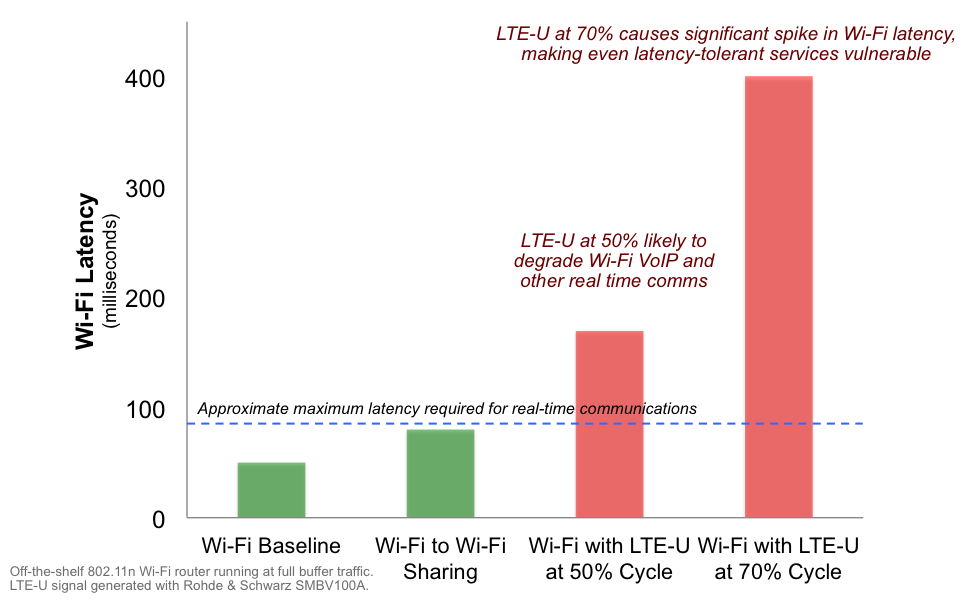

That’s the effect of LTE-U on throughput, which is important. But equally important is latency, or the amount of delay on the network, which can have a dramatic impact on real-time applications like voice and video conferencing. We tested Wi-Fi latency across different duty cycles, and the results are shown in Figure 3. What’s important to note is that while the two Wi-Fi APs we tested can coexist while providing smooth operation of real-time communications, that doesn’t appear to be the case if LTE-U is present. Even if LTE-U is on only 50% of the time, it degrades real-time Wi-Fi communications in a way that would likely be irritating to Wi-Fi users. And if LTE-U is on 70% of the time, then latency reaches levels where even latency-tolerant applications, like web page loading, are likely to become irritating.
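One way to build intuition for why latency climbs so quickly with duty cycle is a toy model, sketched below in Python. It is not how we measured latency, and it leaves out retransmissions, rate adaptation, and contention between Wi-Fi devices. It assumes only that a packet arriving at a random moment while LTE-U is on must wait until the ‘on’ period ends. The 100 ms period and the function names are hypothetical.

```python
def mean_blocking_wait_ms(period_ms: float, duty_cycle: float) -> float:
    """Average wait caused by the 'on' period, over uniformly random arrivals.

    A packet lands in the 'on' window with probability duty_cycle and then
    waits, on average, half of the 'on' time. Packets that land in the 'off'
    window wait zero in this toy model.
    """
    on_time_ms = duty_cycle * period_ms
    return duty_cycle * (on_time_ms / 2.0)


def worst_case_wait_ms(period_ms: float, duty_cycle: float) -> float:
    """Wait for a packet that arrives just as the 'on' period begins."""
    return duty_cycle * period_ms


PERIOD_MS = 100.0  # hypothetical cycle length, chosen only for illustration

for duty in (0.3, 0.5, 0.7):
    print(
        f"duty {duty:.0%}: mean added wait "
        f"{mean_blocking_wait_ms(PERIOD_MS, duty):.1f} ms, worst case "
        f"{worst_case_wait_ms(PERIOD_MS, duty):.0f} ms"
    )
```

In this model the mean added wait grows with the square of the duty cycle, so going from 50% to 70% on-time roughly doubles it. That is consistent in direction with the measured trend, though the measured numbers depend on far more than this sketch captures.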

So, we have seen in our research that LTE-U causes disproportionate harm to Wi-Fi, even when it is configured to share roughly 50:50 in time – which is not a given, as we have noted before. That’s because LTE-U interrupts Wi-Fi signals instead of waiting its turn through the listen-before-talk approach that Wi-Fi uses. We discussed the importance of using listen-before-talk in our last blog, and now you can see why we think it is important for LTE-U.

This research shows that LTE-U is not, in fact, a better neighbor to Wi-Fi than Wi-Fi is to itself, as its proponents claim. What should we make of these competing results? Is one claim right, and the other wrong? It’s not really that simple – the answer depends on how LTE-U is configured and deployed, and what coexistence features it actually adopts.

This highlights the need for the open and collaborative R&D that we have long been urging, so that we can find solutions that actually work for everyone. That has been happening with LAA, the 3GPP standard form of unlicensed LTE, where there is room for cautious optimism on its ability to coexist well with Wi-Fi. Hopefully LTE-U proponents will move toward actual collaboration.

Jennifer Andreoli-Fang is a Principal Architect in the Network Technologies group at CableLabs.
