
Adaptive Bitrate and MPEG-DASH

The basic promise of streaming video is that an entire video file does not need to be downloaded before it can be viewed. Small chunks of media are fetched and played back as they arrive. The one caveat is that the media must be received at least as fast as the player consumes it. Furthermore, all things being equal, the higher the quality of a particular piece of content, the more bytes it occupies on disk. So it holds true that streaming higher quality video requires a faster network connection. But all things are not equal when it comes to the network speeds seen by the multitude of connected devices in the world today.

The introduction of Adaptive Bitrate (ABR) streaming technology has revolutionized the delivery of streaming media. There are two fundamental ideas behind ABR:

- Multiple copies of the content at various quality levels are stored on the server.

- The client device detects its current network conditions and requests lower quality content when network speeds are slower and higher quality content when network speeds are faster.

These principles are quite simple, but there are many technical challenges involved in producing a functional design for an ABR system. First, the media segments on the server must be created in such a way that the client application can switch between higher and lower quality versions at any time without a disruptive change in the presentation (e.g. video “jumps” or audio “clicks” and “pops”). Second, there must be a way for the client to “discover” the characteristics of the ABR content so that it knows which quality choices are available. And finally, the client itself must be implemented so that it can intelligently detect changes in network speed and, when appropriate, switch to a different quality stream.
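The client-side decision can be illustrated with a minimal sketch. It assumes the player already has a throughput estimate in bits per second and simply picks the highest bitrate that fits within a safety margin. The function name `pick_bitrate`, the 0.8 safety factor, and the bitrate ladder are illustrative assumptions; real players typically also weigh buffer level and switching history.

```python
from typing import Sequence


def pick_bitrate(available_bps: Sequence[int],
                 measured_bps: float,
                 safety: float = 0.8) -> int:
    """Return the highest available bitrate that fits the throughput budget.

    `safety` (an assumed tuning value) leaves headroom so that small dips
    in network speed do not immediately stall playback. If nothing fits,
    fall back to the lowest bitrate on offer.
    """
    budget = measured_bps * safety
    candidates = [b for b in sorted(available_bps) if b <= budget]
    return candidates[-1] if candidates else min(available_bps)


# Hypothetical ladder of video bitrates, in bits per second.
ladder = [360_000, 620_000, 1_340_000, 2_500_000, 4_500_000]

print(pick_bitrate(ladder, 3_000_000))  # 1340000
```

With a measured 3 Mbps and a 20% margin, the budget is 2.4 Mbps, so the 2.5 Mbps stream is skipped in favor of the 1.34 Mbps one.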

Today, a large portion of video streaming over the internet uses one of several ABR formats. Apple’s HTTP Live Streaming (HLS) and MPEG’s Dynamic Adaptive Streaming over HTTP (DASH) are the predominant technologies. Adobe’s HTTP Dynamic Streaming (HDS) and Microsoft’s Smooth Streaming (MSS) were once quite popular, but have fallen out of favor recently. As the names suggest, most ABR technologies rely on HTTP as the network protocol for serving and accessing data, due to its near-ubiquitous support in servers and clients. All ABR technologies specify some sort of descriptive file or “manifest” which describes the locations, quality, and types of content available to the client.

Of all the ABR formats described previously, only MPEG-DASH was developed through an open, standards-based process in an effort to incorporate input from all industries and organizations that plan to deploy it. CableLabs, once again, played a critical role in representing the needs of our members during this process. We will focus on the details of MPEG-DASH technology for the remainder of the article.

MPEG-DASH

The MPEG-DASH specification (ISO/IEC 23009-1) was first published in early 2012 and has undergone several updates since. As in other formats, media segments are stored on a standard web server and downloaded using the HTTP or HTTPS protocols. While DASH is audio/video codec agnostic, there are profiles in the specification that indicate how media is to be segmented on the server for the ISOBMFF and MPEG-2 Transport Stream container formats. Additionally, both live and on-demand media types have been given special consideration.

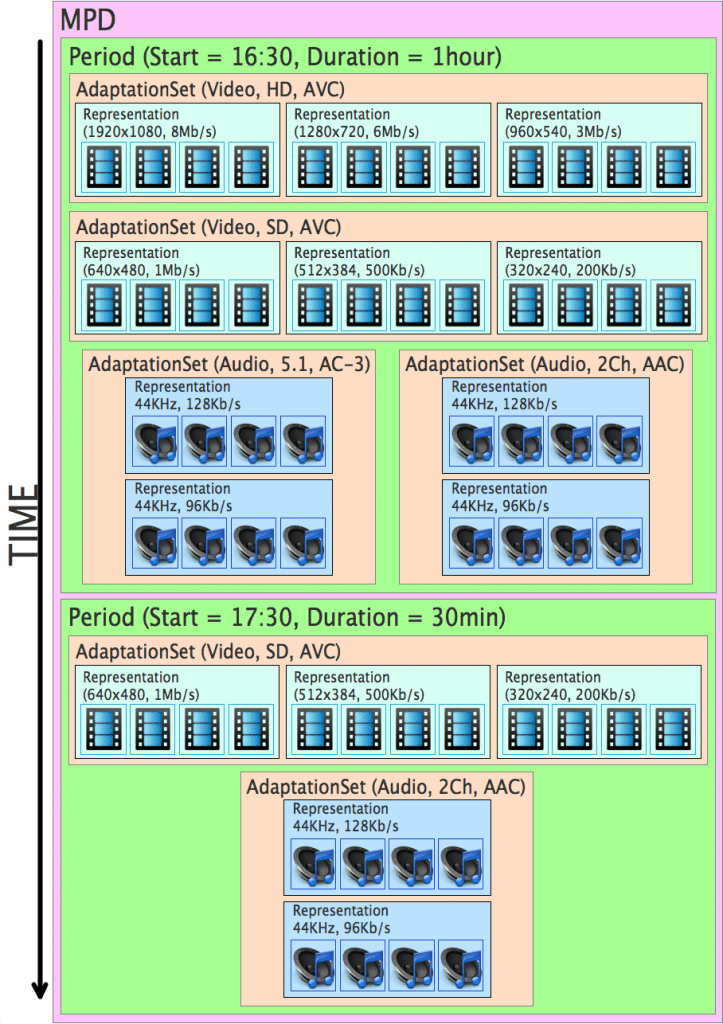

The DASH manifest file is known as a Media Presentation Description, or MPD. It is XML-based and contains all the information necessary for the client to download and present a given piece of content.

The root element in the manifest is named MPD. This contains high-level information about the content as a whole. MPDs can be “static” or “dynamic”. A static MPD is what would be used for a typical on-demand movie. The client can parse the manifest once and expect to have all the information it needs to present the content in its entirety. A “dynamic” MPD indicates that the contents of the manifest may change over time, such as would be expected for live or interactive content. For dynamic manifests, the MPD node indicates the maximum time the client should wait before it requests a new copy.
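As a rough illustration of the static/dynamic distinction, the sketch below reads the `type` attribute of the root element and, for a dynamic manifest, the `minimumUpdatePeriod` attribute. The sample manifest and the helper names are assumptions made for this example, and the duration parser only handles the simple `PT…H…M…S` form rather than every duration the XML schema allows.

```python
import re
import xml.etree.ElementTree as ET
from typing import Optional

# Hypothetical, heavily trimmed manifest for a live stream.
DYNAMIC_MPD = (
    '<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" '
    'type="dynamic" minimumUpdatePeriod="PT2S"/>'
)

_DURATION = re.compile(r"^PT(?:(\d+)H)?(?:(\d+)M)?(?:(\d+(?:\.\d+)?)S)?$")


def parse_duration(text: str) -> float:
    """Convert a simple 'PT#H#M#S' duration string to seconds."""
    match = _DURATION.match(text)
    if match is None:
        raise ValueError(f"unsupported duration: {text!r}")
    hours, minutes, seconds = match.groups()
    return int(hours or 0) * 3600 + int(minutes or 0) * 60 + float(seconds or 0)


def refresh_interval(mpd_xml: str) -> Optional[float]:
    """Return the manifest refresh interval in seconds, or None if static."""
    root = ET.fromstring(mpd_xml)
    # The type attribute defaults to "static" when it is absent.
    if root.get("type", "static") != "dynamic":
        return None
    period = root.get("minimumUpdatePeriod")
    return parse_duration(period) if period else None


print(refresh_interval(DYNAMIC_MPD))  # 2.0
```

A client built along these lines would parse a static manifest once, and re-fetch a dynamic one on a timer driven by the returned interval.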

Within the root MPD element are one or more Period elements. A Period represents a window of time in which media is expected to be presented. A Period can reference an absolute point in time, as would be the case for live media. Alternatively, it can simply indicate a duration for the media items contained within it. When multiple Periods are present in an MPD, it is not necessary to specify a start time for each Period in order for them to be played in the sequence in which they appear. Periods may even appear in a manifest before their associated media segments are installed on the server. This allows clients to prepare themselves for upcoming presentations.
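The sequencing rule can be sketched as simple arithmetic: when a Period has no explicit start, it begins where the previous one ends. The function below is an illustration under assumptions, not a full implementation of the specification's rules. It treats a first Period without a start as beginning at zero, and it works on plain `(start, duration)` tuples in seconds rather than on parsed XML.

```python
from typing import List, Optional, Sequence, Tuple

PeriodTiming = Tuple[Optional[float], Optional[float]]  # (start, duration)


def period_starts(periods: Sequence[PeriodTiming]) -> List[float]:
    """Resolve the start time of each Period, in seconds.

    An explicit start is used as-is. Otherwise the start is the previous
    Period's start plus its duration. The first Period is assumed to
    start at 0 when no start is given.
    """
    starts: List[float] = []
    prev_start: Optional[float] = None
    prev_duration: Optional[float] = None
    for index, (start, duration) in enumerate(periods):
        if start is None:
            if index == 0:
                start = 0.0
            elif prev_start is not None and prev_duration is not None:
                start = prev_start + prev_duration
            else:
                raise ValueError(f"cannot infer start of Period {index}")
        starts.append(start)
        prev_start, prev_duration = start, duration
    return starts


# Hypothetical presentation: a 30 s pre-roll, a 10 min feature, a 15 s post-roll.
print(period_starts([(None, 30.0), (None, 600.0), (None, 15.0)]))  # [0.0, 30.0, 630.0]
```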

Each Period contains one or more AdaptationSet elements. An AdaptationSet describes a single media element available for selection in the presentation. There may be one AdaptationSet for HD video and another one for SD video. Another reason to use multiple AdaptationSets is when there are multiple copies of the media that use different video codecs, which would enable playback on clients that only support one codec or the other. Additionally, clients may want to be able to select between multiple language audio tracks or between multiple video viewpoints. Each AdaptationSet contains attributes that allow the client to identify the type and format of media available in the set so that it can make appropriate choices regarding which to present.
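To make the selection step concrete, here is a small sketch of how a client might choose among AdaptationSets. The `AdaptationSet` dataclass is a simplified stand-in for the XML element, the codec strings and languages are sample values, and `choose` is a hypothetical helper: it filters by content type and supported codec family, then prefers a requested language when one is available.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Set


@dataclass
class AdaptationSet:
    """Simplified stand-in for the attributes a client inspects."""
    content_type: str            # "video" or "audio"
    codecs: str                  # e.g. "avc1.64001f"
    lang: Optional[str] = None   # e.g. "en"


def choose(sets: Sequence[AdaptationSet],
           content_type: str,
           supported_codecs: Set[str],
           preferred_lang: Optional[str] = None) -> Optional[AdaptationSet]:
    """Pick the first playable set, preferring `preferred_lang` if present."""
    usable = [
        s for s in sets
        if s.content_type == content_type
        # Compare only the codec family, i.e. the part before the first dot.
        and s.codecs.split(".")[0] in supported_codecs
    ]
    if preferred_lang is not None:
        for s in usable:
            if s.lang == preferred_lang:
                return s
    return usable[0] if usable else None


sets = [
    AdaptationSet("video", "avc1.64001f"),
    AdaptationSet("video", "hvc1.1.6.L93.B0"),
    AdaptationSet("audio", "mp4a.40.2", "en"),
    AdaptationSet("audio", "mp4a.40.2", "es"),
]

print(choose(sets, "audio", {"mp4a"}, preferred_lang="es").lang)  # es
```

A device that only decodes HEVC would pass `{"hvc1"}` for video and receive the second set, while an unsupported language falls back to the first usable audio set.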

The example content consists of the following audio and video files:

- audio_aac-lc_128k_dashinit.mp4

- audio_aac-lc_192k_dashinit.mp4

- video_512x288_h264-360k_dashinit.mp4

- video_704x396_h264-620k_dashinit.mp4

- video_896x504_h264-1340k_dashinit.mp4

- video_1280x720_h264-2500k_dashinit.mp4

- video_1920x1080_h264-4500k_dashinit.mp4

At the next level of the MPD is the Representation. Every AdaptationSet contains a Representation element for each quality level (bandwidth value) of media available for selection. Different video resolutions and bitrates may be available for selection, and the Representation element tells the client exactly how to find media segments for that quality level. There exist several different mechanisms to describe the exact duration and name of each media file in the Representation (SegmentTemplate, SegmentTimeline, etc.), but we won’t dive into that level of detail in this article.
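The Period, AdaptationSet, and Representation hierarchy can be walked with a few nested loops. The manifest in this sketch is a hand-written approximation, not the article's original MPD: the file names come from the example content, while the `id` and `bandwidth` values, the attribute choices, and the use of `BaseURL` to locate each file are assumptions for illustration.

```python
import xml.etree.ElementTree as ET
from typing import List, Optional, Tuple

NS = {"dash": "urn:mpeg:dash:schema:mpd:2011"}

# Hypothetical on-demand manifest, trimmed to three Representations.
MPD_XML = """
<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" type="static">
  <Period>
    <AdaptationSet mimeType="audio/mp4" lang="en">
      <Representation id="a1" bandwidth="128000">
        <BaseURL>audio_aac-lc_128k_dashinit.mp4</BaseURL>
      </Representation>
    </AdaptationSet>
    <AdaptationSet mimeType="video/mp4">
      <Representation id="v1" bandwidth="360000" width="512" height="288">
        <BaseURL>video_512x288_h264-360k_dashinit.mp4</BaseURL>
      </Representation>
      <Representation id="v2" bandwidth="2500000" width="1280" height="720">
        <BaseURL>video_1280x720_h264-2500k_dashinit.mp4</BaseURL>
      </Representation>
    </AdaptationSet>
  </Period>
</MPD>
"""


def list_representations(mpd_xml: str) -> List[Tuple[Optional[str], int, Optional[str]]]:
    """Return (mimeType, bandwidth, BaseURL) for every Representation."""
    root = ET.fromstring(mpd_xml.strip())
    found = []
    for period in root.findall("dash:Period", NS):
        for adaptation_set in period.findall("dash:AdaptationSet", NS):
            for rep in adaptation_set.findall("dash:Representation", NS):
                found.append((
                    adaptation_set.get("mimeType"),
                    int(rep.get("bandwidth")),
                    rep.findtext("dash:BaseURL", namespaces=NS),
                ))
    return found


for mime_type, bandwidth, url in list_representations(MPD_XML):
    print(mime_type, bandwidth, url)
```

The bandwidth values collected this way are what a rate-selection routine would compare against its throughput estimate.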

Content Protection

Since this blog series is focused on the topic of premium subscription content that is required to be protected, I want to briefly discuss the features incorporated in the DASH specifications that describe support for encrypted media.

There are actually four separate documents that make up the DASH specification set. Part 1 (ISO/IEC 23009-1) is the base DASH specification. Part 2 (ISO/IEC 23009-2) describes the requirements for conformance software to validate the specification. Part 3 (ISO/IEC 23009-3) provides guidelines for implementing the DASH spec. Finally, Part 4 (ISO/IEC 23009-4) describes content protection for segment encryption and authentication. In segment encryption, the entire segment file is encrypted and hashed so that its contents can be protected and its integrity validated. This is different from the “sample encryption” that is the focus of this blog series. For sample encryption, only audio and video sample information is encrypted, leaving container and codec metadata “in the clear”.

The ContentProtection element indicates that one or more media components are protected in some way. ContentProtection elements can be specified at either the Representation or AdaptationSet level in the MPD. The schemeIdUri attribute of the ContentProtection element uniquely identifies the particular system used to protect the content. I will dive further into the usage of this element when we cover CommonEncryption in Part 4 of the blog series.

...

AAAAUXBzc2gAAAAA7e+LqXnWSs6jyCfc1R0h7QAAADEIARIQWYGZeOWWXjWir2xOsQ2lvBoJY2FibGVsYWJzIgdUV09LRVlTKgVBVURJTzIA

AAAANHBzc2gBAAAAEHfv7MCyTQKs4zweUuL7SwAAAAFZgZl45ZZeNaKvbE6xDaW8AAAAAA==

...
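The two base64 strings in the excerpt above decode to `pssh` (Protection System Specific Header) boxes, the ISOBMFF structure that Common Encryption uses to carry DRM initialization data. The following sketch decodes the box header fields. It assumes the standard `pssh` layout (size, type, version and flags, a 16-byte system ID, an optional key ID list in version 1, then a data length), and `parse_pssh` is a hypothetical helper name, not part of any library.

```python
import base64
import struct
import uuid

# The two strings from the excerpt above.
PSSH_A = ("AAAAUXBzc2gAAAAA7e+LqXnWSs6jyCfc1R0h7QAAADEIARIQWYGZeOWWXjWi"
          "r2xOsQ2lvBoJY2FibGVsYWJzIgdUV09LRVlTKgVBVURJTzIA")
PSSH_B = ("AAAANHBzc2gBAAAAEHfv7MCyTQKs4zweUuL7SwAAAAFZgZl45ZZeNaKvbE6x"
          "DaW8AAAAAA==")


def parse_pssh(b64_box: str) -> dict:
    """Decode the header fields of a base64-encoded pssh box."""
    box = base64.b64decode(b64_box)
    size, box_type = struct.unpack(">I4s", box[:8])
    if box_type != b"pssh":
        raise ValueError("not a pssh box")
    version = box[8]                       # bytes 9-11 are flags
    system_id = uuid.UUID(bytes=box[12:28])
    offset = 28
    key_ids = []
    if version > 0:                        # version 1 adds a key ID list
        (count,) = struct.unpack(">I", box[offset:offset + 4])
        offset += 4
        for _ in range(count):
            key_ids.append(uuid.UUID(bytes=box[offset:offset + 16]))
            offset += 16
    (data_size,) = struct.unpack(">I", box[offset:offset + 4])
    return {
        "size": size,
        "version": version,
        "system_id": str(system_id),
        "key_ids": [str(k) for k in key_ids],
        "data_size": data_size,
    }


for encoded in (PSSH_A, PSSH_B):
    print(parse_pssh(encoded))
```

The first box carries the system ID registered for Widevine (edef8ba9-79d6-4ace-a3c8-27dcd51d21ed) along with system-specific data. The second is a version 1 box that uses the common system ID (1077efec-c0b2-4d02-ace3-3c1e52e2fb4b) and lists a single key ID with no additional data.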

DASH-IF

One of the drawbacks of MPEG-DASH being developed in an open standards body is the relative complexity of the final product. Individuals and organizations from around the globe have contributed their own requirements to the standardization effort. This leads to a specification that covers a vast array of use cases and deployment scenarios. When it comes to producing an actual implementation, however, the standard becomes somewhat unwieldy. Several new standards bodies have now emerged to whittle down the base DASH spec to a subset that meets the needs of their members and to reduce the complexity to the point that functioning workflows can actually be developed.

One such standards body is the DASH Industry Forum, or DASH-IF. What started out as a marketing and promotions group for MPEG-DASH has grown into a full-blown standards organization with members such as Adobe, Microsoft, Qualcomm, and Samsung. The goal of this group is to drive adoption of MPEG-DASH through the development of “interoperability points” that describe manifest restrictions, audio/video codecs, media containers, protection schemes, and more, in order to produce a more implementable subset of the overall DASH spec.

The DASH-IF Interoperability Guidelines for DASH-AVC/264 (latest version is 3.0) set forth the following requirements and restrictions on the base DASH specification:

- AVC/H.264 Video Codec (Main or High profiles)

- HE-AACv2 Audio Codec

- Fragmented ISOBMFF Segments

- MPEG-DASH “live” and “ondemand” profiles

- Segments are IDR-aligned across Representations and have specific tolerances with regard to variation in segment durations.

- CommonEncryption

- SMPTE-TT Closed Captions

Greg Rutz is a Lead Architect at CableLabs working on several projects related to digital video encoding/transcoding and digital rights management for online video.

This post is part of a technical blog series, "Standards-Based, Premium Content for the Modern Web".