Security

CableLabs Expands Engagement in the Connectivity Standards Alliance

Key Points

- CableLabs has advanced to Promoter Member status in the Connectivity Standards Alliance, gaining a board seat and greater influence over global Internet of Things and smart-home interoperability standards.

- As the broadband industry moves beyond speed as the primary differentiator, CableLabs is helping shape secure, reliable and interoperable device standards that improve the connected-home experience for consumers.

CableLabs has strengthened its role in the Connectivity Standards Alliance (CSA), becoming a Promoter Member and joining the Board of Directors. As smart homes become smarter and fill with more connected devices, the need for secure, reliable and interoperable devices has never been greater. Our expanded role enables us to help shape the standards that make that possible — from Matter to Zigbee to emerging Internet of Things (IoT) security frameworks.

The CSA is a standards development organization dedicated to the IoT industry and the connected home. It creates, manages and promotes standards across several working groups, including Matter, Zigbee, Product Security, Data Privacy and Aliro (physical access). CSA Matter devices use Wi-Fi and Thread to create an ecosystem “fabric” within the home to allow secure interoperability of IoT devices.

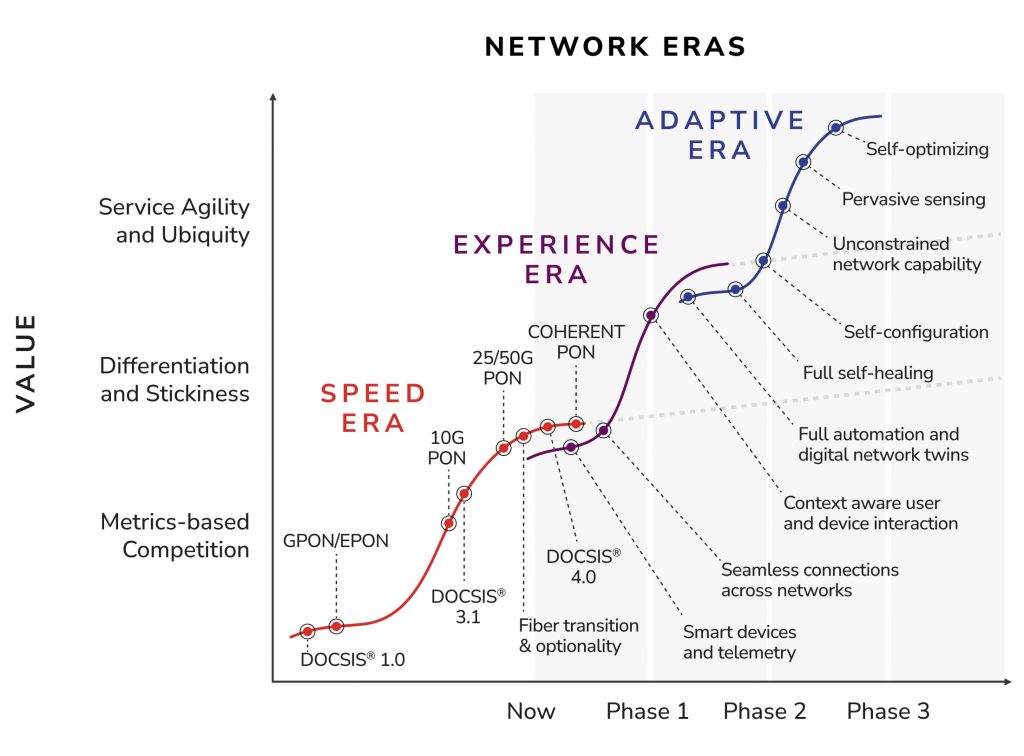

This work is increasingly critical as the broadband industry moves beyond speed as the primary differentiator. The foundation for CableLabs’ Technology Vision, our Eras of Broadband Innovation model illustrates the industry’s evolution from raw speed to intelligent, adaptive services.

These differentiating services are based upon a subscriber’s engagement with their networked devices, making it clear that continued increases in capacity, although necessary, will not be sufficient on their own to meet future connectivity expectations. Subscribers experience the network through their devices, and one of the first places they will see this is within the smart home and IoT space.

One of the heuristics in corporate governance is to use outsourced or contract IT help when operating fewer than 50–75 hosts, but to hire the first network admin once there are 75–100 managed hosts (or over 50 employees).

This year, Comcast reported that the average subscriber home has 36 connected devices. This means that managing those devices is likely already a concern for many consumers. How can operators make managing devices easier and safer for those subscribers? How can they ensure the complexity doesn’t lead to insecurity through user apathy or burdensome expectations?

Delivering the Best Experience Requires Collaboration

The Alliance’s objectives center on a simple premise: if subscribers enjoy their device interactions, if those devices work reliably and make life easier, if they’re secure and protect privacy, and if those devices are interoperable — the networks that make it all happen benefit. But the only way to make it work across manufacturers, ecosystems and technologies is for industry stakeholders to come together around a standards-based approach to secure device interoperability.

All of these customer experiences are areas where the broadband industry’s objectives intersect with those of smart home device providers and operators. Improving these experiences leads to increased adoption, which then drives cycles of economic growth for all participants in this sector — including those providing connectivity solutions.

Standardized, secure interoperability reduces network operator costs (e.g., support calls), lowers risk (e.g., insecure devices and botnets), enhances subscriber safety (e.g., home network vulnerabilities, physical premise security), simplifies device management (e.g., software updates) and improves the overall user experience. This is a key part of our engagement. The broadband industry’s role in providing a safe and easy subscriber experience doesn’t end at the cable modem.

CableLabs is engaging in this work for several reasons, which all play toward a larger vision:

- To help shape the future of smart home global IoT interoperability standards

- To represent the industry and interests in the global marketplace with subscribers

- To ensure broadband ecosystems have compatibility with devices using these standards

- To enhance the security posture of the broadband industry and within the smart home

- To promote industry collaboration and engagement including device manufacturers, ecosystem operators, testing labs, integrators, silicon providers and network operators

Taking On a Larger Leadership Role with CSA

CableLabs’ move from Participant to Promoter Member will enable us to take part in the decisions that guide the organization and to take on additional leadership roles within the working groups. We’ll have increased visibility and opportunities across the organization, and we can keep the broadband industry front and center in each of these engagement areas.

The board representatives for CableLabs will be our CISO and Distinguished Technologist Brian Scriber and principal architect Jason Page.

We look forward to contributing our unique perspectives on the network operator ecosystem and our Technology Vision for the industry. We’ll also bring a deep understanding of connectivity tooling like Wi-Fi, the home gateway/router experience, security principles across communications protocols and production PKI operations related to consumer electronics security, as well as a thorough understanding of the policy and regulatory space related to cybersecurity and connected devices.


A Unified Approach to AI Security in the Broadband Industry

Key Points

- AI has the potential to transform networks to be smarter, more adaptable and more reliable; however, this rapid evolution introduces new and complex security challenges.

- CableLabs is launching the AI Security Working Group to bring well-understood security principles into AI R&D, promoting best practices and practical security guidance for AI development to secure the cable broadband industry’s critical infrastructure.

AI is turning the vision of pervasively intelligent networks into reality. It’s becoming the driving force behind networks that are smarter, more adaptable, more reliable and more secure than ever. No longer limited to generating text, AI is evolving into an active operational agent within cable networks. It’s an evolution that unlocks unprecedented opportunities for innovation and new subscriber experiences.

However, this transformation also introduces new and complex security challenges.

Managing the Challenges of AI

Many of AI’s challenges stem from the relentless pace of innovation. New protocols, tools and methods are adopted soon after they emerge, leaving security as an afterthought. For instance, some AI agents are capable of autonomously interacting with data, using tools and calling APIs. All of this adds new layers of complexity, dependencies and attack surfaces. These risks should be carefully managed and addressed as early as possible in the design and development stages.

A key element in any risk management framework is grounding this innovation in a secure foundation that spans the entire AI stack, from the large language models (LLMs) at the core, to the AI agents and their prompts, to the tools and APIs they use, and finally to the AI protocols that connect and coordinate them.

CableLabs is investigating cable-specific elements where we can bring well-understood security principles into AI R&D, laying the foundation of protecting cable’s critical infrastructure from tampering and subversion. Just like any other software, the AI stack would benefit from provenance guarantees, integrity, identity assertions and transitive credentialing.
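As a rough illustration of the integrity piece of that list, the sketch below checks an AI artifact (for example, a tool manifest or model file) against a digest recorded when the artifact was approved. The function names and the manifest contents are hypothetical. A pinned SHA-256 digest only detects tampering; provenance and identity assertions would additionally require signatures and a PKI, which this sketch does not attempt.

```python
import hashlib
import hmac


def artifact_digest(data: bytes) -> str:
    """Return the SHA-256 digest of an artifact as a hex string."""
    return hashlib.sha256(data).hexdigest()


def verify_artifact(data: bytes, pinned_digest: str) -> bool:
    """Compare the artifact's digest with the pinned value in constant time."""
    return hmac.compare_digest(artifact_digest(data), pinned_digest)


# Hypothetical tool manifest, pinned at review time.
approved_manifest = b'{"tool": "get_modem_status", "version": "1.0"}'
pinned = artifact_digest(approved_manifest)

# A later copy that gained an extra tool no longer matches the pin.
tampered_manifest = b'{"tool": "get_modem_status", "version": "1.0", "extra": "reboot_all"}'

print(verify_artifact(approved_manifest, pinned))
print(verify_artifact(tampered_manifest, pinned))
```

Refusing to load anything that fails the check keeps an agent from picking up a modified prompt, tool definition or model without review.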

The AI Security Working Group

Because AI architectures interact directly with critical systems and sensitive data, securing the entire ecosystem — from clients and servers to the protocols that link them — isn’t just a technical best practice; it’s a strategic imperative for the industry. A unified focus on AI security will enable trusted communications and accelerate innovation across both business and technical domains.

To foster collaborative input and discussion, CableLabs is launching the AI Security Working Group (AISWG), a CableLabs member initiative focused on developing and promoting best common practices and practical security guidance for AI deployment relevant to the cable broadband industry. By bringing our members together in this forum, we can help our industry harness the transformative power of AI confidently, responsibly and securely.

CableLabs is also actively engaged in two other non-cable groups working on securing AI:

- The Messaging, Malware and Mobile Anti-Abuse Working Group (M3AAWG) is working on preventing abuse of and abuse from AI systems.

- The Open Worldwide Application Security Project (OWASP) is working on a broad range of threats to AI.

How You Can Help

There are a few ways CableLabs members can be part of this essential initiative:

- Maximize your membership: Register for a CableLabs account to explore exclusive, member-only resources and content.

- Join the working group: Request to join the AI Security Working Group, and help us define the future of AI security. (A CableLabs account is required to log in.)

- Connect with us: Introduce us to the people in your organization who are focused on AI security, governance and agent protocol implementation.


Securing Smart Homes: Protecting Networks in a Connected World

Key Points

- Advancements in smart home security standards have improved device security markedly over the last decade.

- Adapting and improving pre-existing security tools for the proliferation of smart home devices will be critical to satisfying consumers’ needs in the Experience Era.

- A CableLabs working group explores potential challenges and solutions for continuing to evolve security tools to meet the changing and surging demand of new smart devices.

Have you ever turned the car around just to make sure you turned off your oven or unplugged your iron? That same anxious instinct contrasts with the whimsical possibility of lights switching on and off as we enter or leave a room.

Imagine if, instead of being one more burden to manage, a building — and everything inside of it — could become an active assistant in consumers’ hectic lives. As we realize the Technology Vision for the industry and grow into the Experience Era of broadband innovation, these once-fantastical concepts are no longer outlandish ideas or the one-off creations of hobbyists.

Now, thanks to new advancements in smart home technology and Internet of Things (IoT) devices, it actually is possible for consumers to connect everyday appliances — ovens, irons, dishwashers, mirrors, toothbrushes… the list goes on and on — to their smart home ecosystems.

Over the last several years, smart home devices have proliferated in nearly every store you may enter or visit online. In some cases, it is now more difficult to find a “non-smart” version of a device than just a few years ago, when it was difficult to find the smart version. Just try to find a television without apps built in.

While smart home devices enhance convenience and control within consumers’ homes, concerns about their security persist. Are these smart devices truly secure? What steps can consumers take to keep their smart homes safe?

The Hidden Complexity of Smart Devices

Smart home devices are often small and sometimes battery powered, which can make them appear simple at first glance. In some ways, using them can be as straightforward as turning a lightbulb on or off. Yet behind that simplicity lies significant complexity: connecting these devices to a network, managing software and firmware updates, handling interactions with other devices and even navigating the challenges of the initial physical installation.

For example, a smart garage door opener might be purchased and installed but onboarding frustrations may have prevented the customer from actually connecting it to the home network. Despite it not being connected to the user’s network, the device’s wireless interface may still be turned on — which can allow anyone within radio signal range to connect and potentially control it.

Recent advancements in device security have helped transform this landscape. These complexities have been redesigned with security integrated from the ground up, allowing devices to be easily and confidently onboarded to a consumer’s home network.

Standardization Has Made Smart Home Security Easier

Many smart home device manufacturers have worked hard to standardize and simplify installation and onboarding activities — which means consumers don’t need to be professional IT experts to bring a smart device into their home networks. Through these standardization processes, smart home devices enable security settings by default.

One of the more prominent smart home standards organizations is the Connectivity Standards Alliance (CSA). Matter, one specification developed within CSA, is an industry-wide initiative designed to simplify setup, interoperability and security. A Matter-certified device must meet strict requirements, so consumers can trust that essential protections are in place.

Network operators along with us here at CableLabs have been a part of these conversations within CSA, helping ensure that smart homes are not just functional, but also secure.

Practical Security With Today’s Smart Home

With smart home specifications and standards such as Matter, smart home device security is standard and user-friendly. For instance, many of the most common security recommendations are enabled by default:

- Automatic updates: Over-the-air device update capabilities are required for certification.

- Network segmentation: Devices are placed on a virtual network called a fabric, where only authenticated and authorized devices can communicate and send commands.

- Authenticity: The authentication and authorization mechanisms in place in Matter utilize the same foundational PKI technologies that ensure authenticity and enable strong encryption of home network traffic as it leaves the cable modem.

Baseline security settings on devices are now the standard in most instances. When paired with a network operator’s Wi-Fi access points and apps, it’s even easier to observe and maintain the continued security settings of these devices.

What Can Consumers Do?

Below are just a few routines that can assist users with the continued security of their smart home devices:

- Control guest access of smart home devices: If guest access is needed, guest accounts should be created through the main administrator account.

- Watch for unusual behavior: Identify when a device isn’t behaving normally. Smart home devices typically consume very little network bandwidth, so a sudden spike stands out. From a network provider’s app, customers can view spikes in bandwidth usage, which may signal security issues. Consumers can then take appropriate actions to ensure security.

- Retire or isolate old devices: If a device has stopped receiving automatic updates, it’s standard operating procedure to replace it.
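The “watch for unusual behavior” routine above can be sketched in a few lines. This is a minimal illustration, not how any operator app actually works: the function name, the sample readings and the thresholds (five times the median, with a 100 kbps floor) are all assumptions chosen for the example.

```python
from statistics import median


def find_bandwidth_spikes(samples_kbps, factor=5.0, min_kbps=100.0):
    """Return the indexes of samples well above the device's usual level.

    A sample counts as a spike when it exceeds both `factor` times the
    median reading and an absolute floor of `min_kbps`.
    """
    if not samples_kbps:
        return []
    baseline = median(samples_kbps)
    threshold = max(baseline * factor, min_kbps)
    return [i for i, value in enumerate(samples_kbps) if value > threshold]


# Hypothetical hourly readings for a smart plug, in kilobits per second.
smart_plug = [4, 6, 5, 3, 5, 900, 4, 6]
spikes = find_bandwidth_spikes(smart_plug)
print("Spike at sample index:", spikes)
```

Using the median rather than the mean keeps a single large spike from inflating the baseline it is compared against.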

Working to Make the Internet Safer and More Secure

Smart homes are making progress; it is much easier now than it was years ago to bring a smart home device onto a consumer’s home network. CableLabs continues to work to improve the onboarding process for these devices and make consumers’ connectivity experiences more secure — and more seamless.

When smart home devices have been certified to conform to a smart home standard, consumers can feel confident that their household devices have security controls built in.

Here at CableLabs, working alongside our member operators in our IoT Security working group, we continue to advance our secure network solutions so that smart home devices can safely and securely connect to smart homes. If you’re an employee of a CableLabs member operator and want to help shape these smarter, more secure solutions, consider joining the working group (member login required).

This work is foundational as we build networks that intelligently respond to user and device needs in the moment. By prioritizing connectivity that understands context and adapts automatically, CableLabs, our members and our industry partners are transforming how people experience their connected environment.

To learn more about the Experience Era and how it is redefining what connectivity means for consumers, read about the Technology Vision for the future of the industry.


Addressing Emerging Cryptographic Threats in the Age of Quantum Computing

Key Points

- Threats against cryptography are evolving; among them, the threat of quantum computing is increasingly putting critical infrastructure and the data that traverses it at risk.

- Enabling cryptographic agility and leveraging it to migrate to quantum-safe cryptography are approaches that can mitigate these emerging threats.

- Through CableLabs’ Future of Cryptography Working Group, we collaborate with operators and vendors to help the industry navigate the migration to new cryptographic paradigms.

Cryptography is a foundational security technology used to protect digital information by providing the underpinnings for confidentiality, authentication and integrity. Today’s cryptographic algorithms may soon be undermined by emerging attacks, including the realization of a cryptographically relevant quantum computer (CRQC). Such attacks pose a very real and increasingly urgent threat across virtually all industries and their technologies, including broadband network infrastructure.

With cryptography and public key infrastructure being foundational to the security of cable networks, the broadband industry is uniquely positioned to rise to this challenge and seize the opportunity to future-proof networks to be robust, flexible and responsive to any cryptographic threat — quantum or otherwise. In this blog, we’ll review the threats against cryptography on the horizon, the solution to mitigate those threats and actions to start migration to new cryptographic paradigms. Many organizations, including network operators, have started taking action to plan for and execute cryptographic migrations.

The Threat: Attacks Against Cryptography

So, what exactly is the risk? To put it simply, quantum computers will one day be powerful enough to crack the asymmetric cryptography that is the basis of confidentiality, authenticity and integrity of data at all layers for virtually all devices deployed today. The current timeline for the potential development of a CRQC is 10–30 years — with increasing probability. That estimate isn’t certain, and recent research advancements suggest that it could be sooner.

While that time frame is wide, the risk of compromise is relevant today, thanks to the “harvest now, decrypt later” style of attack. In this scenario, adversaries may capture encrypted data today and retain it, planning to decrypt it once they have access to a CRQC. Any sensitive data generated today that will remain sensitive in the future (such as health records) is therefore at risk today.
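One commonly cited way to reason about this exposure is Mosca’s inequality, a rule of thumb that is not part of the guidance described here: if the number of years data must stay confidential plus the number of years a migration will take exceeds the number of years until a CRQC exists, that data is already exposed. The sketch below applies it to both ends of the 10–30 year estimate; the 25-year shelf life and 5-year migration are hypothetical inputs.

```python
def exposed_to_harvest_now_decrypt_later(shelf_life_years, migration_years, years_to_crqc):
    """Mosca's inequality: exposed when shelf life + migration time > time to a CRQC."""
    return shelf_life_years + migration_years > years_to_crqc


# Hypothetical inputs: records that stay sensitive for 25 years and a
# 5-year migration, checked against both ends of the 10-30 year estimate.
for years_to_crqc in (10, 30):
    exposed = exposed_to_harvest_now_decrypt_later(25, 5, years_to_crqc)
    print(f"CRQC in {years_to_crqc} years -> exposed: {exposed}")
```

Because the CRQC timeline is uncertain, it is the near end of the range that drives planning for long-lived data.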

The Solution: Cryptographic Agility and Post-Quantum Cryptography

So, how can the industry future-proof itself against these threats? The solution is twofold:

- Enabling cryptographic agility: Cryptographic agility is the ability to switch cryptographic systems quickly and efficiently. It’s a forward-looking design principle and capability that helps security interfaces stay flexible and adaptable in the face of all future threats.

- Post-quantum cryptography (PQC): Also called quantum-safe cryptography, these new cryptographic algorithms are designed to resist attacks from quantum computers. Their standardization, primarily driven by the National Institute of Standards and Technology in the United States, is a global effort that’s been ongoing for the last decade.

PQC aims to be the replacement for today’s vulnerable cryptography. Cryptographic agility is the framework by which systems will be migrated to PQC (and future iterations of cryptographic algorithms). Together, these strategies offer a path forward.
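To make the idea of cryptographic agility a little more concrete, here is a minimal sketch of one common pattern: callers name an algorithm by identifier, the identifier travels with the protected data, and the set of accepted algorithms is policy rather than hard-coded logic. The registry, function names and identifiers are assumptions for illustration, and the two HMAC variants from Python’s standard library merely stand in for whatever algorithms a real interface would carry, including post-quantum ones.

```python
import hashlib
import hmac

# Registry of supported algorithms; adding or retiring one is a data change.
MAC_ALGORITHMS = {
    "hmac-sha256": hashlib.sha256,
    "hmac-sha512": hashlib.sha512,
}


def sign(key: bytes, message: bytes, algorithm: str) -> str:
    """Return a tag labeled with the algorithm that produced it."""
    tag = hmac.new(key, message, MAC_ALGORITHMS[algorithm]).hexdigest()
    return f"{algorithm}:{tag}"


def verify(key: bytes, message: bytes, labeled_tag: str, allowed: set) -> bool:
    """Verify a labeled tag, rejecting algorithms that policy no longer allows."""
    algorithm, _, tag = labeled_tag.partition(":")
    if algorithm not in allowed or algorithm not in MAC_ALGORITHMS:
        return False
    expected = hmac.new(key, message, MAC_ALGORITHMS[algorithm]).hexdigest()
    return hmac.compare_digest(expected, tag)


key = b"demo-key-not-for-production"
old_tag = sign(key, b"config-v1", "hmac-sha256")
new_tag = sign(key, b"config-v1", "hmac-sha512")

# Migrating means changing the policy set, not the calling code.
print(verify(key, b"config-v1", old_tag, {"hmac-sha256", "hmac-sha512"}))
print(verify(key, b"config-v1", old_tag, {"hmac-sha512"}))
print(verify(key, b"config-v1", new_tag, {"hmac-sha512"}))
```

The design choice worth noting is that retiring an algorithm touches only the `allowed` policy, which is what lets a migration happen quickly once a replacement is available.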

Migrating to PQC and Leveraging Existing Guidance

From existing guidance on cryptographic migrations, the CableLabs Future of Cryptography Working Group — a collaborative initiative bringing together operators, vendors and security experts to prepare for and to navigate changes to evolving cryptography — has identified certain “no-regret” actions, which can benefit network security posture regardless of whether or when the threat of a CRQC is realized. Some of these no-regret actions include:

- Establishing a cryptographic inventory: A comprehensive inventory of what cryptography is deployed is a critical artifact for any organization to compile as a first step towards cryptographic migrations.

- Assessing and estimating cryptographic agility enablement: Cryptographic agility is a deceptively simple concept; effectively enabling it begins with quantifying how cryptographically agile security interfaces are today. Tools exist today to aid in that effort.

- Discussions with vendors: Vendors play a critical role in cryptographic migrations, providing the implementation of security interfaces and concretely enabling cryptographic agility. Therefore, early and ongoing engagement with vendors on their roadmaps for enabling cryptographic agility and migrations to new cryptographic paradigms is key.

- Risk assessments and defining risk tolerance: Migration of cryptography at full organization scale is an optimization problem; undertaking risk assessment activities to identify the devices, services and interfaces that are at highest risk and should be prioritized for migration is crucial.
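The first and last of these actions can be sketched together: a small inventory record, plus a first-pass ordering that surfaces quantum-vulnerable entries whose protection must last the longest. The record fields, system names and year values are hypothetical. The set of vulnerable algorithm families reflects the public-key schemes known to be breakable by Shor’s algorithm; a real assessment would weigh many more factors than this.

```python
from dataclasses import dataclass

# Public-key algorithm families breakable by Shor's algorithm on a CRQC.
QUANTUM_VULNERABLE = {"RSA", "ECDSA", "ECDH", "DH"}


@dataclass
class CryptoUse:
    system: str                   # where the cryptography is deployed
    algorithm: str                # algorithm family in use
    protection_needed_years: int  # how long the protection must hold


def migration_priority(inventory):
    """Return quantum-vulnerable entries, longest protection need first."""
    vulnerable = [entry for entry in inventory if entry.algorithm in QUANTUM_VULNERABLE]
    return sorted(vulnerable, key=lambda entry: entry.protection_needed_years, reverse=True)


# Hypothetical inventory entries.
inventory = [
    CryptoUse("subscriber portal TLS key exchange", "ECDH", 10),
    CryptoUse("firmware signing", "RSA", 15),
    CryptoUse("log archive encryption", "AES-256", 7),
]

for entry in migration_priority(inventory):
    print(entry.system, entry.algorithm, entry.protection_needed_years)
```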

Taking Action Through Collaboration

Over the next decade, regulatory bodies around the world expect critical infrastructure — including broadband networks — to adopt quantum-safe cryptography. That makes the next five years crucial for operators looking to future-proof their networks and enable cryptographic agility as a key security capability. Reaching that goal will require deep collaboration, not just between network operators, but across the entire ecosystem of equipment manufacturers, software developers and standards organizations.

To ensure a smoother transition, the CableLabs Future of Cryptography Working Group is continuing to drive the foundational work to adapt current crypto migration and agility guidance to cable networks, identifying gaps therein and developing strategies to address those gaps. The working group’s mission is to develop practical, industry-specific guidance for enabling cryptographic agility as a new capability and migrating operator networks to post-quantum cryptography.

The threat may be complex, but the goal for the cable broadband industry is simple: Keep our networks, and the people who rely on them, secure for the future. If you’d like to learn more or contribute, the Future of Cryptography Working Group is open to CableLabs members and our vendor community. Join us here.


The Malicious Economy: What Happens If Your Defenses Are Insufficient?

Key Points

- Law enforcement is dedicating greater attention to incident reporting and active threat monitoring, an encouraging trend in the fight against ransomware. Still, threat actors continue to evolve their tactics, leading to rising numbers of victims and ransomware variants.

- CableLabs collaborates with several cybersecurity organizations, including M3AAWG, with which we helped develop and maintain best practices for responding to ransomware attacks.

Ransomware has changed a lot in the past few years. The term refers to a form of malicious software loaded by attackers to restrict access to files and other data with the intention of extracting payment from the owners of that data.

CableLabs has been working to make sure that residential and business subscribers have the tools they need not only for preparedness and prevention, but also in the event that ransomware actors target them.

Let’s take a look at how the ransomware landscape has evolved, how law enforcement has changed its approach and how one important document can alter the course of your network’s future.

The Law Enforcement Front

The global climate on the regulatory, legislative and law enforcement front has changed, as you can see in the table below.

| Technical Developments | Policy Involvement |
| Threat Actors & Threat Evolution | Cyber Insurance Market |
| Law Enforcement | National Security Implications |

Evolving Threat Actor Behavior

We’re also seeing changes in threat actor behavior. There’s been a sharp increase in both the number of victims (over 200 percent) and the number of ransomware variants (over 30 percent) in 2025 — a deviation from last year’s trends.

Ransomware-as-a-service (RaaS), the open availability of threat tools and communication among malicious actors all continue to evolve. Threat actors no longer have to find their own way into systems; they can buy access to already-opened systems and move immediately to the ransom phase. The horizontal disaggregation of the marketplace has enabled more threat actors to engage against more victims, with less technical know-how. Exploited vulnerabilities are now the primary method of malicious access, followed by compromised credentials and email/phishing.

Collaborating to Combat Threat Actors

CableLabs engages with several Information Sharing and Analysis Centers (ISACs) and anti-abuse groups. One of the more focused groups is the Messaging, Malware and Mobile Anti-Abuse Working Group (M3AAWG), where we’re proud to have helped to both originally build (and then shepherd updates to) the “M3AAWG Ransomware Active Attack Response Best Common Practices” document.

We do this work because — although the dogma of cybersecurity defense is to prepare, prepare, prepare — the reality is that no matter how good a network’s defenses are, they can always be stronger.

The Best Common Practices document starts with advice from victims who were previously infected, moves on to steps to follow, lists numerous resources, provides a high-level view of what to expect and finally offers decision guideposts about who to involve and when. The document helps with detection, analysis and response activities; demonstrates how to communicate; and enumerates the deliverables necessary for each stage.

This document doesn’t prescribe specific behaviors, but it helps to make sure the reader is equipped with the right questions to ask, as well as the considered order of approach to tackling a problem.

There will be decisions to make about when to declare an event, whether you have reporting requirements, what law enforcement’s role will be, which disclosures are necessary, whether you pay a ransom (or whether that is legally permissible in your situation), when and how to engage on cybersecurity insurance, and what your potential negotiation options are.

There are always collateral victims in attacks like these, and there may be actions possible or preferable on those fronts that will need to be evaluated. That process is one of many that will involve others within the organization. This document helps lay out who should be considered in each step.

The Importance of Having a Plan

Everyone hopes that this aspect of the global economy will come to a decisive end but, in reality, that’s neither the trend nor the expectation. In a dangerous world, it’s best to have a plan for how your company will act in a multitude of situations — even the unpleasant ones.

The Best Common Practices document is a tool for checking existing policies, technologies and the people involved in the prevention plans, but it can also be a cheat sheet for those who have had to balance other needs against external threats and suddenly find themselves in a difficult situation.

Read the “M3AAWG Ransomware Active Attack Response Best Common Practices” document to learn more about the options that are available for victims of ransomware attacks. The document is one resource in a broader cross-sector toolkit that helps defend against and manage the risk of ransomware threats. For more, check out:

- A Cybersecurity Framework 2.0 Community Profile from the National Institute of Standards and Technology (NIST)

- StopRansomware.gov from CISA

Winston Churchill famously said, “If you’re going through hell, keep going.”

These resources can show you how.


Hacker Summer Camp 2025 Debrief: AI and the New Threat Landscape

Key Points

- The annual confluence of cybersecurity conferences — known informally as Hacker Summer Camp — drew experts and practitioners from all corners of the cybersecurity ecosystem to Las Vegas in August.

- In this blog post, CableLabs highlights high-level takeaways from the Black Hat USA and DEF CON conferences — with the evolution of AI (and the security gaps arising from it) being a common theme.

- We delve deeper into our insights from the conferences in a new members-only technical brief and will explore the topics further in a CableLabs webinar on Sept. 17.

Last month’s Hacker Summer Camp brought together hackers, researchers, practitioners and leaders in cybersecurity to review the cutting edge of security research, share tools and techniques, and find out what’s at the front of everyone’s mind in the security space.

So, what was at the forefront of the conversation this year in Las Vegas, and what trends in cybersecurity do you need to be aware of?

We outline our takeaways from Black Hat USA and DEF CON in a new CableLabs technical brief, available exclusively for our member operators. We’ll also dive into the conferences further during a members-only webinar on Wednesday, Sept. 17. Members can register here to join us.

For now, some of our high-level insights are summarized here.

What’s Old Is New Again: Hacking Like It’s the 90s

With the rapid adoption of tooling like generative AI and its agentic variants, the implications of overlooking the basics in security are more impactful than ever. Many presentations at Hacker Summer Camp focused on this theme, with researchers consistently demonstrating how classic cyberattacks are still thriving, now applied to modern contexts like agentic AI. The takeaway? AI-centric software is still software, so the basics still apply: least privilege, separation of interests, thorough input sanitization and more.
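As a small illustration of two of those basics in an agentic setting, the sketch below gates tool calls requested by an AI agent. Least privilege is approximated with an explicit allowlist of tools, and input sanitization with strict per-argument patterns. The tool name, argument name and pattern are hypothetical and do not correspond to any real agent framework.

```python
import re

# Least privilege: only the tools listed here may be called at all.
# Each tool declares the exact arguments it accepts and a strict pattern for each.
ALLOWED_TOOLS = {
    "get_modem_status": {"mac": re.compile(r"[0-9a-f]{2}(:[0-9a-f]{2}){5}")},
}


def authorize_tool_call(tool: str, args: dict) -> bool:
    """Allow a call only for a listed tool with exactly the expected, well-formed arguments."""
    schema = ALLOWED_TOOLS.get(tool)
    if schema is None:
        return False
    if set(args) != set(schema):
        return False
    return all(
        isinstance(args[name], str) and pattern.fullmatch(args[name]) is not None
        for name, pattern in schema.items()
    )


print(authorize_tool_call("get_modem_status", {"mac": "00:1a:2b:3c:4d:5e"}))
print(authorize_tool_call("reboot_modem", {"mac": "00:1a:2b:3c:4d:5e"}))
print(authorize_tool_call("get_modem_status", {"mac": "00:1a:2b:3c:4d:5e; rm -rf /"}))
```

Validating with `fullmatch` against an expected shape, rather than trying to strip out known-bad characters, is the same allowlist approach long recommended for classic injection attacks.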

AI: Your New, Non-Deterministic, Insecure Execution Environment

Unsurprisingly, AI remains at the forefront of the discussion. What was new compared with recent years was the notion that AI is no longer simply a chatbot interface added to your architecture, but a whole new execution environment. Many presentations and demonstrations showcased how large language model (LLM)-powered applications and agentic AI constructions can be abused or confused, with several undesirable outcomes. While there’s a great deal of work to be done to secure emerging AI technologies, many strategies for mitigating attacks were recommended. AI is being adopted in cybersecurity too, from both the adversarial and defender perspectives, which makes it clear that another cybersecurity arms race is underway.

Automation Pitfalls: Deploy Fast, Break Faster — With a Bigger Blast Radius

Automation tooling, such as continuous integration and continuous delivery (CI/CD), took center stage in many discussions, where simple and subtle misconfigurations resulted in significant consequences, from initial access to lateral movement. With coding assistants and source code reviews among the killer use cases of agentic AI, today’s relationship between AI and automation tooling is a close one. The attack surface of those AI components thus has direct implications for that of the automation tooling.

Initial Access: CI/CD, Developers and Supply Chain Attacks

Finally, the conferences this year demonstrated the increasing burden on developers to act as bastions of security. However, the attack surface faced by the average developer is also increasing. This is due in part to the addition and management of AI assistants with access to code, as well as the use of plugins for integrated development environments (IDEs) like Visual Studio Code that are ubiquitous across the industry. Developers and the tools they interact with are increasingly valuable targets for adversaries, creating opportunities for initial access and even supply chain attacks.

Cybersecurity Evolution Continues Moving Forward

Hacker Summer Camp 2025 made it clear that the rapid shifts we’re seeing in technologies like AI have left significant gaps in ensuring that the basics of security remain covered. It also demonstrated that cybersecurity professionals and enthusiasts are doing the work required to address those gaps, as well as adopting new and adapting classic approaches to bolster cybersecurity controls.

To learn more about the content and themes covered during the conferences, download our members-only tech brief and plan to join us Wednesday, Sept. 17, for the Lessons from Hacker Summer Camp 2025 webinar.

If you’re an employee of a CableLabs member operator and don’t yet have an account, register for access to the tech brief and much more member-exclusive content.

Security

Tangled Web: Navigating Security and Privacy Risks of Overlay Networks

Key Points

- By opening their networks to third parties, end users may be inviting risk from botnets, DDoS attacks and other potentially illegal activities.

- Learn how overlay networks function, why deployments of these networks are becoming more common and what the security and privacy risks are for internet service providers and their customers.

Residential proxies and decentralized physical infrastructure networks (DePINs) are technologies that enable end users to participate in semi-anonymous communications similar in function to virtual private networks (VPNs) by essentially sharing their broadband connection with anonymous third-party users. These types of networks are not new, but they have become more popular and easier to set up (sometimes even inadvertently), and they are advertised to subscribers as a way to make passive income, remove geo-blocking restrictions, and increase their privacy and security.

In this blog, we’ll look at how these networks function, why subscribers are implementing them on their home networks, and finally the security and privacy risks presented by these types of networks to both subscribers and internet service providers (ISPs).

What Are Overlay Networks?

Generally speaking, overlay networks are logical networks built on top of existing physical networks. Residential proxies and DePINs are examples of overlay networks that consist of software or hardware that runs on the subscriber’s home network or mobile device.

Many of these networks include a crypto token (bitcoin, Ethereum, etc.) that allows the end user to earn a financial stake by sharing their bandwidth in the overlay network. These networks are marketed to subscribers as a way to earn passive income, with catchphrases like, “Get paid for your unused internet” or “Turn your unused internet into cash,” and companies offering these services often have signup bonuses, specials, referral incentive programs and pyramid schemes.

Harms to the Subscriber

End users believe that they will get extra security and privacy by participating in these types of networks. However, they often face a very different reality.

To participate, users must put their trust in the proxy provider, which has strong incentives to monetize their access to end-user data and online activity by selling user information to data brokers or other third parties. For example, privacy violations can occur by leaking sensitive information, such as what sites the subscriber is visiting, to third parties for targeted ads and profiling.

By sharing their broadband connection with these proxy networks, subscribers may unwittingly participate in botnets, distributed denial-of-service (DDoS) attacks and other illegal activities such as copyright violations or, even worse, facilitating the transfer of child sexual abuse material.

The broadband subscriber simply cannot know what undesirable or illegal traffic they are allowing to transit their broadband connection. This can harm the reputation of the subscriber’s IP address, which could result in the subscriber’s access to legitimate services being blocked. It could even result in legal actions against the subscriber as government authorities will track down the often-unwitting subscriber by their IP address.

Another way that a broadband subscriber may suffer harm is through the unintentional installation of malware or info-stealing software. For example, a cybercrime campaign by a group named Void Arachne uses a malicious installer for virtual private networks (VPNs) to embed deepfake and artificial intelligence (AI) software to enhance its operations. End users may believe they are installing software that will enhance their privacy and security but are actually installing malware that tracks them and feeds sensitive data to bad actors.

Harms to the Broadband Network

Residential proxies consume bandwidth and produce traffic that is neither directed to nor originating from the broadband subscriber. This extra bandwidth consumption could adversely affect subscribers’ perceptions of their service and may increase costs for the network operator. There can be implications for peering agreements between operators as well. A residential proxy that facilitates the transfer of certain traffic may lead to lowered reputations of the IP addresses in use and potential blocking by external services.

ISPs face a much broader risk when it comes to IP reputation. The reputation of one IP that has been damaged due to running an overlay network can affect not just one subscriber but multiple subscribers as the IP address is reassigned through Dynamic Host Configuration Protocol (DHCP). If operators use network address translation (NAT), all addresses behind the NAT can be affected. This not only causes disruption in service for the subscribers but can also cause reputational harm to the ISP and its brand.
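To make the reach of a single damaged IP address concrete, the following Python sketch counts which subscribers end up holding a flagged address, either later through DHCP reassignment or at the same time behind a shared NAT address. The `Lease` record, the day-based timeline and the subscriber names are illustrative assumptions, and the sketch assumes the address’s reputation does not recover once it is flagged.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Lease:
    """Hypothetical record of a subscriber using a public IP for a span of days."""
    public_ip: str
    subscriber: str
    start_day: int
    end_day: int  # inclusive


def subscribers_exposed(flagged_ip: str, flagged_day: int, leases: list[Lease]) -> set[str]:
    """Subscribers who hold the flagged IP on or after the day its reputation was damaged."""
    return {
        lease.subscriber
        for lease in leases
        if lease.public_ip == flagged_ip and lease.end_day >= flagged_day
    }


leases = [
    # DHCP reassignment: sub-b inherits the address after sub-a's lease ends.
    Lease("192.0.2.10", "sub-a", 1, 10),
    Lease("192.0.2.10", "sub-b", 11, 20),
    Lease("192.0.2.11", "sub-c", 1, 20),
    # NAT: sub-d and sub-e share one public address at the same time.
    Lease("198.51.100.1", "sub-d", 1, 30),
    Lease("198.51.100.1", "sub-e", 1, 30),
]
```

In this toy data, flagging `192.0.2.10` on day 5 exposes both `sub-a` and the later holder `sub-b`, and flagging the NAT address `198.51.100.1` exposes every subscriber behind it.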

Some overlay networks require that static inbound port forwarding be set up to fully participate in the network. These ports are then easily scanned and recorded in databases such as Shodan, making participating nodes easy to discover. DePIN hardware will inevitably be deprecated and no longer receive firmware updates and security patches. This will lead to a higher risk of the devices being compromised and exploited for other purposes, such as participating in a botnet.

Improving Capabilities to Counter Threats

In summary, decentralized overlay networks such as residential proxies and DePINs pose real and significant security and privacy concerns for both subscribers and their ISPs. These technologies enable semi-anonymous communications but also increase the risk of reputational harm, disruption in service and potential malicious use.

As these networks become more widespread and are increasingly exploited by malicious actors, it is essential to improve detection capabilities and develop effective mitigation strategies to address these risks.

To effectively mitigate these risks, a multi-stakeholder approach is necessary, involving collaboration between civil society, ISPs, overlay network providers, regulatory bodies and law enforcement agencies. This can include implementing robust network monitoring and security protocols and developing guidelines for educating subscribers on safe usage practices. By taking a proactive and coordinated approach, we can minimize the risks associated with overlay networks and promote a safer and more secure online environment for all users.

If you are a CableLabs member or a vendor and are interested in collaborating with us on solutions for safer, more secure online experiences, explore our working groups and contact us using the button below.

Security

CableLabs Updates Framework for Improving Internet Routing Security

Key Points

- An update to CableLabs’ Routing Security Profile further demonstrates the need to continue to evolve the profile and underlying technical controls to stay ahead of a constantly changing threat landscape.

- The profile provides a holistic, risk management approach to routing security that is applicable to any autonomous system operator.

- CableLabs’ Cable Routing Engineering for Security and Trust Working Group (CREST WG) developed the profile.

Threats to internet routing infrastructure are diverse, persistent and changing — leaving critical communications networks susceptible to severe disruptions, such as data leakage, network outages and unauthorized access to sensitive information. Securing core routing protocols — including the Border Gateway Protocol (BGP) and the Resource Public Key Infrastructure (RPKI) — is an integral facet of the cybersecurity landscape and a focus of current efforts in the United States government’s strategy to improve the security of the nation’s internet routing ecosystem.

CableLabs has released an update to the “Cybersecurity Framework Profile for Internet Routing” (Routing Security Profile or RSP). The profile serves as a foundation for improving the security of the internet’s routing system. An actionable and adaptable guide, the RSP is aligned with the National Institute of Standards and Technology (NIST) Cybersecurity Framework (CSF), which enables internet service providers (ISPs), enterprise networks, cloud service providers and organizations of all sizes to proactively identify risks and mitigate threats to enhance routing infrastructure security.

The RSP is an extension of CableLabs’ and the cable industry’s longstanding leadership and commitment to building and maintaining a more secure internet ecosystem. It was developed in response to a call to action by NIST to submit examples of “profiles” mapped to the CSF that are aimed at addressing cybersecurity risks associated with a particular business activity or operation.

Improvement Through Feedback and Alignment

The first version of the RSP (v1.0) was released in January 2024 in conjunction with an event co-hosted with NCTA — the Internet & Television Association, featuring technical experts and key government officials from NIST, the Federal Communications Commission (FCC), the National Telecommunications and Information Administration (NTIA), the Cybersecurity and Infrastructure Security Agency (CISA) and the White House Office of the National Cyber Director (ONCD).

Following the release of the first version of the RSP, CableLabs conducted outreach to other relevant stakeholders within the broader internet community to raise awareness about this work and to seek feedback to help improve the profile. In addition, NIST released its updated CSF 2.0 in February 2024.

The RSP update reflects stakeholder input received to date and accounts for changes in the NIST CSF 2.0. In particular, the RSP v2.0:

- Aligns with NIST CSF 2.0’s addition of a “Govern” function and revisions of subcategories in the RSP’s mapping of routing security best practices and standards to the applicable key categories and subcategories of the NIST CSF 2.0’s core functions.

- Adds routing security considerations for most subcategories that previously did not include such information.

- Incorporates informative and relevant references within the context of the mapping rather than as a separate column of citations.

Advancing Routing Security Through Public-Private Partnership

Since its release, the RSP has been cited as a resource by various government stakeholders in recent actions and initiatives, including the BGP webpage of NTIA’s Communications Supply Chain Risk Information Partnership (C-SCRIP), the FCC’s proposed BGP rules and ONCD’s Roadmap to Enhancing Internet Routing Security.

In addition, CableLabs continues to closely engage in public-private stakeholder working groups. They include the joint working group recently established by CISA and ONCD, in collaboration with the Communications and IT Sector Coordinating Councils. The working group was created, according to the ONCD roadmap, “under the auspices of the Critical Infrastructure Partnership Advisory Council to develop resources and materials to advance ROA and ROV implementation and Internet routing security.”
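For readers less familiar with the ROA and ROV terms in the roadmap quote: a Route Origin Authorization (ROA) states which autonomous system may originate a prefix and how specific the announcement may be, and Route Origin Validation (ROV) checks BGP announcements against those ROAs. The Python sketch below follows the valid / invalid / not-found outcomes described in RFC 6811. The `Roa` class and `validate_origin` function are illustrative names, and the prefixes and AS numbers come from ranges reserved for documentation; this is not taken from the RSP itself.

```python
from dataclasses import dataclass
from ipaddress import ip_network


@dataclass(frozen=True)
class Roa:
    """Simplified ROA: a prefix, the longest allowed announcement, and the authorized origin AS."""
    prefix: str
    max_length: int
    asn: int


def validate_origin(route_prefix: str, origin_asn: int, roas: list[Roa]) -> str:
    """Classify a BGP announcement as 'valid', 'invalid' or 'not-found'."""
    route = ip_network(route_prefix)
    covered = False
    for roa in roas:
        roa_net = ip_network(roa.prefix)
        # Only ROAs of the same address family whose prefix covers the route are relevant.
        if route.version != roa_net.version or not route.subnet_of(roa_net):
            continue
        covered = True
        # A match needs the right origin AS and a prefix no longer than max_length.
        if roa.asn == origin_asn and route.prefixlen <= roa.max_length:
            return "valid"
    # Covered by at least one ROA but matched by none: invalid. No covering ROA: not-found.
    return "invalid" if covered else "not-found"


roas = [Roa(prefix="203.0.113.0/24", max_length=24, asn=64500)]
```

Against this single ROA, `203.0.113.0/24` announced by AS 64500 is valid, the same prefix from AS 64501 is invalid, the more specific `203.0.113.128/25` is invalid even from AS 64500, and `198.51.100.0/24` is not-found because no ROA covers it.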

The Ever-Evolving Cybersecurity Puzzle

The RSP remains a framework for improving security and managing risks for internet routing, which is just one key piece of a larger critical infrastructure cybersecurity puzzle. As with any endeavor in security, the RSP will evolve over time to reflect changes to the NIST CSF, advances in routing security technologies and the rapidly emerging security threat landscape.

The RSP was developed by CableLabs’ Cable Routing Engineering for Security and Trust Working Group (CREST WG). The group is composed of routing security technologists from CableLabs and NCTA, as well as network operators from around the world.

Learn more about all CableLabs’ working groups, including the CREST WG, and how to join us in this critical work. Download the profile here, or view it using the button below.

Security

Driving Industry Development of Zero Trust Through Best Common Practices

Key Points

- As the architecture of networks continues to evolve, we must continue to evolve how we approach security.

- Governments have been pushing zero trust implementation for critical infrastructure, including the broadband industry.

- CableLabs and its members formed the Zero Trust and Infrastructure Security (ØTIS) working group, which aims to develop best common practices (BCP) that focus on zero trust implementation, secure automation and security monitoring, as well as defining consistent and default security controls for infrastructure elements.

In recent years, the U.S. government has undertaken efforts to adopt a zero trust architecture strategy for security to protect critical data and infrastructure across federal systems. It has also urged critical infrastructure sectors — including the broadband industry — to implement zero trust concepts within their networks.

The industry plays a key role in managing the National Critical Functions (NCFs) as a part of the Cybersecurity and Infrastructure Security Agency (CISA) critical infrastructure sectors. Therefore, cable operators need to embrace zero trust concepts and do their best to apply them to their infrastructure elements.

What Is Zero Trust?

For quite a long time, some critical infrastructure elements have been considered trusted because they happen to be physically located within the operator’s perimeter (e.g., back offices, trust domains). However, this approach can’t protect these infrastructure elements from threat vectors that exist within the operator’s perimeter, such as illicit lateral movement. Additionally, conventional solid, hardware-based network perimeters are vanishing as the industry shifts toward software-defined, virtualized and cloud networks.

As specified in the NIST "Zero Trust Architecture" document (NIST SP 800-207), “zero trust assumes there is no implicit trust granted to assets or user accounts based solely on their physical or network location (i.e., local area networks versus the internet) or based on asset ownership (enterprise or personally owned).”
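One way to picture that definition is an access decision that never consults where a request comes from. The Python sketch below is a simplified illustration under assumed names (`AccessRequest`, `POLICY`, `decide` and the resource and role labels are all hypothetical); it is not the ØTIS BCP’s or NIST’s design, and a real deployment would evaluate many more signals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    """Hypothetical request attributes evaluated on every access attempt."""
    identity_verified: bool   # e.g., the caller passed strong authentication
    device_compliant: bool    # e.g., the device passed a posture check
    role: str
    resource: str
    source_network: str       # logged for context, deliberately NOT used to grant trust


# Hypothetical policy: which roles may reach which resources.
POLICY = {
    "router-config": {"network-admin"},
    "billing-db": {"billing-service"},
}


def decide(request: AccessRequest) -> bool:
    """Grant access on verified identity, device posture and role, never on location."""
    allowed_roles = POLICY.get(request.resource, set())
    return (
        request.identity_verified
        and request.device_compliant
        and request.role in allowed_roles
    )


inside_unverified = AccessRequest(False, True, "network-admin", "router-config", "back-office-lan")
outside_verified = AccessRequest(True, True, "network-admin", "router-config", "internet")
```

Here the unverified request from the back-office LAN is denied while the verified request from the internet is allowed, which is the opposite of what a perimeter-based model would conclude.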

What Is the Zero Trust Best Common Practices Document?

The Zero Trust Best Common Practices (ØTIS BCP), which will be released on September 24, was developed as a joint effort by CableLabs and steering committee members in the Zero Trust and Infrastructure Security (ØTIS) working group. Taking the aforementioned NIST SP 800-207 document and the CISA Zero Trust Maturity Model (ZTMM) into account during its development, the ØTIS BCP addresses security gaps that our members have identified and develops a zero trust security framework that covers the following areas:

- Credential protection and secure storage

- Identity security and data protection

- Asset and inventory management

- Supply chain risk management

- Secure automation

- Security monitoring and incident responses

- Boot security

- Policy-based access management

- Consistent security control

The ØTIS BCP is intended to serve as a guideline for cable operators and vendors as they implement zero trust concepts and support network convergence and automation. Cybersecurity professionals and decision-makers involved in the security of access networks may also find the ØTIS BCP informational because the document shows the broadband industry’s consensus on how to provide consistent security baselines for infrastructure access networks.

What Is the Next Step?

After releasing this initial version of the ØTIS BCP, we plan to expand the ØTIS working group so that it includes CableLabs’ vendor partners, who will review and further refine the recommendations. Notably, we’ll continue the process of mapping the ØTIS BCP to current and future guidance from relevant government agencies to identify potential gaps in the BCP and address those as appropriate.

How Can You Engage in the Zero Trust Effort?

If you’re a cable operator or vendor interested in taking part in this work, learn more about the ØTIS working group and how to join.

Security

Black Hat USA and DEF CON: A Lot to Unpack After “Hacker Summer Camp”

Key Points

- Pervasive and deep understanding is critical for security practitioners in securing their infrastructure.

- Core principles in security are paramount; applying and adhering to them across both existing and emerging technologies is crucial.

- Advanced technologies and techniques are being adopted by adversaries. To maintain our upper hand, we must carefully embrace the adoption of new technologies as well.

- AI adoption is not slowing down, nor is its application to security use cases or new ways to undermine its security. There continues to be immense potential here.

This year has been a particularly interesting one for cybersecurity. Notable incidents and other areas of focus in cybersecurity set the backdrop for “Hacker Summer Camp 2024” in Las Vegas in August. Topics frequently alluded to during this year’s conferences included:

- Increased focus on critical infrastructure — Critical infrastructure is increasingly complex, distributed and difficult to characterize in terms of security. This year’s conferences accordingly brought an increased attention to securing critical infrastructure.

- Echoes of the CrowdStrike incident — Although the now-infamous CrowdStrike Windows outage in July was a mistake, speakers often alluded to lessons that could be learned from the event from the perspective of critical infrastructure security. The outage and its fallout prompted discussions about what the impact could be if bad actors were behind a similar incident.

- The XZ Utils (almost) backdoor — The discovery of the XZ Utils backdoor in early 2024 — the focus of a dedicated talk at DEF CON — serves as a reminder of the growing sophistication of adversaries.

I’ve published a CableLabs Technical Brief to share my key takeaways from this mega cybersecurity event that combined the Black Hat USA 2024 and DEF CON 32 conferences. In addition to covering the highlights of talks and demos I attended, this Tech Brief delves deeply into the discussions I found to be most insightful and the commonalities I observed across several areas of the conferences.

There’s no denying that “Hacker Summer Camp” offers more than any one person could hope to see or do on the conference floor in a single day. Each conference was packed with a wealth of new research and perspectives, demonstrations and much more. Still, the key highlights in my Tech Brief provide a solid and in-depth overview of some of the most talked-about topics and issues existing today in the field of cybersecurity.

I’ve included more quick takeaways below, and CableLabs members looking for a more comprehensive debrief can download the Tech Brief.

Common Ties at Black Hat USA and DEF CON

I found that topics from the presentations, demonstrations and conversations at Black Hat and DEF CON fell into three overarching themes. I expand on the implications of these in the tech brief.

Deep (human) learning: A need for more pervasive understanding

Doing rigorous background research is key to gaining an upper hand in innovating and building strong security postures. Especially in light of rapid adoption of advanced technologies, security experts need to deepen their knowledge to better secure their infrastructure. Collaboration is also a crucial element of building deeper bases of knowledge on technical topics.

Back to basics: Returning to and applying core principles

The core principles of cybersecurity are foundational to maintaining a strong security posture when implementing, deploying or maintaining any technology. As security researchers and practitioners, part of our role is to see through the use cases toward the misuse cases as a first step to ensuring the fundamentals are there and to educate and empower others to do the same.

Inevitabilities and cybersecurity: What we must embrace and why

My Tech Brief elaborates on examples in which adversaries will adopt and take advantage of new technologies, regardless of our own adoption. There are always caveats and important details that must be accounted for to ensure the secure use of new technologies as they are adopted. However, the Tech Brief discusses how the potential benefits to bolster security that come with the thoughtful adoption of new technologies often significantly outweigh the risks that they introduce.

AI’s Rapid Adoption, Potential and Pitfalls

AI once again took center stage (including at Black Hat’s inaugural AI Summit). Particularly in focus were agentic AI, assistants and RAG-enhanced LLMs. Like last year, these tools were looked at through the (mostly mutually exclusive) lenses of “AI for security applications” and considerations of “the security of AI,” both of which present immense opportunities for research and innovation.

Download the Tech Brief to read my takeaways from notable talks about this from the conferences.

Building More Secure Networks Together

It’s a thrilling time in cybersecurity! With all of the innovations, perspectives and calls to action seen at Black Hat USA and DEF CON this year, it’s clear that there’s a lot of work to be done.

To read more from my debrief, download our members-only Tech Brief. Our member and vendor community can get involved in this work by participating in CableLabs’ working groups.

Did you know?

In addition to in-depth tech briefs covering events like this, CableLabs publishes short event recap reports — written by our technologists, exclusively for our members. Catch up on recent recaps (member login required).