Wireless

The Sticky Wi-Fi Challenge: Aligning Minds for Seamless Solutions

Key Points

- Consumers often face connectivity disruptions and instability when transitioning their devices between Wi-Fi and cellular networks — a frustrating problem sometimes known as “sticky Wi-Fi.”

- CableLabs is working with our members and industry stakeholders to align on a solution to this problem that enhances the user experience by achieving seamless connectivity.

“Have you ever had to…”

This phrase sometimes precedes a story about a challenging or difficult experience. For internet users around the world, maybe this rings a bell: Have you ever had to turn off the Wi-Fi on your phone to prevent calls from dropping when leaving home?

It’s likely that many people reading here can relate to this scenario. Why is that?

It’s because mobile devices tend to stick to Wi-Fi (whether at home, in the office or elsewhere) for too long, often switching to cellular when it’s too late. This can lead to dropped voice calls and data connections that stall for 20 seconds or more. This “sticky” behavior has existed ever since mobile devices first integrated Wi-Fi and cellular radios.

The problem stems from a fundamental characteristic of mobile devices, which follow a “Wi-Fi first” approach. It is compounded by the lack of a standardized mechanism for seamlessly transitioning between Wi-Fi and cellular networks, which leaves users vulnerable to connectivity disruptions.

How Does This Impact the End User?

In today’s digital age, we rely heavily on mobile devices for everything, from video calls and streaming services to online work and social interactions. Yet many users face a frustrating experience: When moving between cellular and Wi-Fi networks, their devices often struggle to maintain a stable connection, resulting in dropped calls, buffering videos or lost data sessions.

This lack of seamless connectivity not only disrupts user activities but also impacts perceptions of service quality, leading many to disable Wi-Fi altogether or switch to less efficient networks.

How Can We Improve the Experience?

Collaborating with member operators, CableLabs has been working to characterize the poor user experience that this problem creates and to understand how frequently it happens.

Our field testing included evaluating hundreds of video conference calls, testing video calling apps and making voice calls across devices from major manufacturers and operating systems. We assessed the user experience based on audio dropouts, video stutters and freezes, and dropped calls, and compiled the results.

Our members also analyzed hundreds of millions of Wi-Fi signal strength data points during times when phones transitioned from Wi-Fi to cellular networks. We even conducted surveys with actual customers to gather insights into their experience when leaving Wi-Fi coverage.

What Did We Find?

Long story short, the problem is real. It’s disruptive to users and occurs frequently. Our testing highlights the extent of this issue as summarized below.

- Most devices we tested experienced long periods (usually between 19 and 54 seconds) of bad audio, dropped audio and/or frozen video during calls.

- Standard voice-only calls had between 10 and 13 seconds of bad audio during the transition from Wi-Fi to cellular.

- Analysis of operator-provided Received Signal Strength Indicator (RSSI) data shows that, when phones transition from Wi-Fi to cellular, the RSSI is low enough to likely cause issues with Wi-Fi voice calls 60 percent of the time. For other apps, problems are likely to occur 40 percent of the time.

- About half of the surveyed users manually switch their mobile device between their home Wi-Fi and cellular at least once per week, with 44 percent of those users experiencing issues during the transition.
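The RSSI finding above boils down to a share-of-events calculation. The following sketch shows one way it could be computed from per-transition records. It assumes each record holds the Wi-Fi RSSI (in dBm) observed just before the phone moved to cellular; the record type, the function name and the -75 dBm and -80 dBm thresholds are hypothetical placeholders, not the thresholds CableLabs or its members used.

```python
from dataclasses import dataclass


@dataclass
class Transition:
    """One Wi-Fi-to-cellular handover event (hypothetical record format)."""
    rssi_dbm: float  # Wi-Fi RSSI observed just before the device left Wi-Fi


# Hypothetical thresholds: below these RSSI values we assume the application
# is likely to be impaired. Real thresholds depend on device, band and app.
VOICE_THRESHOLD_DBM = -75.0
OTHER_APPS_THRESHOLD_DBM = -80.0


def fraction_impaired(transitions: list[Transition], threshold_dbm: float) -> float:
    """Return the share of transitions whose RSSI fell below threshold_dbm."""
    if not transitions:
        return 0.0
    weak = sum(1 for t in transitions if t.rssi_dbm < threshold_dbm)
    return weak / len(transitions)


if __name__ == "__main__":
    # Made-up sample values for illustration only.
    samples = [Transition(r) for r in (-68, -74, -77, -79, -82, -85, -71, -88, -76, -90)]
    print(f"voice: {fraction_impaired(samples, VOICE_THRESHOLD_DBM):.0%}")
    print(f"other apps: {fraction_impaired(samples, OTHER_APPS_THRESHOLD_DBM):.0%}")
```

A stricter (higher) threshold for voice than for other apps reflects the assumption that real-time audio is the most sensitive to a weak link.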

The consequence is clear: Without seamless network transitions, user experience suffers, reliability drops and dissatisfaction increases.

While standards development organizations have made some advancements, particularly for Wi-Fi calling, these solutions have not yet fully addressed the latency and disruption challenges during network transitions for other applications.

What’s Next?

This problem is multifaceted, given the diverse set of stakeholders involved: device vendors, operating system (OS) vendors, chipset vendors, operators and application developers, each using its own proprietary algorithms and KPIs to try to solve the sticky Wi-Fi problem. Switching to cellular as soon as Wi-Fi starts to degrade can improve the user experience to a certain extent, but it won’t fully solve the problem.
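To make the early-switch idea concrete, here is a minimal sketch of an RSSI-based selector that leaves Wi-Fi once a moving average drops below one threshold and returns only after it rises above a higher one (hysteresis), so the device does not flap between networks. The class name, thresholds and averaging window are hypothetical; real devices combine many more signals in proprietary ways, which is part of why tuning a threshold alone does not fully solve the problem.

```python
from collections import deque


class EarlySwitchPolicy:
    """Hypothetical RSSI-based Wi-Fi/cellular selector with hysteresis.

    Leaves Wi-Fi when the moving-average RSSI drops below leave_dbm and
    returns only once it rises above rejoin_dbm (rejoin_dbm > leave_dbm),
    so small fluctuations around one threshold do not cause flapping.
    """

    def __init__(self, leave_dbm: float = -72.0, rejoin_dbm: float = -65.0, window: int = 3):
        if rejoin_dbm <= leave_dbm:
            raise ValueError("rejoin_dbm must be above leave_dbm")
        self.leave_dbm = leave_dbm
        self.rejoin_dbm = rejoin_dbm
        self.samples = deque(maxlen=window)  # most recent RSSI readings
        self.on_wifi = True

    def update(self, rssi_dbm: float) -> str:
        """Feed one RSSI sample and return the selected network."""
        self.samples.append(rssi_dbm)
        avg = sum(self.samples) / len(self.samples)
        if self.on_wifi and avg < self.leave_dbm:
            self.on_wifi = False
        elif not self.on_wifi and avg > self.rejoin_dbm:
            self.on_wifi = True
        return "wifi" if self.on_wifi else "cellular"


if __name__ == "__main__":
    policy = EarlySwitchPolicy()
    for reading in (-60, -70, -80, -85, -60, -60, -60):
        print(reading, policy.update(reading))
```

Raising `leave_dbm` makes the device leave Wi-Fi earlier, trading more cellular use for fewer stalls; the gap between the two thresholds controls how readily it returns.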

CableLabs is actively engaging with all the industry stakeholders in an attempt to align on a common, streamlined solution aimed at enhancing the user experience. This will ensure we can address the challenges users face today during network transitions in a consistent manner, regardless of a user’s access network or device.

We are also working with industry vendors and standards organizations (like Wi-Fi Alliance) to test and develop solutions for truly seamless connections that offer reliable and consistent experiences for users. If your company is a mobile device vendor and you would like to engage with CableLabs in this work, contact us to learn how we can collaborate to achieve seamless connectivity.

To learn more about this work and the CableLabs working group tackling this problem, read our recent blog post, “Unlocking the Power of Seamless Connectivity.”

DOCSIS

Speed, Scale and Seamless Integration: Key Industry Firsts at DOCSIS 4.0 Interop

Key Points

- With CableLabs’ latest Interop·Labs event, DOCSIS® 4.0 interoperability has reached a new level of maturity as multiple vendors successfully demonstrated high-performance operation across modem components.

- A convergence of industry firsts during the event signaled real-world readiness for DOCSIS 4.0 deployment, proving that the technology is ready to deliver the performance, flexibility and vendor diversity we've been working toward.

The broadband industry is experiencing a transformative moment — one defined by unprecedented collaboration, groundbreaking technology and milestone achievements that set the tone for the future of connectivity. Today, we’re excited to highlight a convergence of firsts that represent significant strides in driving scalable, interoperable and high-performance broadband networks.

These advances were on full display at the latest Interop·Labs event, held June 9–12 at CableLabs’ headquarters in Louisville, Colorado. The event provided an opportunity for suppliers to verify interoperability between equipment designed to be compliant with the DOCSIS 4.0 specifications. The focus: speed and stability, two critical factors that directly impact the quality and reliability of broadband service.

A New Milestone: Uniting Innovation With Scale

In this blog post, we’ll walk through the key breakthroughs that made this interop a milestone event for DOCSIS 4.0 technology and the broadband industry as a whole.

1. 10 DOCSIS 4.0 Modem Suppliers

Interest in delivering modems into the DOCSIS technology ecosystem remains high, with 10 modem suppliers having attended a DOCSIS 4.0 interoperability event at CableLabs.

2. Three CCAP Core Suppliers Demonstrating Advanced Capabilities

In an industry first, three DOCSIS core suppliers attended the interop and demonstrated:

- Stable registration with multiple high-speed channels.

- Cable modem interoperability.

- High-speed throughput both upstream and downstream.

- Advanced service features.

The successful interoperation of multiple cores with the modems at the event further demonstrates that operators are no longer bound to a single core vendor solution. Instead, they can architect their networks based on performance, cost and feature alignment, without compromising on integration or scale.

3. Five RPD Suppliers Interoperating in a Single Network

Additionally, for the first time, five Remote PHY devices (RPD) were tested during the event and successfully achieved interoperability with the cores, reinforcing the maturity of the Distributed Access Architecture (DAA) ecosystem. This marks a major achievement for interoperability in a traditionally siloed space, where vendor lock-in could limit agility and innovation.

Each RPD supplier brought unique implementation characteristics to the table, ranging from management capabilities to different form factors. The cores not only handled these diverse devices gracefully but also maintained service performance and control plane consistency while achieving high-speed data delivery.

4. Achieving 14 Gbps DOCSIS Downstream Throughput

The fourth major milestone is a technological leap forward: 14 Gbps of downstream DOCSIS throughput achieved across a multi-vendor network. This achievement leverages the full potential of DOCSIS 4.0 technology, DAA, next-gen modulation (4096-QAM) and intelligent spectrum management.
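For a sense of what the modulation order contributes, each M-QAM symbol carries log2(M) bits, so 4096-QAM carries 12 bits per symbol versus 10 for 1024-QAM, a 20 percent raw gain per symbol before FEC and other overhead. A small helper makes the arithmetic explicit. The function names are illustrative, and the sketch says nothing about the higher signal-to-noise ratio that denser constellations require in practice.

```python
def bits_per_symbol(qam_order: int) -> int:
    """Bits carried by one M-QAM symbol; M must be a power of two >= 2."""
    if qam_order < 2 or qam_order & (qam_order - 1):
        raise ValueError("QAM order must be a power of two >= 2")
    return qam_order.bit_length() - 1  # exact log2 for powers of two


def relative_gain(new_order: int, old_order: int) -> float:
    """Raw per-symbol capacity gain of new_order over old_order (before FEC/overhead)."""
    return bits_per_symbol(new_order) / bits_per_symbol(old_order) - 1.0


for m in (256, 1024, 4096):
    print(f"{m}-QAM: {bits_per_symbol(m)} bits/symbol")
print(f"4096-QAM vs 1024-QAM: +{relative_gain(4096, 1024):.0%} raw bits per symbol")
```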

This bandwidth benchmark isn't just a speed milestone — it’s a validation of the DOCSIS roadmap, demonstrating that hybrid fiber coax (HFC) infrastructure remains a future-ready platform that can compete with fiber on both performance and longevity.

Why These Firsts Matter

These achievements go beyond engineering milestones — they signal a new era of openness and agility in broadband infrastructure. For operators, this means:

- Faster innovation cycles enabled by multi-supplier interoperability.

- Reduced operational risk via interoperable fallback options.

- Future-proof investments in scalable architectures like DAA and DOCSIS 4.0 technology.

For consumers and businesses, it translates to more reliable service, higher speeds and faster deployments of next-generation broadband.

Participants test their equipment for interoperability, with a focus on speed and stability, during the June 2025 event at CableLabs.

Key Contributors and Participants

Attendance at the event was strong, with new suppliers, new products and three operators on hand to observe demos and share their DOCSIS 4.0 network progress.

Suppliers included CommScope, Harmonic and Vecima, who brought DOCSIS 4.0 cores to the event. For the first time, we saw five Remote PHY Device (RPD) platforms, from Calian, CommScope, Harmonic, Teleste and Vecima. Eight DOCSIS 4.0 modem suppliers in attendance (Arcadyan, Askey, Compal, Gemtek, Hitron, Sagemcom, Sercomm and Ubee) brought multiple cable modem models.

Participating in the event were chipmakers Broadcom and MaxLinear, who brought local engineering support for their cable modem partners. Calian participated with its test solutions, and Microchip brought its clock and timing system.

Testing combined virtual cores, RPDs and modems from different vendors to verify performance and cross-compatibility. DOCSIS 3.1 and 4.0 devices were once again integrated to showcase interoperability between legacy and next-gen technology. Suppliers providing test equipment used these setups to verify their solutions.

Looking Ahead

This confluence of industry firsts is not an endpoint, but a foundation. With successful demonstrations of interoperability, performance and scalability, our industry is entering a period where the promise of next-gen broadband becomes reality — not just in labs, but in communities around the world.

The future is open, fast and here — and we couldn’t be more excited to play a part in building it.

Upcoming Events

The next phase of broadband promises faster speeds, greater reliability and seamless integration across today’s HFC networks. Another DOCSIS 4.0 interop is planned in August at CableLabs. Consider joining us to explore the possibilities firsthand.

Also, registration is now open for SCTE TechExpo25, happening Sept. 29–Oct. 1, 2025, in Washington, D.C. The theme of the industry-leading annual event is “Network (R)Evolution: Delivering the Seamless Experience.” Join us to explore how operators can thrive amidst the transformation across wireline, wireless and converged networks. CableLabs members are eligible to receive a complimentary full-access pass to SCTE TechExpo.

Wired

From If to When: What’s Next for Coherent PON?

Key Points

- The perception of coherent passive optical network (CPON) technology has evolved from if to when and how, with momentum accelerating throughout the broadband industry.

- Through collaborative development and industry-wide engagement, CPON is positioned to become a foundational technology for scalable, high-performance broadband networks of the future.

There are a lot of reasons to attend an industry conference. To name just a few, events like SCTE TechExpo or the recent CableLabs Winter Conference provide opportunities to:

- Learn about the latest technology research.

- Discover emerging products.

- Network with colleagues.

- Identify technology trends.

The last of these was very much evident at this year’s Optical Fiber Communications (OFC) conference, the world's leading event for fiber optic technologies, where there was a palpable shift in how CPON technology is being viewed. In past years, much of the conversation centered on whether coherent optics technology could viably and practically be applied to passive optical networks. This year, it was much more about when the technology would come to market and how to make it cost-effective.

What was once a forward-looking vision is evolving into a real-world opportunity with long-term potential.

CPON, a mainstay in CableLabs’ optical fiber portfolio, is entering a new, more active phase of industry planning. Network operators are exploring opportunities to implement it sooner rather than later, and the broader ecosystem — including vendors and standards bodies — is also signaling interest.

We’ve often seen the technology talked about in the context of long-term evolution, but it also holds the potential to solve immediate challenges for operators. With speed no longer a key service differentiator, providers are tasked with delivering superior online experiences and services that adapt to the needs of the user.

CPON technology contributes to experience and adaptability by enabling:

- Lower latency.

- Streamlined integration with existing architectures.

- Simplified, cost-effective capacity scaling.

- Reduced operational complexity.

These capabilities parallel the Technology Vision for the industry, which emphasizes competitiveness, scale and alignment across the ecosystem while also cultivating next-generation technology solutions.

What Is Coherent PON, and How Did We Get Here?

Amid ever-increasing demands for a faster, more reliable exchange of data, PON technologies remain one of the dominant architectures capable of meeting that growth. Existing PON technologies transmit data over fiber optic cables using a technique known as Intensity Modulation–Direct Detection (IM-DD), which has proven to be a simple, cost-effective means of supporting multiple users over a shared optical distribution network (ODN).

However, there’s a problem. As speeds ramp up, IM-DD-based PON solutions consume more power, require more complex signal processing, face more limitations on operating frequencies and can reach only shorter distances. As capacity increases, IM-DD is no longer the simple, cost-effective solution it once was.

So, what’s the alternative?

Coherent optics is an advanced fiber optic technology, which uses coherent modulation and detection to transmit more data for a given unit of time with greater sensitivity over longer distances than comparable IM-DD solutions. Coherent optics has long been used for point-to-point connections in submarine, long-haul and metro networks. More recently, it has begun to be applied to the access network as well, driven in part by work done by CableLabs to demonstrate that it can be a practical, cost-effective solution with a wide range of benefits.

CPON, for the first time, applies coherent optics technology to PON, resulting in a whole host of benefits.

Compared with traditional PON technologies, CPON solutions enable data transmission rates starting at 100 Gbps upstream and downstream — with the ability to easily scale to even higher capacities — while covering greater distances with better signal quality. This combination allows CPON systems to support more users and services on the same network.
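One way to see why coherent detection scales more gracefully is to compare the symbol rate each modulation format needs for a given line rate. IM-DD formats such as NRZ or PAM4 use amplitude in a single polarization, while coherent formats use amplitude and phase on two polarizations, so they pack more bits into each symbol. The sketch ignores FEC and framing overhead, and the formats listed are common examples rather than what any CPON specification mandates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Format:
    name: str
    bits_per_symbol_per_pol: int  # bits per symbol in one polarization
    polarizations: int            # 1 for IM-DD, 2 for dual-polarization coherent

    @property
    def bits_per_symbol(self) -> int:
        return self.bits_per_symbol_per_pol * self.polarizations


def required_baud_gbd(line_rate_gbps: float, fmt: Format) -> float:
    """Symbol rate (GBd) needed for a line rate, ignoring FEC and framing overhead."""
    return line_rate_gbps / fmt.bits_per_symbol


FORMATS = [
    Format("NRZ/OOK (IM-DD)", 1, 1),
    Format("PAM4 (IM-DD)", 2, 1),
    Format("DP-QPSK (coherent)", 2, 2),
    Format("DP-16QAM (coherent)", 4, 2),
]

for f in FORMATS:
    print(f"{f.name:22s} {required_baud_gbd(100, f):6.1f} GBd for 100 Gbps")
```

A lower symbol rate relaxes the bandwidth demands on lasers, modulators and receiver electronics, which is one reason higher-order coherent formats are attractive as line rates climb.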

More than simply an upgrade in speed and capacity, CPON technology also enables a shift in how networks are built. With expanded, scalable capacity and ultra-low latency, CPON technology broadens the range of services those networks can provide, giving operators a foundation for scalable service delivery across increasingly complex network architectures. As operators shift their network strategies to include a mix of technologies such as hybrid fiber coax (HFC), fiber, wireless and even satellite, the technology is emerging as a key enabler of unified, flexible architectures.

Coherent PON on the World Stage

While CPON has long been a priority within CableLabs’ optical strategy, it’s exciting to see the broader industry begin to take notice, too.

As we mentioned earlier, the energy around CPON was especially visible this year in San Francisco at OFC 2025. We saw a significant uptick in technical contributions related to coherent technology, providing further proof that momentum is building beyond CableLabs’ own efforts. Multiple sessions, presentations and demos focused on coherent optics and access network applications, drawing particular interest from network operators considering new avenues to rethink network design and service delivery.

The event made it clear that coherent technology has entered mainstream planning discussions for PON. The increase in engagement signals a growing recognition that CPON is becoming a necessary component of next-generation broadband strategy. More importantly, it demonstrates the industry’s collective commitment to moving the technology forward.

Additionally, even as CPON gains more attention from the broader industry, CableLabs continues to serve as a leader in advancing this technology. At OFC 2025, our CableLabs working group participants were particularly productive, covering next-gen PON solutions generally and CPON technology and solutions specifically in several engaging talks (both invited and contributed) as well as panel discussions. Here are some of the highlights from the PON-related contributions presented by our working group collaborators:

- Single-Laser BiDirectional Coherent PON: a Hybrid SC/DSC Architecture for Flexible and Cost-Efficient Optical Access Networks — Showcased a novel single-laser BiDirectional CPON capability featuring hybrid single-carrier and dual-subcarrier support.

- Low-Complexity 100G Burst-Mode TDMA-CPON Transmission Achieving 38 dB Link Budget — Experimentally demonstrated practical 100 Gbps burst-mode coherent transmission and reception without optical amplification.

- Robust Colorless Coherent Receiver for Next-Generation PONs: Coexistence With Legacy Systems and Multi-Wavelength Operation — Explored a colorless coherent receiver designed for next-generation PONs, emphasizing its ability to coexist with legacy PONs and support multi-wavelength operation.

- Crafting Fiber Access Networks for Service Excellence Assurance — Provided in-depth analysis and insights into the evolving landscape of fiber access networks.

- Harmony From Chaos: Orchestrating an Interoperable Ecosystem for the Future of PON — Explored standards and strategies for streamlined, future-ready deployment and management across diverse vendor systems.

- Out of the Darkness: A Sneak Peek at CableLabs’ CPON Specifications — Covered how CPON is shifting from feasibility to implementation.
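The 38 dB figure in one of those talk titles is a link budget: the total optical loss a link can tolerate between transmitter and receiver. The following back-of-the-envelope sketch shows how such a budget trades split ratio against reach. Only the 38 dB value comes from the title; the ideal splitter loss of 10·log10(N), the 2 dB excess loss, the 3 dB margin and the 0.35 dB/km fiber attenuation are illustrative assumptions, and the function names are hypothetical.

```python
import math


def splitter_loss_db(split_ratio: int, excess_db: float = 0.0) -> float:
    """Ideal 1:N power-splitter loss (10*log10(N)) plus optional excess loss."""
    return 10 * math.log10(split_ratio) + excess_db


def max_reach_km(budget_db: float, split_ratio: int, fiber_db_per_km: float,
                 excess_db: float = 0.0, margin_db: float = 0.0) -> float:
    """Longest fiber run that fits in budget_db after split loss and margin."""
    remaining = budget_db - splitter_loss_db(split_ratio, excess_db) - margin_db
    return max(remaining, 0.0) / fiber_db_per_km


# 38 dB is the budget quoted in the talk title; everything else is assumed.
for n in (32, 64, 128, 256):
    reach = max_reach_km(38.0, n, fiber_db_per_km=0.35, excess_db=2.0, margin_db=3.0)
    print(f"1:{n:<3d} split -> ~{reach:.0f} km")
```

Each doubling of the split ratio costs about 3 dB, so a larger budget can be spent either on serving more users per fiber or on longer reach.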

Accelerating Adoption Through Collaboration

Together with our member operators and vendor community, we’re actively shaping the direction of CPON technology. A CableLabs-led working group continues to advance this effort, with a shared goal of moving CPON from specification to broader adoption. Our work to develop and refine the suite of specifications is ongoing, and we expect to publicly issue the full suite by the end of 2025.

These working groups play a critical role in building a healthy, collaborative broadband ecosystem: ensuring that resulting solutions align with the real-world needs of both operators and end users, and can be developed and built by manufacturers at a reasonable cost. If you’re a CableLabs member or vendor interested in contributing to this effort, we encourage you to learn more about our working groups and how you can get involved.

Continuing this shared development and ecosystem-wide collaboration is critical, especially as the suite of specifications moves closer to public release and industry interest ramps up.

As CPON systems move closer to widespread implementation, this collaborative model will be key to the technology’s success. More than a next-gen PON technology milestone, CPON is a foundation for seamless, scalable connectivity, enabling a faster, more responsive and more reliable broadband experience for the future.

Policy

Wi-Fi Spectrum: 6 GHz Use Is Surging and Headed Toward Exhaustion

Key Points

- A new Wi-Fi network use analysis from CableLabs shows that current unlicensed spectrum won’t be able to keep pace with the demands of a growing number of connected devices over the next five years.

- Exhaustion of the 6 GHz band is nearing as the growing number of Wi-Fi devices and applications strain current unlicensed spectrum.

- If not addressed, the strain on unlicensed spectrum will significantly degrade Wi-Fi performance for consumers in high-density environments.

- Policymakers should act now to ensure Wi-Fi connectivity for all Americans.

As U.S. and global policymakers debate the future of spectrum policy, CableLabs is releasing initial results of our Wi-Fi network use analysis, which further confirms the critical need to keep the current unlicensed spectrum resources and add more soon.

In short, the 6 GHz band — the key frequency band for Wi-Fi, alongside the legacy 2.4 GHz and 5 GHz bands — is experiencing explosive growth and rapid adoption that is expected to significantly accelerate this year. As a result of the fast-paced growth of consumer data and device demands expected over the next five years, exhaustion of the 6 GHz band is quickly approaching in high-density environments.

Reallocating 6 GHz Spectrum Would Decimate Wi-Fi Connectivity

Without more unlicensed spectrum in the pipeline, full utilization of existing Wi-Fi spectrum will result in degraded performance of applications and services that rely on Wi-Fi as the workhorse of modern connectivity.

Any proposals to reduce or repurpose 6 GHz unlicensed spectrum would be devastating to Wi-Fi performance and seriously detrimental to consumers, U.S.-based device manufacturers, and other businesses that expect and depend on reliable connectivity. Failing to assign more unlicensed spectrum to support the growing use of Wi-Fi will cause consumers to experience degraded Wi-Fi performance.

These conclusions are based on a rigorous network simulation of a multi-story residential environment, using a highly capable modeling tool known as NS-3 that incorporates the specific parameters of Wi-Fi technology and user behavior. This analysis builds on CableLabs’ prior work to articulate the need for additional Wi-Fi spectrum, which we’ve explored in two recent blog posts:

- The Near Future Requires Additional Unlicensed Spectrum (February 2025)

- The Case for Additional Unlicensed Spectrum (March 2024)

This work now goes even further to model and analyze the full Wi-Fi environment in a multi-story residential building where a high number of client devices, users and networks operate in close proximity.

Specifically, the study modeled a 12-story residential building (for example, an apartment or condo building) with 12 units per floor. We included every 6 GHz Wi-Fi access point and active client device (e.g., smartphones, laptops, tablets, TVs and other connected devices) in the building. In the simulation, the full 6 GHz band is used, and channels and channel bandwidths were assigned to each unit randomly, with the constraint that adjacent units were never placed on the same channel. The starting point for device and traffic growth was based on a distribution of today’s typical connected homes.
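The channel-planning constraint described above can be sketched as a randomized greedy assignment over a 12-floor by 12-unit grid. This is an illustration, not the study's NS-3 configuration. It assumes the units on each floor sit in a single row, that a unit's neighbors are the units beside it and directly above and below it, and that channels are drawn only from the 14 non-overlapping 80 MHz channels in the U.S. 6 GHz band, whereas the study also randomized channel bandwidth. All names are hypothetical.

```python
import random

FLOORS = 12
UNITS_PER_FLOOR = 12
# Hypothetical channel pool: the 14 non-overlapping 80 MHz channels in the
# U.S. 6 GHz band, by center channel number (7, 23, ..., 215).
CHANNELS_80MHZ = list(range(7, 216, 16))


def neighbors(floor: int, pos: int) -> list[tuple[int, int]]:
    """Units sharing a wall, floor or ceiling (assumed adjacency model)."""
    cand = [(floor, pos - 1), (floor, pos + 1), (floor - 1, pos), (floor + 1, pos)]
    return [(f, p) for f, p in cand if 0 <= f < FLOORS and 0 <= p < UNITS_PER_FLOOR]


def assign_channels(seed: int = 0) -> dict[tuple[int, int], int]:
    """Randomly assign a channel to every unit so no adjacent units share one."""
    rng = random.Random(seed)
    plan = {}
    for floor in range(FLOORS):
        for pos in range(UNITS_PER_FLOOR):
            taken = {plan[n] for n in neighbors(floor, pos) if n in plan}
            plan[(floor, pos)] = rng.choice([c for c in CHANNELS_80MHZ if c not in taken])
    return plan


def is_valid(plan: dict[tuple[int, int], int]) -> bool:
    """Check that no unit shares a channel with any adjacent unit."""
    return all(plan[u] != plan[n] for u in plan for n in neighbors(*u))


if __name__ == "__main__":
    plan = assign_channels(seed=42)
    print(len(plan), "units, valid:", is_valid(plan))
```

Because each unit has at most two already-assigned neighbors when it is visited (the unit beside it and the one below), the 14-channel pool can never run out of choices.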

The study then increased both the number of devices and peak traffic over the coming years in line with industry projections.

The Simulation in Action

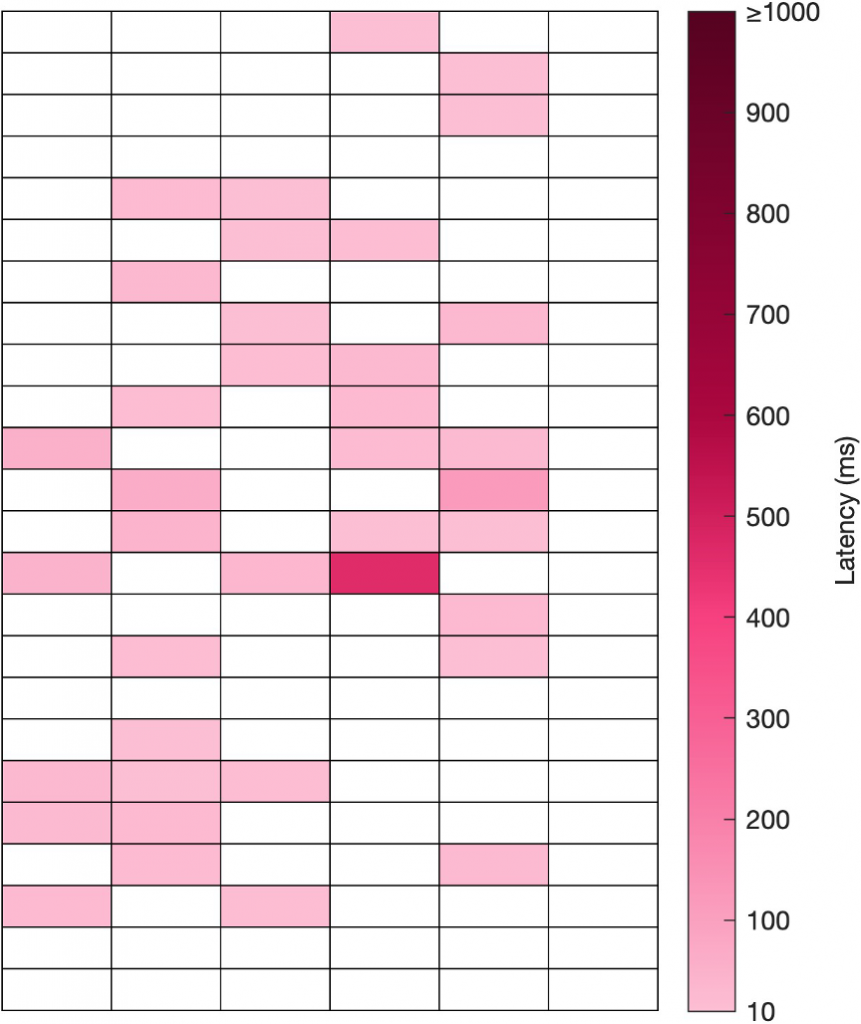

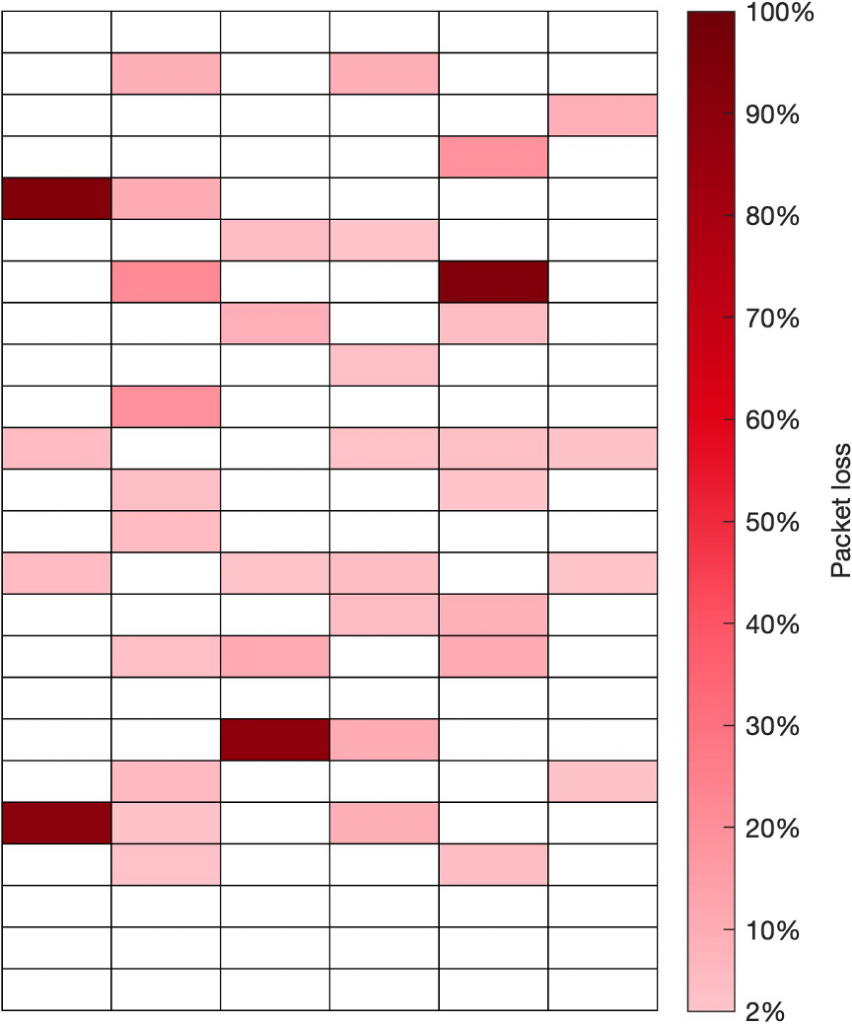

To identify Wi-Fi spectrum exhaustion, the study analyzed the key indicators of latency (transmission delay) and packet loss (lost data) across every connected device in each residential unit during periods when Wi-Fi activity is highest. Latency is a strong predictor of service quality and user experience for many popular and essential applications, including real-time communications like FaceTime or Zoom, media streaming, online gaming and home security. Packet loss impairs all types of applications and is correlated with connection unreliability and congestion.

The results of the simulation show that consumers in dense residential environments are likely to experience widespread and significant Wi-Fi performance degradation, indicating near-term spectrum exhaustion based on growing demand.

In particular, the study examined the Wi-Fi performance within the 12-story residential building based on five years of growing Wi-Fi demand. In this scenario, consumers in roughly 30 percent of the simulated building experience increased one-way Wi-Fi latency — greater than 10 milliseconds (ms) — and packet loss of 2 percent or more. As latency and packet loss exceed these thresholds, the resident’s quality of experience will begin to degrade, particularly for real-time applications, such as video calling, that are most sensitive to Wi-Fi performance. As latency and packet loss further increase, even non-real-time applications, such as media streaming, will begin to fail.

Figures 1 and 2 are abstractions of the 144-unit building. Each rectangle represents a unit in the building. Figure 1 shows the specific units, their relative location in the building and the amount of latency for at least one 6 GHz client device in the unit after five years of growth in client devices and peak traffic. Figure 2 shows the same for packet loss. The variation in latency and packet loss across the building is a function of the complex interactions between devices within the unit and across units, varying numbers of client devices and amounts of peak traffic in various units, and the variation in Wi-Fi signal propagation and contention within and across units.
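The per-unit view in the figures amounts to checking each client's results against the two thresholds quoted earlier (one-way latency greater than 10 ms, packet loss of 2 percent or more) and rolling them up by unit. A minimal sketch, assuming hypothetical per-client records and reading "degraded" as a unit where at least one client exceeds the latency threshold and at least one client reaches the loss threshold; the study's exact aggregation rule may differ.

```python
from dataclasses import dataclass

LATENCY_LIMIT_MS = 10.0  # one-way latency threshold quoted in the post
LOSS_LIMIT = 0.02        # packet-loss threshold quoted in the post (2 percent)


@dataclass
class ClientResult:
    latency_ms: float  # one-way Wi-Fi latency during peak activity
    loss: float        # packet-loss ratio, 0.0 to 1.0


def unit_flags(clients: list[ClientResult]) -> tuple[bool, bool]:
    """Per-unit flags: (any client over latency limit, any client at/over loss limit)."""
    high_latency = any(c.latency_ms > LATENCY_LIMIT_MS for c in clients)
    high_loss = any(c.loss >= LOSS_LIMIT for c in clients)
    return high_latency, high_loss


def share_degraded(building: dict[str, list[ClientResult]]) -> float:
    """Fraction of units where both flags are set (our reading of 'degraded')."""
    if not building:
        return 0.0
    hits = sum(1 for clients in building.values() if all(unit_flags(clients)))
    return hits / len(building)
```

Keeping the two flags separate mirrors the figures, which map latency and packet loss independently, while `share_degraded` combines them into a single building-wide percentage.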

The initial results demonstrate how essential 6 GHz spectrum is to maintain Wi-Fi’s reliability and performance for American consumers and businesses. In addition, the findings underscore the need for policymakers to allocate more unlicensed spectrum. A failure to act would undermine the reliable Wi-Fi connectivity that enables American consumers and businesses to access high-speed broadband.

CableLabs is developing a white paper to share the detailed technical analysis summarized above, including the underlying methodology.

Subscribe to the CableLabs blog to stay updated on this critical work to future-proof our networks.

Fiber

Driving Alignment: New Progress Toward XGS-PON Equipment Interoperability

Key Points

- A recent CableLabs PON Interop·Labs event brought together OLT and ONU suppliers to test device interoperability and exercise the requirements of the Cable OpenOMCI specification.

- Testing helps equipment manufacturers ensure interoperability among their devices — key to building a healthy, collaborative ecosystem.

With more and more CableLabs member operators deploying or preparing to deploy ITU-T-based passive optical networking (PON) technologies such as XGS-PON, interoperability of equipment from different vendors is more important than ever. One well-known cause of poor cross-vendor interoperability in XGS-PON equipment is differing implementations of the ONU Management and Control Interface (OMCI), which is primarily specified by ITU-T Recommendation G.988.

Last year, the CableLabs Common Provisioning and Management of PON (CPMP) working group set out to close some of the gaps in G.988 by publishing the first version of the Cable OpenOMCI specification. This specification aims to enumerate the set of management elements from G.988 that are most important to CableLabs member operators and clarify how those elements must be supported in XGS-PON equipment intended for sale to those operators.

At a recent XGS-PON Interop·Labs event at CableLabs, multiple suppliers of PON optical line terminal (OLT) and PON optical network unit (ONU) equipment exercised their gear’s ability to interoperate. It was the industry’s first opportunity to exercise equipment implementations conformant to the requirements defined in the Cable OpenOMCI specification.

But the event, held April 28–May 1 in our Colorado labs, exercised more than just OMCI interoperability. It also included a continuation of the DOCSIS Provisioning of XGS-PON config file interoperability testing, first initiated during our February interop. Please see my blog post from March for a deeper description of the concept of DOCSIS Provisioning of XGS-PON and the scope of that event.

Supplier Participation in the XGS-PON Interop

Interoperability events enable participants to collaborate and problem-solve on specific technologies and goals. The participation of vendors is critical to advancing technology solutions for the entire industry.

Participants at our April Interop·Labs event included XGS-PON OLT suppliers — showcasing their OMCI and DOCSIS Adaptation Layer (DAL) implementations — as well as XGS-PON ONU suppliers, who showcased the OMCI aspects of their ONUs.

These XGS-PON OLT suppliers included Calix, Ciena and Nokia. In particular, Calix tested using their E7-2 OLT and DPx DAL. Ciena brought their Tibit MicroPlug OLT, MCMS controller and DAL system. And Nokia tested using their Lightspan MF-2 OLT and Altiplano controller. While the primary focus of the event was on XGS-PON technology, Ciena also brought their pre-production 25GS-PON OLT and 25GS-PON ONU, and demonstrated DOCSIS provisioning of that equipment, as well as traffic forwarding through it.

In addition to the OLT supplier participants, XGS-PON ONU suppliers in attendance included Askey, Cambridge Industries Group, Hitron, MaxLinear, Sagemcom and Sercomm. While Sagemcom brought an ONU embedded in a residential gateway, most suppliers brought bridging ONUs. Numerous ONUs from additional suppliers (including Calix, Ciena/Tibit and Nokia) were also on hand for interested OLT vendors to test with their OLT and DAL implementations.

The XGS-PON OMCI Test Cases

The OMCI test plan executed during the event was based on the requirements defined in the I01 version of the Cable OpenOMCI specification. Interested OLT and ONU suppliers met over the course of several weeks prior to the event to define the test cases that would be included in the interop test plan.

Following the general contents of the Cable OpenOMCI specification, the following test cases were defined:

- The MIB upload component of OMCI configuration management

- Create and get methods of OMCI configuration management

- Performance monitoring via OMCI

- ONU software image download

- ONU software image activation

During the first three test cases, the lab’s 100GE traffic generation system was used to transmit and receive traffic via a given OLT and ONU combination under test. And during each of the five test cases, an XGS-PON analyzer or OMCI debug capabilities of the OLT were used to capture and examine the message exchanges between the ONU and OLT. One participating OLT vendor indicated that, during the event, they tested with 12 different ONU models, inspecting close to 100 OMCI management elements for each ONU.
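To make the message-capture step more concrete, here is a minimal Python sketch of how a captured baseline-format OMCI frame could be decoded and tagged with the test case it exercises. It assumes the 48-byte baseline message layout and the message-type codes from ITU-T G.988. The grouping of message types into the five test cases, and the names `parse_baseline`, `OmciHeader` and `TEST_CASE`, are our own illustrative assumptions. They are not part of the Cable OpenOMCI specification or of any analyzer's API.

```python
from dataclasses import dataclass

# Message-type (MT) codes from ITU-T G.988 (subset relevant to the test plan).
MSG_TYPES = {
    4: "Create", 6: "Delete", 8: "Set", 9: "Get",
    13: "MIB upload", 14: "MIB upload next", 15: "MIB reset",
    19: "Start software download", 20: "Download section",
    21: "End software download", 22: "Activate software",
    23: "Commit software", 28: "Get current data",
}

# Assumed, rough grouping into the interop test cases. Note that plain Get is
# also used to read PM history data MEs, so real classification needs ME context.
TEST_CASE = {
    "MIB upload": "MIB upload", "MIB upload next": "MIB upload",
    "Create": "Create/get", "Get": "Create/get",
    "Get current data": "Performance monitoring",
    "Start software download": "Image download",
    "Download section": "Image download",
    "End software download": "Image download",
    "Activate software": "Image activation",
    "Commit software": "Image activation",
}

BASELINE_DEVICE_ID = 0x0A  # 0x0B would indicate the extended message set
BASELINE_LEN = 48          # header (8) + contents (32) + trailer (8)


@dataclass
class OmciHeader:
    tci: int          # transaction correlation identifier
    ar: bool          # acknowledge request bit
    ak: bool          # acknowledgement bit
    msg_type: str
    me_class: int     # managed entity class
    me_instance: int  # managed entity instance
    test_case: str


def parse_baseline(frame: bytes) -> OmciHeader:
    """Decode the header of one baseline-format OMCI message."""
    if len(frame) != BASELINE_LEN:
        raise ValueError(f"baseline OMCI frame must be {BASELINE_LEN} bytes")
    if frame[3] != BASELINE_DEVICE_ID:
        raise ValueError("not a baseline-format OMCI message")
    mt_byte = frame[2]       # bit 8: DB, bit 7: AR, bit 6: AK, bits 5-1: MT
    code = mt_byte & 0x1F
    name = MSG_TYPES.get(code, f"MT {code}")
    return OmciHeader(
        tci=int.from_bytes(frame[0:2], "big"),
        ar=bool(mt_byte & 0x40),
        ak=bool(mt_byte & 0x20),
        msg_type=name,
        me_class=int.from_bytes(frame[4:6], "big"),
        me_instance=int.from_bytes(frame[6:8], "big"),
        test_case=TEST_CASE.get(name, "other"),
    )


# Example: an OLT's MIB upload request (AR bit set) to the ONU data ME (class 2).
frame = bytes.fromhex("00014d0a00020000") + bytes(40)
print(parse_baseline(frame))
```

Extended-format messages (device identifier 0x0B) use a variable-length layout and would need a separate parser.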

The results of the interop testing were encouraging and showed that the participating suppliers have already begun implementing the requirements of the Cable OpenOMCI specification in their software. As expected, the testing also uncovered additional OMCI interoperability issues. Those issues will inform the next set of work items for the CPMP working group to tackle.

New Issues Bring New Fixes

With a wide array of OLT and ONU vendor implementations on hand at the event, new issues were bound to be discovered — which is exactly why we hold interoperability events.

Now, the CPMP working group will discuss our findings and prioritize solutions for them via an engineering change process to the Cable OpenOMCI specification. Fixes for the simpler issues will likely be included in the upcoming release of the I02 version of the spec. More complex issues may take more time for the working group to solve and will therefore be addressed in a later version of the spec.

Join Us Next Time

CableLabs is planning two more PON Interop·Labs events this year, with the next event scheduled for the week of Aug. 4. Stay tuned for more details.

The August event will provide an opportunity for OLT and ONU suppliers to return to test interoperability based on compliance with an anticipated I02 version of the Cable OpenOMCI specification. We also welcome OLT suppliers to return to exercise their DAL solutions for ONU and config file interoperability.

Innovation

Preparing the Industry for the Next Era of Broadband Innovation

Key Points

- CableLabs helps shape the future of connectivity and advance the technologies that will define it by empowering the people who make it possible.

- Together with SCTE, our member operators and other industry stakeholders, we can build stronger foundations for tomorrow’s networks by investing in collaboration, community and continuous innovation.

The broadband industry is only as strong as the people who power it.

At CableLabs, we believe that innovation isn’t driven by technology alone — it’s driven by the people behind it. That’s why we’re focused on strengthening the industry through three key areas: collaboration, connection and preparation. By creating environments where people can exchange knowledge, engage with peers and accelerate skill development, we’re not just preparing for the future — we’re actively shaping it.

Together with our subsidiary, SCTE, we build our efforts around:

- Collaborating to solve industry-wide challenges

- Connecting experts, engineers and leaders

- Preparing the future workforce at every level

Through these pillars, groundbreaking ideas become real solutions — and the people who bring them to life are empowered to thrive.

Collaborating to Solve the Industry’s Toughest Challenges

From idea to impact, collaboration is at the core of everything we do.

We know that innovation is a team effort. CableLabs’ working groups bring together member operators, vendors and industry peers to align on challenges and develop real-world solutions. Whether it's advancing DOCSIS® technology through hands-on collaboration in our Interop·Labs events or shaping network evolution strategies across our innovation ecosystem, every breakthrough starts with the right people in the room.

This commitment to collaboration is reflected in efforts like the Technology Vision for the industry, which reframes broadband innovation around user experience — and the shared work it takes to deliver it. You can also see it in action through initiatives like our Interop·Labs events, where engineers and vendors test, refine and accelerate the development of new technologies together.

All of this is backed by the work happening inside our labs, where real-world testing environments make it possible to move from concept to deployment — with speed and precision.

This collaborative spirit is what enables solutions to scale. Collaboration within the broadband ecosystem helps align the industry and drive progress — ultimately allowing operators and vendors to accomplish more together. It’s essential that we create impact together by turning shared challenges into real-world solutions. We invite our members and the vendor community to engage with us and one another through CableLabs working groups, SCTE Standards, and a host of interoperability and industry events.

Connecting Industry Leaders

Innovation accelerates when people come together.

The broadband industry moves forward when people have the space to connect, exchange ideas and learn from one another. At CableLabs, we create opportunities — both in person and online — for members to engage in meaningful conversations that drive the industry ahead.

Events like the CableLabs Winter Conference 2025 bring leaders together to align on priorities and explore the technologies shaping tomorrow’s networks. Our ongoing webinars and virtual events offer flexible, accessible ways to stay informed and engaged throughout the year.

You’ll often see CableLabs at industry events — whether we’re attending, speaking or connecting with peers across the ecosystem. At CES 2025, President and CEO Phil McKinney explored how major trends like AI and immersive experiences are shaping the future of connectivity — and the key role the broadband industry will play in delivering those experiences. Catch the key takeaways in this recap.

Whether face-to-face or virtual, every event is a chance to learn and build the relationships that move innovation forward.

Preparing the Future Workforce

The future of broadband depends on the people who build it.

Sustaining innovation means preparing the next generation of broadband professionals — and supporting those already in the field. CableLabs and our subsidiary, SCTE, are committed to empowering these rising professionals through meaningful on-the-job experiences that help them build the skills, confidence and connections they need to thrive in the industry.

To cultivate future technology leaders, CableLabs’ summer internship program welcomes driven and talented university students to gain real-world experience at our Colorado headquarters and contribute directly to industry innovation. At the high school level, our support for programs like P-TECH is helping underserved local students gain hands-on STEM experience and mentorship.

We’re also proud to collaborate with SCTE, which plays a vital role in equipping engineers and technicians with the certifications, standards and skills they need to meet the challenges of today’s networks — and tomorrow’s.

By investing in education, mentorship and continuous training, we’re helping ensure the broadband workforce is ready for what’s next.

Looking Ahead

Collaboration, connection and preparation are foundational to the success of the broadband industry. By investing in people and creating opportunities to work and grow together, we’re not only getting ready for what’s next; we’re helping lead the way.

We look forward to building the future — together.

Wireless

CableLabs and Industry Leaders Unite to Advance Open AFC Solution

Key Points

- In collaboration with industry partners, CableLabs is helping continue the development of the Open Automated Frequency Coordination (AFC) solution.

- The open-source AFC solution supports Standard Power operation for unlicensed devices in the 6GHz band.

CableLabs is proud to partner with Broadcom, Cisco, the Wireless Broadband Alliance (WBA) and the Wi-Fi Alliance (WFA) to offer an open-source version of the Open Automated Frequency Coordination (AFC) solution.

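This platform enables unlicensed devices to operate as Standard Power (SP) devices within the recently opened 6GHz band. SP devices are permitted to transmit at higher power than low-power indoor devices operating in the same band; in return, they must obtain the frequencies and power levels available at their location from an AFC system, which protects incumbent licensed users. While the most common unlicensed technology is Wi-Fi, the AFC can also support other wireless technologies.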
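To illustrate what "higher power" means in practice, the Python sketch below applies the FCC's 6 GHz access-point limits (36 dBm EIRP and 23 dBm/MHz PSD for Standard Power; 30 dBm EIRP and 5 dBm/MHz PSD for low-power indoor) to a channel of a given width. The grant format here, a list of frequency ranges each with a maximum PSD, is a simplified, hypothetical stand-in for a real AFC response, and `sp_eirp_for_channel` conservatively applies the most restrictive PSD found anywhere in the channel.

```python
import math
from typing import Optional, Sequence, Tuple

# FCC 6 GHz access-point limits (47 CFR 15.407).
SP_EIRP_CAP, SP_PSD_CAP = 36.0, 23.0    # Standard Power: dBm EIRP, dBm/MHz
LPI_EIRP_CAP, LPI_PSD_CAP = 30.0, 5.0   # Low-power indoor: dBm EIRP, dBm/MHz

# Hypothetical AFC grant: (low_mhz, high_mhz, max_psd_dbm_per_mhz) ranges,
# assumed not to overlap one another.
Grant = Sequence[Tuple[float, float, float]]


def eirp_limit(psd: float, bw_mhz: float, cap: float) -> float:
    """EIRP allowed by a PSD limit spread over bw_mhz, clipped to the absolute cap."""
    return min(psd + 10 * math.log10(bw_mhz), cap)


def sp_eirp_for_channel(grant: Grant, low: float, high: float) -> Optional[float]:
    """Max Standard Power EIRP for channel [low, high] MHz under an AFC grant.

    Returns None if any part of the channel is not covered by the grant.
    """
    covered = 0.0
    worst_psd = SP_PSD_CAP
    for g_low, g_high, g_psd in grant:
        overlap = min(high, g_high) - max(low, g_low)
        if overlap > 0:
            covered += overlap
            worst_psd = min(worst_psd, g_psd)  # most restrictive PSD wins
    if covered < high - low:
        return None  # channel not fully available: SP operation not allowed
    return eirp_limit(worst_psd, high - low, SP_EIRP_CAP)
```

With a hypothetical grant of `[(5925, 6105, 23.0), (6105, 6425, 17.0)]`, a 20 MHz channel at 5945 to 5965 MHz reaches the full 36 dBm cap, a 20 MHz channel at 6185 to 6205 MHz is limited to about 30 dBm, and a channel outside the granted ranges returns `None`. A low-power indoor device on a 20 MHz channel, by comparison, is limited to about 18 dBm by its 5 dBm/MHz PSD.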

What Is the Open AFC Project?

This partnership, called the Open AFC Project, focuses on maintaining an open-source version of the AFC. The goal is to provide a foundation for new AFC vendors to enter the ecosystem and to offer a platform for global regulators to further explore expanding shared spectrum in their regions. The Open AFC open-source platform originated from the Open AFC work under the Telecom Infra Project (TIP).

In March 2024, TIP announced the retirement of the Open Automated Frequency Coordination Software Group, which had been tasked with developing a platform to enable Standard Power Wi-Fi operation in the 6GHz band. This industry collaboration group’s mission was to quickly develop an AFC platform for the U.S., with an eye toward global adoption.

The TIP Open AFC enabled three entities — Broadcom, WBA and WFA — to receive Federal Communications Commission (FCC) approval, expanding the number of AFC solutions available within the ecosystem. With the retirement of the TIP Open AFC, TIP has handed off the golden version of the open-source reference Open AFC platform to the Open AFC Project.

The goal of the Open AFC Project is to manage this open-source AFC platform, ensuring continued alignment with current and future FCC regulations. Contributions from the AFC community will support ongoing improvements, and as more global regions adopt 6GHz, the AFC can expand to include their regulations and rules, ultimately supporting a unified global platform.

As additional bands are reviewed worldwide to become shared spectrum between licensed, partially licensed and unlicensed deployments, AFC technology has the potential to play a key role in coordinating services within those bands.

For more information about the Open AFC Project, contact Luther Smith, or subscribe to the CableLabs blog for updates on our work on wireless technologies and more.

DOCSIS

CableLabs Certifies First DOCSIS 3.1+ Device with Four OFDM Channels

Key Points

- The certification of the first DOCSIS® 3.1+ device enables significantly increased network capacity without the need for plant upgrades while signaling the growing momentum toward full DOCSIS 4.0 technology deployments.

- This achievement is the latest step in the evolution of CableLabs' DOCSIS technology, further underscoring the adaptability of DOCSIS technology and the importance of certification in maintaining an interoperable ecosystem.

CableLabs is pleased to announce the certification of the industry’s first DOCSIS 3.1 Plus (DOCSIS 3.1+) device supporting four orthogonal frequency-division multiplexing (OFDM) channels. The certified device, developed by Vantiva, represents a key milestone in advancing broadband performance and capacity for cable operators worldwide.

The DOCSIS 3.1 specifications require support for at least two OFDM channels but have always included the option to support more; until recently, the available technology solutions did not support that additional capability. Devices identified as DOCSIS 3.1+ are certified to be compliant with the existing DOCSIS 3.1 specification with additional OFDM channels, extending the modem’s capabilities with increased downstream throughput (up to roughly 8 to 9 Gbps).

By increasing the number of OFDM channels from two to four, the newly certified device significantly boosts potential bandwidth, improving user experience and opening up new service opportunities without expensive plant upgrades.
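As a rough back-of-the-envelope check on the 8 to 9 Gbps figure, the Python sketch below estimates downstream capacity for two versus four OFDM channels. Every parameter is an assumption chosen for illustration: full-width channels with about 3,800 active 50 kHz subcarriers, 4096-QAM, a 1.25 µs cyclic prefix, a lumped 85% efficiency for FEC and pilot overhead, and 32 legacy SC-QAM channels at about 38 Mbps each. Actual throughput depends on plant conditions and operator configuration.

```python
# Rough downstream capacity estimate for a DOCSIS 3.1 modem.
# All parameters are illustrative assumptions, not CableLabs figures.
OFDM_SUBCARRIERS = 3800    # ~190 MHz of occupied spectrum at 50 kHz spacing
BITS_PER_SYMBOL = 12       # 4096-QAM
SYMBOL_TIME_S = 20e-6      # useful OFDM symbol duration at 50 kHz spacing
CYCLIC_PREFIX_S = 1.25e-6  # one of several configurable cyclic prefix lengths
EFFICIENCY = 0.85          # lumped FEC, pilot and PLC overhead (assumed)
SCQAM_CHANNELS = 32        # legacy single-carrier QAM channels (assumed)
SCQAM_BPS = 38e6           # approximate usable rate of one 256-QAM 6 MHz channel


def ofdm_channel_bps() -> float:
    """Estimated usable bit rate of one OFDM channel."""
    raw = OFDM_SUBCARRIERS * BITS_PER_SYMBOL / (SYMBOL_TIME_S + CYCLIC_PREFIX_S)
    return raw * EFFICIENCY


def downstream_bps(ofdm_channels: int) -> float:
    """Estimated total downstream rate for a given number of OFDM channels."""
    return ofdm_channels * ofdm_channel_bps() + SCQAM_CHANNELS * SCQAM_BPS


for n in (2, 4):
    print(f"{n} OFDM channels: ~{downstream_bps(n) / 1e9:.1f} Gbps")
```

Under these assumptions the estimate comes out at roughly 4.9 Gbps with two OFDM channels and 8.5 Gbps with four, in line with the range quoted above.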

This achievement by Vantiva highlights the continued innovation in the broadband ecosystem and sets the stage for operators to begin delivering even greater speeds and capacity to their customers.

Why Certification Is Critical

This certification demonstrates both the readiness of the technology and the growing momentum toward more scalable, efficient network solutions ahead of full DOCSIS 4.0 deployments.

Although the DOCSIS 3.1 specification has been published for several years, certification remains an extremely important part of maintaining a reliable, interoperable ecosystem. Devices submitted to CableLabs often encounter issues during certification testing that require extensive troubleshooting and firmware changes before certification can be granted. Catching these issues during certification significantly reduces the amount of testing operators must conduct before deployment, which accelerates rollouts. That is why operators rely on, and choose, devices that have been properly tested and certified at CableLabs.

Continuing the March Forward

In past years, DOCSIS technology has steadily advanced, driven by a strong ecosystem of member operators and vendors collaborating with CableLabs.

Every generation of the technology, from DOCSIS 1.0 and 3.0 through 3.1 and 4.0, has enabled operators to deliver higher speeds, increased capacity, lower latency and more robust security. That momentum carries on as CableLabs continues to define the future of DOCSIS networks and how that evolution fits into our broader strategy for member operators.

Learn more about CableLabs’ certification process and how DOCSIS technology has evolved over the years. To engage with us in our ongoing DOCSIS technology work, request to join a related working group or participate in a future Interop·Labs event.

Strategy

CableLabs’ Connectivity as a Service: Simpler, Smarter and Always On

Key Points

- Connectivity as a Service (CaaS), a new service concept in the works from CableLabs, takes the guesswork — and hassle — out of network connectivity for internet service subscribers at home and on the go.

- Because they either own and operate their own mobile networks or leverage mobile virtual network operator relationships, some broadband operators are uniquely positioned to offer CaaS.

For most consumers, staying connected to the services they rely on every day likely requires subscriptions to two separate services: a broadband service at home and another for their mobile phone when they’re away from home.

What if network operators could provide a single connectivity service that ensures their subscribers’ applications and devices would work seamlessly at home, away from home, in remote areas, on a plane or traveling internationally? All their devices and applications would connect — wherever, whenever — and they wouldn’t have to worry about managing connections, passwords, authentication or security.

That’s the objective of Connectivity as a Service (CaaS).

Multiple system operators (MSOs) are uniquely positioned to offer a CaaS solution. Those that either own and operate their own mobile networks or leverage mobile virtual network operator (MVNO) relationships can make the solution available to every household or business passed by their networks. CaaS can be positioned as a truly differentiated service that other types of connectivity service providers can’t match at scale.

Connectivity That’s On — Whenever, Wherever

Put yourself in your subscribers’ shoes.

If your service provider offered a solution like CaaS through your gateway, you wouldn’t need to worry about your wired or wireless connection at home or work going down. Your provider would monitor both and ensure all your devices, security services and Internet of Things (IoT) applications remain online. When you start a video conference at home and head to work, the session would seamlessly switch from your home Wi-Fi to cellular. Then, when you arrive at the office, it would transition to your company’s Wi-Fi network, all without voice or video drops, without manual intervention and without your giving the network a single thought.

Imagine you’re at the airport and need to send a critical file to a colleague. Your CaaS would automatically tether your laptop to your smartphone and “know” not to use one of the many untrusted options that the corporate security team keeps warning about. In the air, you would enjoy the Wi-Fi that your CaaS provider offers through a partner satellite service — without the umpteen steps your mobile provider and the airlines make you jump through for a weak connection.

Then, at your foreign destination, you would still be connected, with your service leveraging the roaming agreements that your CaaS provider has with top local networks. Finally, if you head into a remote area, your smartphone would be able to stay connected via low Earth orbit (LEO) satellite connectivity, ensuring connectivity for critical messages, calls and safety purposes.
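CableLabs has not published how a CaaS client would choose among links, but the behavior described above (moving off a failing Wi-Fi network promptly, ignoring untrusted networks and not flapping between links) can be sketched as a simple selection policy. The Python below is a hypothetical illustration: the `Link` type, the 0 to 100 quality score, the preference order (trusted Wi-Fi, then cellular, then satellite) and the threshold and hysteresis values are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

# Hypothetical policy parameters (assumptions, not part of any CaaS spec).
PREFERENCE = {"wifi": 0, "cellular": 1, "satellite": 2}  # lower is preferred
MIN_QUALITY = 40  # links scoring below this (0-100) are treated as unusable
HYSTERESIS = 10   # margin required before switching away from a usable link


@dataclass(frozen=True)
class Link:
    name: str
    kind: str      # "wifi", "cellular" or "satellite"
    trusted: bool  # e.g. home, corporate or partner network
    quality: int   # 0-100, higher is better


def select_link(links: Sequence[Link], current_name: Optional[str]) -> Optional[Link]:
    """Pick the link to use next, given the name of the link currently in use."""
    usable = [link for link in links if link.trusted and link.quality >= MIN_QUALITY]
    if not usable:
        return None
    best = min(usable, key=lambda link: (PREFERENCE[link.kind], -link.quality))
    current = next((link for link in usable if link.name == current_name), None)
    if current is None:
        # Current link is gone or too weak: move immediately instead of
        # "sticking" to a failing Wi-Fi network.
        return best
    if best.name == current.name:
        return current
    if PREFERENCE[best.kind] < PREFERENCE[current.kind]:
        # Return to a preferred link type only once it is comfortably usable.
        return best if best.quality >= MIN_QUALITY + HYSTERESIS else current
    # Same link type: switch only for a clear quality gain.
    return best if best.quality >= current.quality + HYSTERESIS else current
```

The minimum-quality floor is what lets the client drop a fading home network before calls stall, while the hysteresis margin keeps it from bouncing back and forth at the edge of coverage. Untrusted networks, such as an open hotspot at the airport, are never candidates, however strong their signal.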

A Differentiated Service for Happier Users

With CaaS, the discussions over whether to use fiber, cable, fixed wireless or satellite at home or which mobile carrier works best at work, at school, on vacation or wherever become moot.

By providing such a service, operators can guarantee their customers stay connected with whatever applications they’re running, whenever, wherever they are. Add in customizable privacy features and an “away” button for when users just need to disconnect, and you have a winning connectivity solution — and more satisfied subscribers.

CableLabs is working on the concepts, user stories, requirements and architecture elements that MSOs will need to offer Connectivity as a Service. Let’s work together to deliver the only connectivity service your subscribers will ever need.

Contact us to learn more about CaaS, share your ideas for additional features and get involved.

Network as a Service

Open-Source Network APIs Advance With Latest CAMARA Meta-Release

Key Points

- A new release from the Linux Foundation’s CAMARA project includes 38 APIs, further helping bridge the gap between network operators and application developers.

- CableLabs contributions fall within our ongoing work to develop Network as a Service, a standardized solution for connecting applications to network services.

Last year, we shared how CableLabs is transforming networks through open-source API solutions as part of our work in the CAMARA project. Today, we’re excited to highlight the progress made in CAMARA’s newly published spring 2025 meta-release. The new release includes significant updates across CAMARA’s expanding suite of open network APIs — and spotlights CableLabs’ continued contributions to this global effort.

We actively contribute to the open-source project, which is hosted by the Linux Foundation, to drive the development of new APIs and help advance industry alignment. These contributions are all part of CableLabs’ work on Network as a Service (NaaS), which allows network operators to expose previously unavailable features to application developers via open-source APIs. Other contributors to CAMARA include network operators, both wired and mobile, as well as application developers and hardware vendors.

Through this open-source approach, CAMARA aims to ultimately improve the performance of applications across all types of networks — a win for the entire industry.

What's New in CAMARA's Spring 2025 Meta-Release?

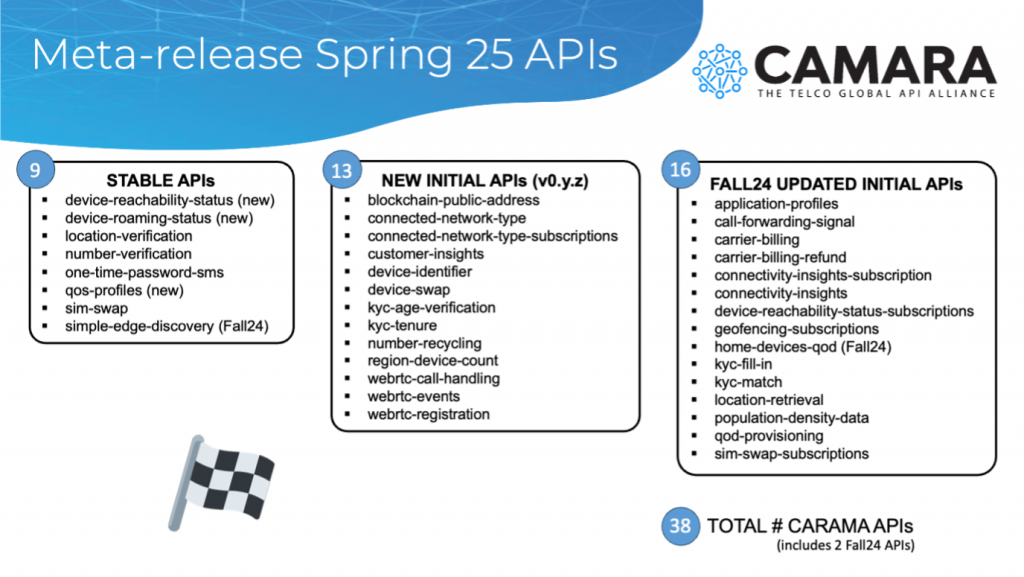

CAMARA’s spring 2025 meta-release marks a major milestone for the project, boasting 38 APIs — 9 stable APIs, plus 13 new and 16 updated APIs. The project continues to grow in momentum and industry adoption, bridging the gap between network operators and application developers through a common set of open APIs.

Here are some of the highlights from the Spring25 release that multiple system operators (MSOs) should have on their radar:

- Quality on Demand (QoD) with advanced quality of service (QoS) profile support, including Low Latency Low Loss Scalable Throughput (L4S)

- Continued enhancements to Edge Cloud, Connectivity Insights and Network Access Management APIs

- Creation of the Session Insights sandbox API repository, which will enable real-time visibility into device behavior and network sessions, from the application to the network operator. This work was previously called Quality by Design; CAMARA now calls it Session Insights.
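In CAMARA's Quality on Demand API, an application requests a QoS profile for a device's traffic by creating a session. The Python sketch below builds, but does not send, such a request using only the standard library. The body fields (`device`, `applicationServer`, `qosProfile`, `duration`) reflect our reading of the CAMARA QoD session schema and should be checked against the OpenAPI definition published with the release. The API root, access token, IP addresses and the profile name `L4S_LOW_LATENCY` are hypothetical, since each operator publishes its own endpoint and profile names.

```python
import json
import urllib.request

# Hypothetical operator API root and token. A real deployment publishes its own
# API root, and tokens come from the operator's OAuth 2.0 / OpenID Connect flow.
API_ROOT = "https://api.example-operator.net/quality-on-demand/v1"
ACCESS_TOKEN = "<token>"


def build_qod_session_request(device_public_ip: str, device_private_ip: str,
                              server_ip: str, qos_profile: str,
                              duration_s: int) -> urllib.request.Request:
    """Build (but do not send) a QoD session-creation request."""
    body = {
        "device": {
            "ipv4Address": {
                "publicAddress": device_public_ip,
                "privateAddress": device_private_ip,
            }
        },
        "applicationServer": {"ipv4Address": server_ip},
        "qosProfile": qos_profile,  # operator-defined profile name
        "duration": duration_s,     # requested session length in seconds
    }
    return urllib.request.Request(
        f"{API_ROOT}/sessions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {ACCESS_TOKEN}"},
        method="POST",
    )


# Documentation-range addresses and a hypothetical L4S-capable profile name.
req = build_qod_session_request("203.0.113.10", "192.168.1.20",
                                "198.51.100.7", "L4S_LOW_LATENCY", 600)
```

Actually sending the request with `urllib.request.urlopen(req)` would require a real operator endpoint and a valid access token.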

You can explore the full scope of the spring 2025 meta-release on the CAMARA wiki or read the Linux Foundation’s summary.

This spring release is the first of two planned for this year. After being released by CAMARA, APIs are available for anyone to download and use. The fall 2025 meta-release is currently in the planning phase.

Here’s a look at the timeline for the upcoming fall release:

- End of April: Scope finalized

- June: Code ready for testing

- July/August: Testing and defect fixing

- September: Official release targeted

Get Involved

Read more about the CAMARA open-source project on its website and join the project on GitHub. If you are a CableLabs member or part of our vendor community, you can join the Network as a Service working group. Member operators can also learn more by visiting our recently updated Member Portal, which requires a CableLabs account for log-in.

Let's help drive the industry forward together!