
How DOCSIS 3.1 Reduces Latency with Active Queue Management

At CableLabs we sometimes think big picture, like unifying the worldwide PON standards, and sometimes we focus on little things that have big impacts.

In the world of cable modems, a lot has been said about the ever-increasing demand for bandwidth and the rock-solid historical trend of broadband speeds growing by a factor of about 1.5 each year. But alongside the seemingly unassailable truth described by that trend is a nagging question: "Who needs a gigabit-per-second home broadband connection, and what would they use it for?" Are we approaching a saturation point, where more bits per second isn't what customers focus on? After all, few people pay much attention to CPU clock rates when they buy a new laptop anymore, and megapixel count isn't the selling point it once was for digital cameras.

It's clear that aggregate bandwidth demand is increasing, with more and more video content (at higher resolutions and greater color depths) being delivered on demand over home broadband connections. DOCSIS 3.1 will deliver this bandwidth in spades. But when it comes to what directly affects the user experience, what is increasingly getting attention is latency.

Packet Latency and Application Performance

Latency is a measure of the time it takes for individual data packets to traverse a network, from a tablet to a web server, for example. It is often quoted as "round-trip time" (the time for a packet to reach its destination and for a reply to come back), both because that's easier to measure and because, for a lot of applications, it's what matters most.

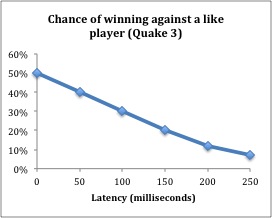

For network engineers, latency has long been considered important for specific applications. For example, it has been known since the earliest days of intercontinental telephone service that long round-trip times cause severe degradation to the conversational feeling of the connection. It’s similar for interactive games on the Internet, particularly ones that require quick reaction times, like first-person-shooters. Latency (gamers call it "lag" or "ping time") has a direct effect on a player's chance of winning.

Based on data from M. Bredel and M. Fidler, "A Measurement Study regarding Quality of Service and its Impact on Multiplayer Online Games", 2010 9th Annual Workshop on Network and Systems Support for Games (NetGames), 16-17 Nov. 2010.

But for general Internet usage (web browsing, e-commerce, social media, etc.) the view has been that these things aren't sensitive to latency, at least at the scales that modern IP networks can provide. Does a user really care that much if their Web browsing traffic is delayed by a few hundred milliseconds? That's just the blink of an eye, literally. Surely this wouldn't be noticed, let alone be considered a problem. It turns out that it is a problem, because a typical webpage can take 10 to 20 round trips to load, and the delay is paid on every one of them. So an eye-blink delay of 400 ms in the network turns into up to an excruciating 8-second wait. That is a killer for an e-commerce site, and for a user's perception of the speed of their broadband connection.
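The arithmetic behind that figure is simple: each sequential round trip pays the added delay again. A minimal sketch, assuming the round trips happen strictly one after another (real browsers overlap some of them); the function name is hypothetical:

```python
def added_wait_seconds(round_trips: int, added_delay_ms: float) -> float:
    """Extra page-load time when each of `round_trips` sequential
    request/response exchanges is delayed by `added_delay_ms`."""
    return round_trips * added_delay_ms / 1000.0


# 10 to 20 round trips, each carrying 400 ms of extra network delay
for trips in (10, 20):
    wait = added_wait_seconds(trips, 400)
    print(f"{trips} round trips x 400 ms = {wait:.1f} s")
```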

And it turns out that more bandwidth doesn't help. Upgrading to a "faster" connection (if by faster we mean more Mbps) will have almost no impact on that sluggish experience: for a typical webpage, increasing the connection speed beyond about 6 Mbps has almost no effect on page load time.
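A simple additive model shows why. Suppose loading a page costs a fixed number of round trips plus the time to push the page's bytes through the link; raising the link rate shrinks only the second term. The page size, round-trip count and RTT below are hypothetical, and the model ignores TCP slow start and parallel connections, so it illustrates the trend rather than reproducing the ~6 Mbps figure:

```python
def page_load_seconds(round_trips: int, rtt_ms: float,
                      page_bytes: int, link_mbps: float) -> float:
    """Toy page-load model: latency-bound part + bandwidth-bound part."""
    latency_term = round_trips * rtt_ms / 1000.0          # unaffected by Mbps
    transfer_term = page_bytes * 8 / (link_mbps * 1e6)    # shrinks as Mbps grows
    return latency_term + transfer_term


PAGE_BYTES = 400_000  # hypothetical page weight
ROUND_TRIPS = 15      # hypothetical number of sequential round trips
RTT_MS = 100          # hypothetical round-trip time

for mbps in (1, 3, 6, 25, 100, 1000):
    t = page_load_seconds(ROUND_TRIPS, RTT_MS, PAGE_BYTES, mbps)
    print(f"{mbps:>5} Mbps: {t:.2f} s")
```

With these numbers, going from 6 Mbps to 1,000 Mbps saves only about half a second, while the 1.5 s latency floor is untouched; halving the RTT instead would save 0.75 s at any link speed.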

If Latency is Indeed Important, What Can We Do About It?

There is a component of latency that comes from the distance (in terms of network link miles) between a client and a server. Signals on network links already travel at a large fraction of the speed of light (roughly two-thirds of it in optical fiber), and while some network researchers have bemoaned that "Everybody talks about the speed of light, but nobody ever does anything about it" (Joe Touch, USC-ISI), it seems unlikely anyone ever will: not even quantum entanglement can carry information faster than light. So we have to rely on moving the server closer to the client by way of Web caches, content-delivery networks, and globally distributed data centers. This can reduce the round-trip time by tens or hundreds of milliseconds.
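To put numbers on the distance component: light in optical fiber covers roughly 200 km per millisecond, so every 100 km of fiber path adds about 1 ms of round-trip time. A minimal sketch, assuming a velocity factor of 0.67 for fiber and ignoring that real fiber routes are longer than the straight-line distance:

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # in vacuum
FIBER_VELOCITY_FACTOR = 0.67       # assumption: typical for optical fiber


def propagation_rtt_ms(path_km: float) -> float:
    """Round-trip propagation delay over `path_km` of fiber, in ms."""
    one_way_s = path_km / (SPEED_OF_LIGHT_KM_S * FIBER_VELOCITY_FACTOR)
    return 2 * one_way_s * 1000


for km in (100, 1_000, 10_000):
    print(f"{km:>6} km of fiber: ~{propagation_rtt_ms(km):.1f} ms round trip")
```

Serving a user from a data center 100 km away instead of one 10,000 km away removes roughly 100 ms of round-trip time, in line with the "tens or hundreds of milliseconds" that caches and CDNs can save.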

Another component of latency comes from the time that packets spend sitting in intermediate devices along the path from the client to the server and back. Every intermediate device (such as a router, switch, cable modem, CMTS, mobile base station, DSLAM, etc.) serves an important function in getting the packet to its destination, and each one of them performs that function by examining the destination address on the packet, and then making a decision as to which direction to forward it. This process takes some time, at each network hop, while the packet waits for its turn to be processed. Reducing the number of network hops, and using efficient networking equipment can reduce the round-trip-time by tens of milliseconds.

But there is a hidden, transient source of significant latency that has been largely ignored: packet buffering. Network equipment suppliers have realized that they can provide better throughput and packet-loss performance if their equipment can absorb bursts of incoming packets and then play them out onto the outgoing link. This capability can significantly improve the performance of large file transfers and other bulk transport applications, which is a key figure of merit by which networking equipment is judged. In fact, the view has generally been that more buffering is better, since it further reduces the chance of having to discard an incoming packet for lack of a place to put it.

The problem with this logic is that file transfers use the Transmission Control Protocol (TCP) to control the flow of packets from the server to the client. TCP is designed to try to maximize its usage of the available network capacity. It does so by increasing its data rate up to the point that the network starts dropping packets. If network equipment implements larger and larger buffers to avoid dropping packets, and TCP by its very design won't stop increasing its data rate until it sees packet loss, the result is that the network has large buffers that are being kept full whenever a TCP flow is moving files, and packets have to sit and wait in a queue to be processed.
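A toy model makes this interaction concrete. The sketch below is a per-RTT approximation, not a real TCP implementation: a single flow in congestion avoidance adds one packet per round trip to its window (the "additive increase" of Reno-style TCP) and halves the window only when the drop-tail buffer overflows. All parameter values are hypothetical:

```python
def simulate_drop_tail(bdp_pkts: int, buffer_pkts: int, rtts: int) -> list[float]:
    """Per-RTT fluid model of one loss-based TCP flow feeding a
    drop-tail buffer. Returns the queue length (packets) in each RTT."""
    cwnd = 1.0
    queue_history = []
    for _ in range(rtts):
        # Packets beyond what the link carries in one RTT wait in the buffer.
        queue = max(0.0, cwnd - bdp_pkts)
        if queue > buffer_pkts:
            # Buffer overflows: packets are dropped and TCP halves its window.
            queue = float(buffer_pkts)
            cwnd /= 2
        else:
            # No loss seen yet: keep increasing by one packet per RTT.
            cwnd += 1
        queue_history.append(queue)
    return queue_history


BDP = 20          # hypothetical: packets the link carries per base RTT
BUFFER = 100      # hypothetical drop-tail buffer size, in packets
BASE_RTT_MS = 20  # hypothetical base round-trip time

history = simulate_drop_tail(BDP, BUFFER, rtts=1000)
steady = history[500:]
avg_queue = sum(steady) / len(steady)
print(f"smallest steady-state queue: {min(steady):.0f} packets")
print(f"average queuing delay: {avg_queue / BDP * BASE_RTT_MS:.0f} ms "
      f"on top of a {BASE_RTT_MS} ms base RTT")
```

Because the window backs off only after the buffer overflows, the queue never drains: with these parameters it oscillates between roughly 40 and 100 packets, so every packet, including those of unrelated interactive flows, waits behind a standing queue. Making `BUFFER` larger raises that delay without adding throughput, since in this model the link is already busy every round trip.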

Buffering in the network devices at the head of the bottleneck link between the server and the client (in many cases, the broadband access network link) has the most impact on latency. In fact, some of this equipment supports huge buffers, with buffering delays of hundreds or thousands of milliseconds reported in many cases, dwarfing the latency caused by other sources. But it is a transient problem: it only shows up when TCP bulk traffic is present. If you test the round-trip time of the network connection in the absence of such traffic, you would never see it. For example, if someone in the home is playing an online game or making a Skype call, the game or call will experience a glitch whenever another user in the home sends an email with an attachment.
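How large can this buffering delay get? A full buffer takes (buffer size) / (link rate) to drain, and a packet that arrives behind it waits that long. A minimal sketch with a hypothetical 256 kB buffer on links of different speeds:

```python
def full_buffer_delay_ms(buffer_bytes: int, link_mbps: float) -> float:
    """Time for a full buffer to drain at the link rate, in milliseconds.
    A newly arriving packet waits this long behind the queue."""
    bits = buffer_bytes * 8
    return bits / (link_mbps * 1e6) * 1000


# Hypothetical: the same 256 kB buffer in front of links of different speeds
for mbps in (2, 10, 50):
    delay = full_buffer_delay_ms(256_000, mbps)
    print(f"256 kB buffer at {mbps:>2} Mbps -> {delay:,.0f} ms")
```

The same buffer that adds only tens of milliseconds on a fast link adds about a full second on a 2 Mbps link, which is consistent with the hundreds or thousands of milliseconds of buffering delay reported in practice.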

Is There a Solution to This Problem?

Can we have good throughput, low packet loss and low latency? At CableLabs, we have been researching a technology called Active Queue Management where the cable modem and CMTS will keep a close eye on how full their buffers are getting, and as soon as they detect that TCP is keeping the buffer full, they will drop just enough packets to send TCP the signal that it needs to slow down, so that more appropriate buffer levels can be maintained.

This technology shows such promise for radically improving the broadband user experience that we've mandated it be included in DOCSIS 3.1 equipment (both cable modem and CMTS). We've also amended the DOCSIS 3.0 specification to strongly recommend that vendors add it to existing equipment (via firmware upgrade) where possible.

On the cable modem, we took it a step further and require that devices implement an Active Queue Management algorithm that we've specially designed to optimize performance over DOCSIS links. This algorithm is based on an approach developed by Cisco Systems called "Proportional Integral controller Enhanced" (more commonly known by its less tongue-tying acronym, PIE), and incorporates original ideas from CableLabs and several of our technology partners to improve its performance in cable broadband networks.
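The heart of PIE is a small control loop: at a fixed interval it looks at the current queuing delay and nudges a packet-drop probability up when delay is above a target or rising, and down when it is below target or falling; each arriving packet is then dropped with that probability. The sketch below shows only that core update, in the form used by the generic PIE algorithm (later published as RFC 8033). It is not the DOCSIS-optimized variant described above, it omits PIE's automatic gain scaling, burst allowance and delay estimation, and the class name and parameter values are assumptions for illustration:

```python
import random
from dataclasses import dataclass


@dataclass
class PieSketch:
    """Simplified PIE-style drop-probability controller (illustration only).
    The caller supplies the measured queuing delay once per interval."""
    target_s: float = 0.015  # assumed latency target: 15 ms
    alpha: float = 0.125     # assumed gain on (delay - target): integral action
    beta: float = 1.25       # assumed gain on change in delay: proportional action
    drop_prob: float = 0.0
    qdelay_old_s: float = 0.0

    def update(self, qdelay_s: float) -> float:
        """Run once per update interval with the current queuing delay."""
        self.drop_prob += (self.alpha * (qdelay_s - self.target_s)
                           + self.beta * (qdelay_s - self.qdelay_old_s))
        # Keep the result a valid probability.
        self.drop_prob = min(1.0, max(0.0, self.drop_prob))
        self.qdelay_old_s = qdelay_s
        return self.drop_prob

    def should_drop(self, rng: random.Random) -> bool:
        """Per-packet decision made when a packet is enqueued."""
        return rng.random() < self.drop_prob


pie = PieSketch()
# A persistent 100 ms queue (TCP keeping the buffer full): p keeps rising.
for interval in range(5):
    print(f"interval {interval}: drop probability {pie.update(0.100):.3f}")
# Delay falls back to the target: the downward trend pulls p back down.
print(f"after delay returns to target: {pie.update(0.015):.3f}")
```

Because the integral term keeps adjusting the drop probability until delay settles near the target, rather than until the queue is empty, the link can stay busy while latency stays bounded, which is the "good throughput and low latency" trade-off described above.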

By implementing Active Queue Management, cable networks will be able to reduce these transient buffering latencies by hundreds or thousands of milliseconds, which translates into massive reductions in page load times, and significant reductions in delays and glitches in interactive applications like video conferencing and online gaming, all while maintaining great throughput and low packet loss.

Active Queue Management: A little thing that can make a huge impact.

Greg White is a Principal Architect at CableLabs who researches ways to make the Internet faster for the end user. He hates waiting 8 seconds for pages to load.

For more information on Active Queue Management in DOCSIS, download the white paper.