Virtualization

Give your Edge an Adrenaline Boost: Using Kubernetes to Orchestrate FPGAs and GPUs

Over the past year, we’ve been experimenting with field-programmable gate arrays (FPGAs) and graphics processing units (GPUs) to improve edge compute performance and reduce the overall cost of edge deployments.

Unless you’ve been living under a rock for the past two years, you’ve heard all the excitement about edge computing. For the uninitiated, edge computing lets applications that previously required special hardware on customer premises run instead on systems located near customers. These workloads require either very low latency or very high bandwidth, so they don’t perform well in the cloud. For many of these low-latency applications, microseconds matter. At CableLabs, we’ve been defining a reference architecture and adapting Kubernetes to better meet the low-latency needs of edge computing workloads.

CableLabs engineer Omkar Dharmadhikari wrote a blog post in May 2019 called Moving Beyond Cloud Computing to Edge Computing, outlining many of the opportunities for edge computing. If you aren’t familiar with the benefits of edge computing, I’d suggest reading that post before you read further.

New Features

As part of our efforts around Project Adrenaline, we’ve shared tools to ease the management of hardware accelerators in Kubernetes. These tools are available in the SNAPS-Kubernetes GitHub repository.

- Field-programmable gate array (FPGA) accelerator integration

- Graphics processing unit (GPU) accelerator integration
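As a rough illustration of what accelerator integration looks like from an application's point of view, the sketch below builds a Kubernetes Pod manifest that requests whole GPUs as an extended resource. It assumes the NVIDIA device plugin, which advertises the `nvidia.com/gpu` resource, is running on the GPU nodes. It is not taken from the SNAPS-Kubernetes code, and FPGA device plugins advertise their own vendor-specific resource names. The pod and image names are placeholders.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a minimal Pod manifest that asks the scheduler for whole GPUs.

    Assumes a device plugin advertising the extended resource
    "nvidia.com/gpu" (the NVIDIA Kubernetes device plugin) runs on GPU nodes.
    """
    if gpus < 1:
        raise ValueError("request at least one GPU")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Extended resources are requested under limits; the pod is
                    # only scheduled onto a node with enough unallocated GPUs.
                    "resources": {"limits": {"nvidia.com/gpu": str(gpus)}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # "example/edge-inference:latest" is a placeholder image name.
    manifest = gpu_pod_manifest("edge-inference", "example/edge-inference:latest")
    print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and submitted with `kubectl apply -f <file>`. Requesting the accelerator as a schedulable resource, rather than pinning the pod to a hostname, lets Kubernetes place the workload on any node that has a free device.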

Hardware Acceleration

FPGAs and GPUs can be used as hardware accelerators. When deciding whether to move a workload to an accelerator, we consider three factors:

- Time requirements

- Power requirements

- Space requirements

Time, space and power are all critical for edge deployments. Space and power are limited at each location. Each operation must complete within its required time limit, and certain operations can run much faster on an accelerator than on a CPU.

Writing applications for accelerators can be more difficult because there are fewer language options than general-purpose CPUs have. Frameworks such as OpenCL attempt to bridge this gap and allow a single program to work on CPUs, GPUs and FPGAs. Unfortunately, this interoperability comes with a performance cost that makes these frameworks a poor choice for certain edge workloads. The good news is that several major accelerator hardware manufacturers are targeting the edge, releasing frameworks and pre-built libraries that will bridge this performance gap over time.

Although we don’t have any hard-and-fast rules today for which workloads should be accelerated and on which platform, we have some general guidelines. Integer (whole number) operations are typically faster on a general-purpose CPU. Floating-point (decimal number) operations are typically faster on GPUs. Bitwise operations, which manipulate individual ones and zeros, are typically faster on FPGAs.
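These guidelines can be captured in a simple lookup table. This is a minimal sketch. The names `Platform` and `preferred_platform` are hypothetical, and the mapping encodes only the rules of thumb above. Actual placement should be confirmed by benchmarking the specific workload on the specific hardware.

```python
from enum import Enum


class Platform(Enum):
    CPU = "cpu"
    GPU = "gpu"
    FPGA = "fpga"


# Rules of thumb only (hypothetical helper, not a SNAPS API): benchmark
# the real workload before committing to a placement.
_PREFERRED = {
    "integer": Platform.CPU,   # whole-number arithmetic
    "float": Platform.GPU,     # floating-point (decimal) arithmetic
    "bitwise": Platform.FPGA,  # manipulating individual ones and zeros
}


def preferred_platform(op_kind: str) -> Platform:
    """Return the platform that is *typically* fastest for an operation kind."""
    try:
        return _PREFERRED[op_kind]
    except KeyError:
        raise ValueError(f"unknown operation kind: {op_kind!r}") from None


if __name__ == "__main__":
    for kind in ("integer", "float", "bitwise"):
        print(kind, "->", preferred_platform(kind).value)
```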

Another thing to keep in mind when deciding where to deploy a workload is the cost of transitioning that workload from one compute platform to another. There’s a penalty for every memory copy, even within the same server. This means that running consecutive tasks within a pipeline on one platform can be faster than running each task on the platform that is best for that task.
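The trade-off between per-stage speed and copy penalties can be made concrete with a small dynamic program. This is a sketch under simplifying assumptions: each stage has a known (hypothetical) run time on each platform, and every switch between platforms costs one flat transfer penalty. Under those assumptions, it finds the cheapest placement for the whole pipeline rather than the cheapest platform for each stage.

```python
from typing import Dict, List, Tuple


def place_pipeline(
    stage_costs: List[Dict[str, float]],
    transfer_cost: float,
) -> Tuple[float, List[str]]:
    """Choose a platform for each pipeline stage to minimize total time.

    stage_costs[i][p] is the (hypothetical, measured) time of stage i on
    platform p. Each time consecutive stages run on different platforms,
    a flat transfer_cost is paid for the memory copy between them.
    """
    if not stage_costs:
        return 0.0, []
    # best[p] = (total time, plan) for the stages so far, ending on platform p
    best = {p: (c, [p]) for p, c in stage_costs[0].items()}
    for costs in stage_costs[1:]:
        nxt = {}
        for p, c in costs.items():
            # Stay on the same platform for free, or switch and pay the copy.
            options = [
                (t + (0.0 if q == p else transfer_cost) + c, plan + [p])
                for q, (t, plan) in best.items()
            ]
            nxt[p] = min(options, key=lambda o: o[0])
        best = nxt
    return min(best.values(), key=lambda o: o[0])


if __name__ == "__main__":
    # Illustrative numbers only: the GPU is best for stages 1 and 3,
    # and the CPU is best for stage 2.
    stages = [{"cpu": 4, "gpu": 2}, {"cpu": 3, "gpu": 4}, {"cpu": 5, "gpu": 2}]
    print(place_pipeline(stages, transfer_cost=3))  # → (8.0, ['gpu', 'gpu', 'gpu'])
    print(place_pipeline(stages, transfer_cost=0))  # → (7.0, ['gpu', 'cpu', 'gpu'])
```

With a copy penalty of 3, keeping all three stages on the GPU (8 time units) beats the per-stage optimum of GPU, CPU, GPU (7 units of compute plus 6 units of copies). With no penalty, the per-stage optimum wins.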

Accelerator Installation Challenges

When you use accelerators such as FPGAs and GPUs, managing the low-level software (drivers) to run them can be a challenge. Additional hooks to install these drivers during the OS deployment have been added to SNAPS-Boot, including examples for installing drivers for some accelerators. We encourage you to share your experiences and help us add support for a broader set of accelerators.

Co-Innovation

These features were developed in a co-innovation partnership with Altran. We jointly developed the software and collaborated on the proofs of concept. You can discover more about our co-innovation program on our website, which includes information about how to contact CableLabs with a co-innovation opportunity.

Extending Project Adrenaline

Project Adrenaline only scratches the surface of what’s possible with accelerated edge computing. The uses for edge compute are vast and rapidly evolving. As you plan your edge strategy, be sure to include the capability to manage programmable accelerators and reduce your dependence on single-purpose ASICs. Deploying redundant and flexible platforms is a great way to reduce the time and expense of managing components at thousands or even millions of edge locations.

As part of Project Adrenaline, SNAPS-Kubernetes ties together all these components to make it easy to try in your lab. With the continuing certification of SNAPS-Kubernetes, we’re staying current with releases of Kubernetes as they stabilize. SNAPS-Boot has additional features to easily prepare your servers for Kubernetes. As always, you can find the latest information about SNAPS on the CableLabs SNAPS page.

Contact Randy to get your adrenaline fix at Mobile World Congress in Barcelona, February 24-27, 2020.

Crack the NFV Code for Free with our New NFV 101 Training Course

Network Functions Virtualization (NFV), Software-Defined Networking (SDN) and virtualization are transitioning from “buzzwords” to reality. As this transition takes place, our members and other players in the ecosystem are asking:

- What is NFV at its core?

- How will it impact my operations?

- What does it mean for my long-term strategy?

In an attempt to answer those questions, CableLabs has developed an NFV 101 training course. This seven-part training series covers:

- NFV basics

- Key NFV requirements

- Use cases

- The current industry landscape

- The role of open source and standards

- The future trends we expect from this technology

NFV will play a key role in shaping how we operate as an industry. With that in mind, this course was designed for anybody who wants to learn more about the technology. Let’s explore in more detail what to expect from each part of the series:

Part 1: NFV Basics

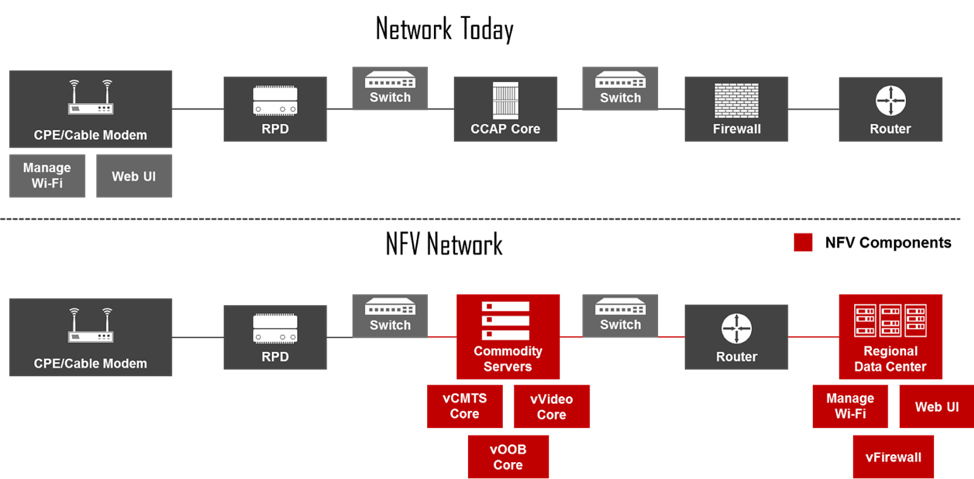

This section will lay the foundational building blocks by exploring what NFV is at its core. You will also find examples of how the technology will impact the network and where SDN plays a role with NFV.

Part 2: Key NFV Requirements

For NFV implementations to be successful, key requirements must be met. This section explores what those requirements are and how to manage those requirements as you encounter them. These key requirements are:

- Cloud-based network topology implementation

- Environmental considerations (e.g., space, power)

- Performance (e.g., latency, throughput)

- Security

- License management

- Availability (e.g., reliability, resilience, fault management)

Part 3: NFV Use Cases

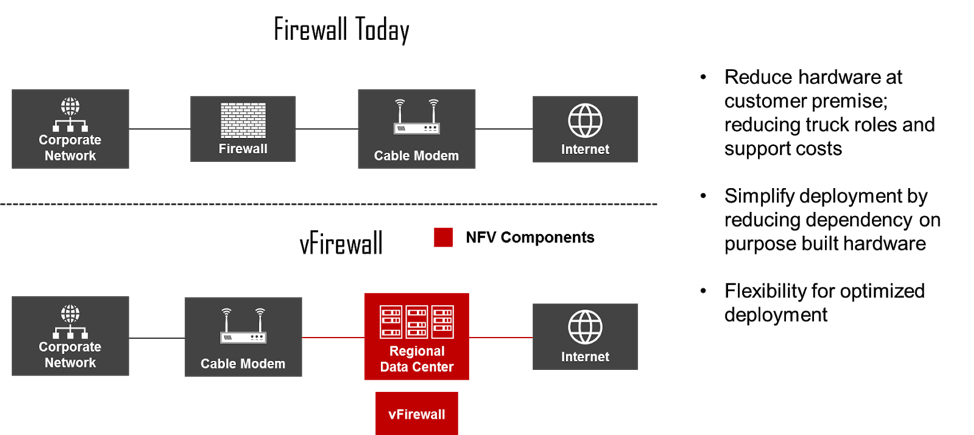

NFV presents some compelling use cases. In this section, we’ll explore some of the most prominent ones we’re seeing in the industry, including vFirewall, vCCAP Core with Remote PHY Device (RPD), and SD-WAN. This section also discusses how each use case will impact your operations and customer base.

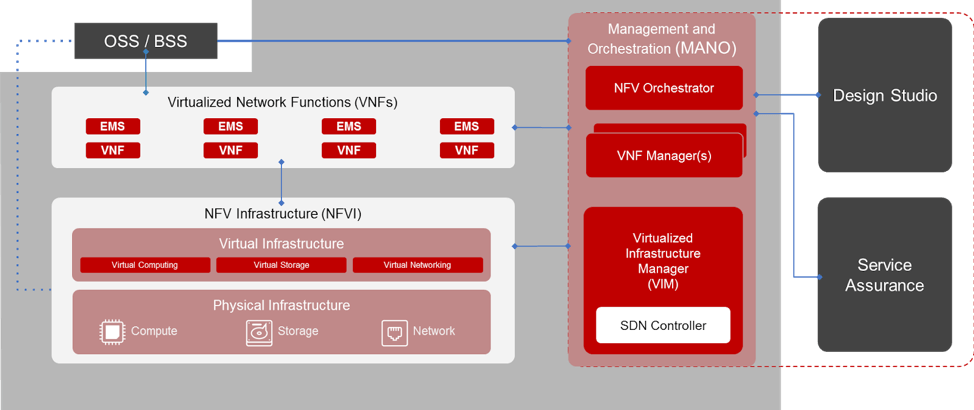

Part 4: Industry Landscape

In this section of the training, we will dive more deeply into the NFV architecture and what role each of the major components plays. This section will also highlight what roles network services and Virtual Network Functions (VNFs) play within NFV, along with how ETSI has impacted the development and maturity of this technology.

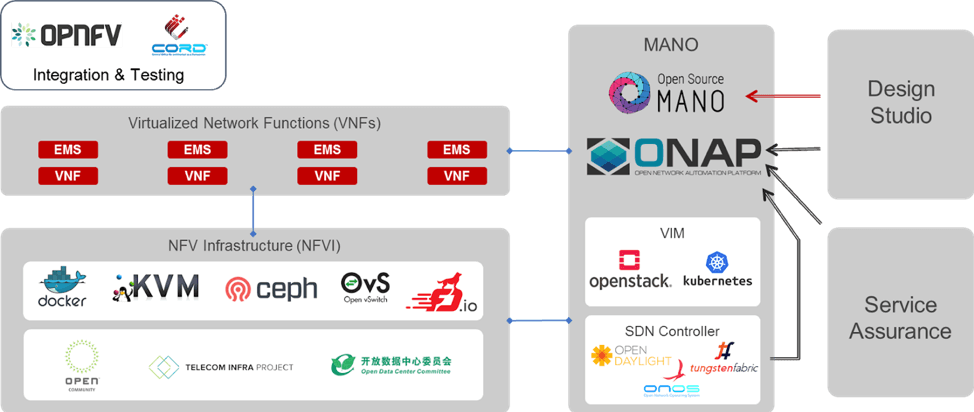

Part 5: Relevant Open-Source Projects

As NFV technology has matured, so have open-source initiatives to improve the technology and interoperability. This section will review the major open-source initiatives and the role they play within the architecture.

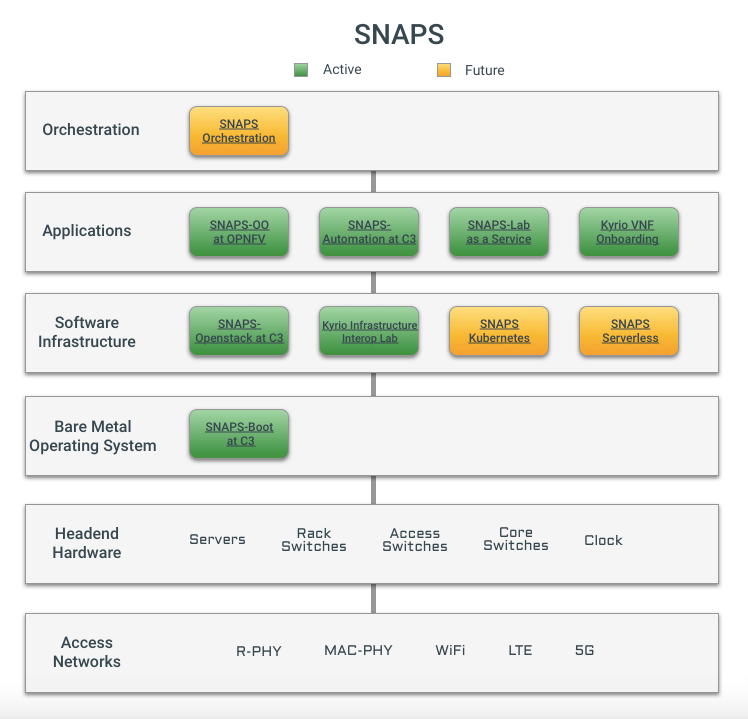

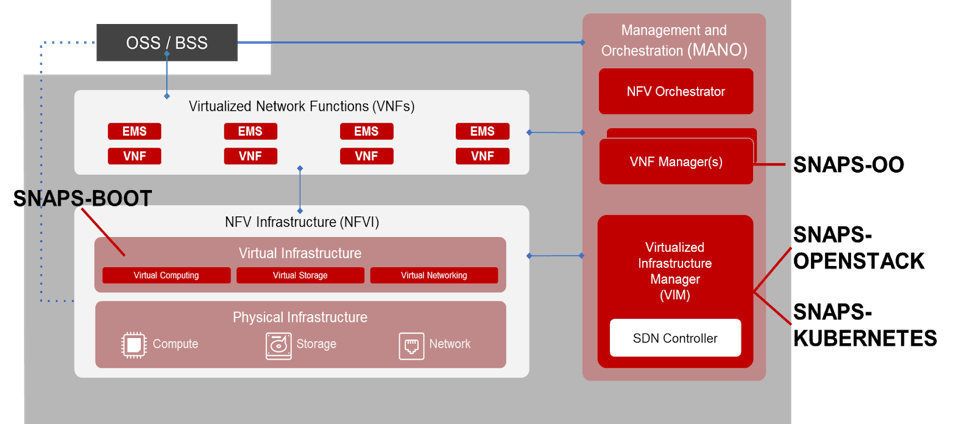

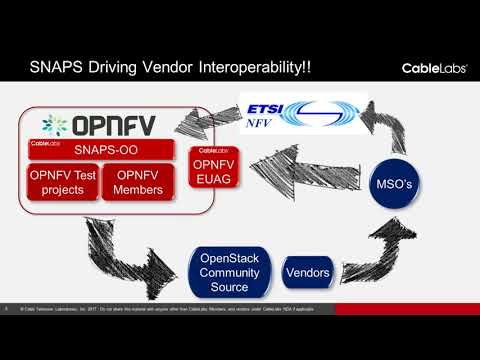

Part 6: CableLabs and NFV

As open source and standards mature and shape the future of NFV, CableLabs is playing a key role in helping shape those groups for the entire cable ecosystem. CableLabs has developed a program called SDN & NFV Application Platform and Stack (SNAPS™). SNAPS is the overarching program that provides the foundation for virtualization projects and deployment leveraging SDN and NFV. CableLabs spearheaded the SNAPS project to fill in gaps in the open-source community and to ease the adoption of SDN/NFV for our cable members.

Part 7: Future Trends

Leveraging NFV, cable is poised for the future with the best access network. In this section, we will review where NFV and SDN can take cable networks, along with what to expect from NFV as the technology matures and grows with production implementations.

If you have any questions about the content, contact me at p.fonte@cablelabs.com. If you would like to request a live training session of the course, contact Amar Kapadia at akapadia@aarnanetworks.com.

CableLabs Announces SNAPS-Kubernetes

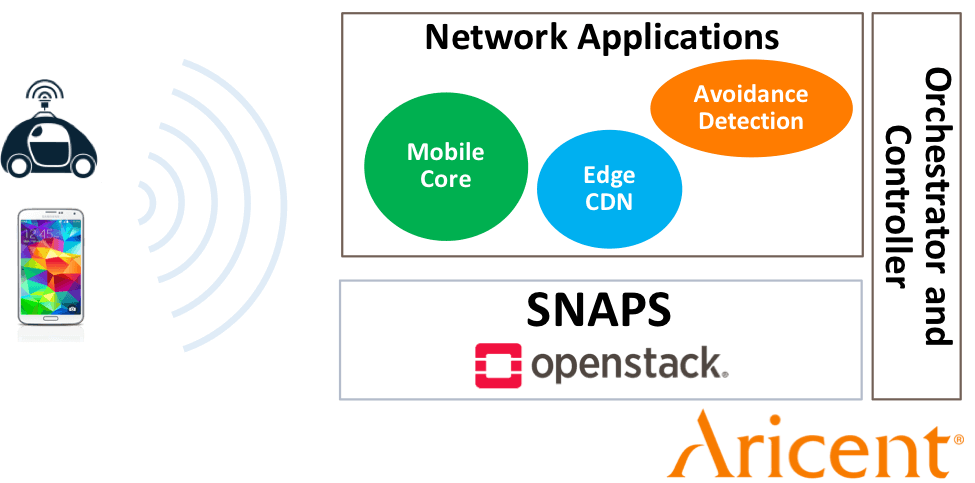

Today, I’m pleased to announce the availability of SNAPS-Kubernetes. The latest in CableLabs’ portfolio of open source projects to accelerate the adoption of Network Functions Virtualization (NFV), SNAPS-Kubernetes provides easy-to-install infrastructure software for lab and development projects. SNAPS-Kubernetes was developed with Aricent, and you can read more about this release on Aricent’s blog.

In my blog post six months ago, I announced the release of SNAPS-OpenStack and SNAPS-Boot and highlighted Kubernetes as a future development area. As with the SNAPS-OpenStack release, we’re making this installer available while it’s still early in the development cycle. We welcome contributions and feedback from anyone to help make this an easy-to-use installer for a purely open source, freely available environment. We’re also releasing support for OpenStack Queens, the latest OpenStack release.

Member Impact

The use of cloud-native technologies, including Kubernetes, should provide for even lower overhead and an even better-performing network virtualization layer than existing virtual machine (VM)-based solutions. It should also improve total cost of ownership (TCO) and quality of experience for end users. A few operators have started to evaluate Kubernetes, and we hope with SNAPS-Kubernetes that even more members will be able to begin this journey.

Our initial TCO analysis of a virtual Converged Cable Access Platform (CCAP) core with distributed access architecture (DAA) and Remote PHY technology has shown the following improvements:

- Approximately 89% savings in OpEx (power and cooling)

- 16% decrease in rack space footprint

- 1015% increase in throughput

We anticipate that Kubernetes will improve these results further.

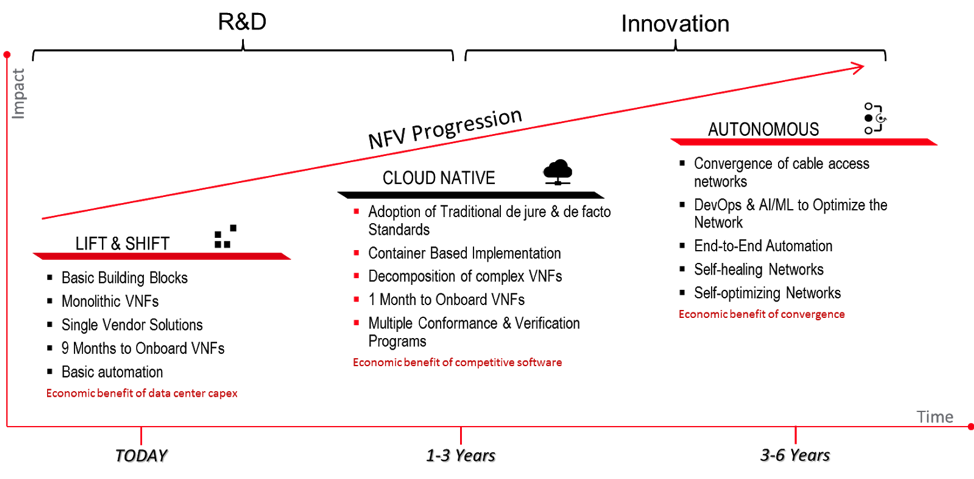

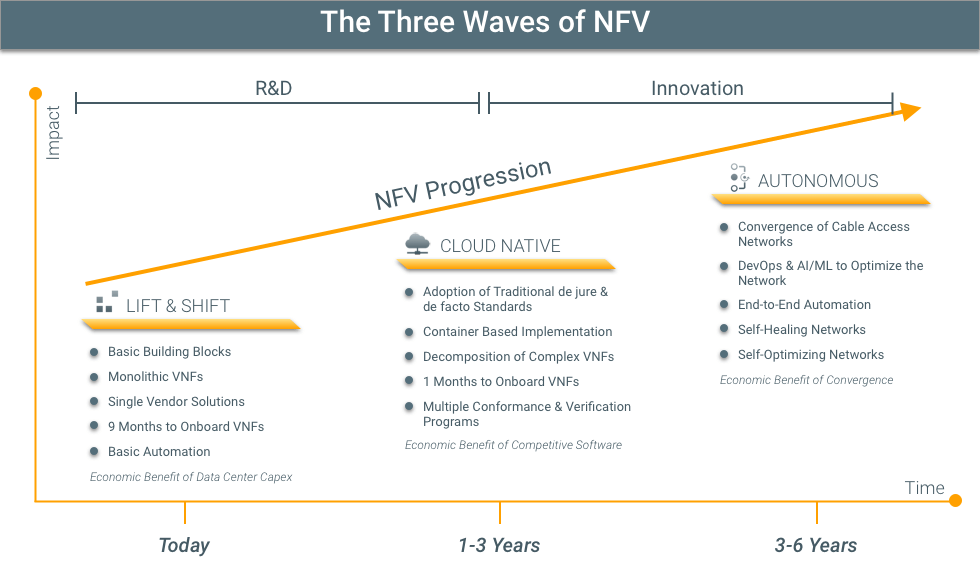

Three Waves of NFV

SNAPS-Kubernetes will help deliver Virtual Network Functions (VNFs) that use fewer resources, are more fault-tolerant and quickly scale to meet demand. This is part of a movement known as “cloud native.” It is the second of the three waves of NFV maturity that we are observing.

With the adoption of NFV, we have identified three overarching trends:

- Lift & Shift

- Cloud Native

- Autonomous Networks

Lift & Shift

Service providers and vendors typically support the Lift & Shift model today. These are large VMs running on an OpenStack-type Virtualized Infrastructure Manager (VIM). This is a mature technology, and many of the gaps in this area have closed.

In this space, VNF vendors often boast that their VNF runs the same software version as their physical appliances. Although achieving feature parity with an existing product line is admirable, these solutions don’t take advantage of the flexibility and versatility that fully leveraging virtualization can provide.

There can be a high degree of separation between the VM and the underlying hardware and operating system. This separation is great for portability, but it comes at a cost. Without some level of hardware awareness, it isn’t possible to take full advantage of acceleration capabilities, and the extra layer of indirection can add latency.

Cloud Native

Containers and Kubernetes excel in this quickly evolving segment of the market. These solutions aren’t yet as mature as OpenStack and other virtualization solutions, but they are lighter weight and integrate software and infrastructure management. This means that Kubernetes scales applications and fails them over, and it also manages software updates.
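To make “scale, fail over and manage updates” concrete, here is a minimal sketch of an `apps/v1` Deployment (an API available in Kubernetes 1.10) built as a Python dictionary. The VNF and image names are placeholders, and this is a generic Kubernetes object, not anything specific to SNAPS-Kubernetes. The Deployment controller keeps `replicas` pods running, replaces pods that fail, and rolls out new images gradually.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3) -> dict:
    """Minimal apps/v1 Deployment (names are placeholders).

    Kubernetes keeps `replicas` pods running, recreates failed ones, and
    performs a rolling update when the container image changes.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": labels},
            # Add one new pod at a time and never drop below the desired count.
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxUnavailable": 0, "maxSurge": 1},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment_manifest("example-vnf", "example/vnf:1.0"), indent=2))
```

After applying the manifest with `kubectl apply -f <file>`, `kubectl scale deployment/example-vnf --replicas=5` scales the application, and `kubectl set image deployment/example-vnf example-vnf=example/vnf:1.1` triggers a managed rolling update.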

Cloud native is well suited for edge and customer-premises solutions where compute resources are limited by space and power.

Autonomous Networks

Autonomous networks are the desired future state, in which every element of the network is automated and high-resolution data is continually evaluated to optimize the network for current and projected conditions. The projected 3–6-year timeline for this technology is probably a bit optimistic, but we need to start implementing monitoring and automation tools in preparation for this shift.

Features

This release is based on Kubernetes 1.10. We will update Kubernetes as new releases stabilize and we have time to validate these releases. As with SNAPS-OpenStack, we believe it’s important to adopt the latest stable releases for lab and evaluation work. Doing so will prepare you for future features that help you get the most out of your infrastructure.

This initial release supports Docker containers. Docker is one of the most popular types of containers, and we want to take advantage of the rich ecosystem of build and management tools. If we later find other container technologies that are better suited to specific cable use cases, this support may change in future releases.

Because Kubernetes and containers are so lightweight, you can run SNAPS-Kubernetes on an existing virtual platform. Our Continuous Integration (CI) scripts use SNAPS-OO to completely automate the installation on almost any OpenStack platform. This should work with most OpenStack versions from Liberty to Queens.

SNAPS-Kubernetes supports the following six networking solutions:

- Weave

- Flannel

- Calico

- Macvlan

- Single Root I/O Virtualization (SRIOV)

- Dynamic Host Configuration Protocol (DHCP)

Weave, Calico and Flannel provide cluster-wide networking and can be used as the default networking solution for the cluster. Macvlan and SRIOV, however, are specific to individual nodes and are installed only on specified nodes.

SNAPS-Kubernetes uses Container Network Interface (CNI) plug-ins to orchestrate these networking solutions.
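For illustration, the sketch below produces a CNI network configuration for the reference `macvlan` plugin, with addresses leased through the reference `dhcp` IPAM plugin. It also shows why Macvlan is node-specific: the `master` field names a physical interface on the host. The network name `edge-data` and interface `eth1` are hypothetical, and this is not the exact configuration SNAPS-Kubernetes generates. The `dhcp` IPAM plugin also needs its companion daemon running on each node.

```python
import json


def macvlan_dhcp_conf(name: str, master_iface: str) -> dict:
    """CNI network configuration for the reference macvlan plugin.

    Addresses come from the reference "dhcp" IPAM plugin, which requires
    its DHCP daemon to be running on the node. Names are hypothetical.
    """
    return {
        "cniVersion": "0.3.1",
        "name": name,
        "type": "macvlan",         # node-local: attaches to a host NIC
        "master": master_iface,    # host interface the macvlan devices sit on
        "mode": "bridge",
        "ipam": {"type": "dhcp"},  # lease addresses from an external DHCP server
    }


if __name__ == "__main__":
    # A file like this is typically placed in /etc/cni/net.d/ on each node.
    print(json.dumps(macvlan_dhcp_conf("edge-data", "eth1"), indent=2))
```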

Next Steps

As we highlighted before, serverless infrastructure and orchestration continue to be future areas of interest and research. In addition to extending the scope of our infrastructure, we are focusing on using and refining the tools.

Multiple CMTS vendors have announced and demonstrated virtual CCAP cores, so this will be an important workload for our members.

Try It Today

Like other SNAPS releases, SNAPS-Kubernetes is available on GitHub under the Apache License, Version 2.0. SNAPS-Kubernetes follows the same installation process as SNAPS-OpenStack: the servers are prepared with SNAPS-Boot, and then SNAPS-Kubernetes is installed.

Have Questions? We’d Love to Hear from You

- Reach out on IRC: Server: Freenode Channel #cablelabs-snaps

- Contribute to the documentation, backlog and code on GitHub

- Send an email message directly to snaps@cablelabs.com

- Tweet to @RandyLevensalor

Subscribe to our blog to learn more about SNAPS in the future.

Deployment of OpenStack using CableLabs’ SNAPS on Aparna µCloud 4015

OpenStack software controls large pools of compute, storage and networking resources throughout a datacenter, managed through a dashboard or via the OpenStack API. OpenStack works with popular enterprise and open source technologies, making it ideal for heterogeneous infrastructure, and it is a popular platform for service providers to run their NFV software. Given the large number of services OpenStack provides, deployment can be complex, even with the excellent documentation provided by the OpenStack community.

SNAPS from CableLabs

To reduce the complexity of deploying OpenStack, CableLabs introduced a set of scripts and a process called SNAPS and released these resources as open source. SNAPS allows any organization to deploy a release of OpenStack on a set of compute, storage and network devices with two scripts/processes, as long as these devices meet OpenStack’s minimal requirements.

Deploying OpenStack with SNAPS involves downloading the latest source and scripts from CableLabs’ GitHub repository and modifying a well-documented YAML file that provides details about the controller and compute nodes along with networking information. The process is described in two documents:

- The SNAPS Boot—This document specifies the steps and configuration required for OS provisioning of bare metal machines via SNAPS boot.

- The OpenStack Distribution Deployment—This document specifies the steps and configuration required for OpenStack installation on servers configured by SNAPS Boot.

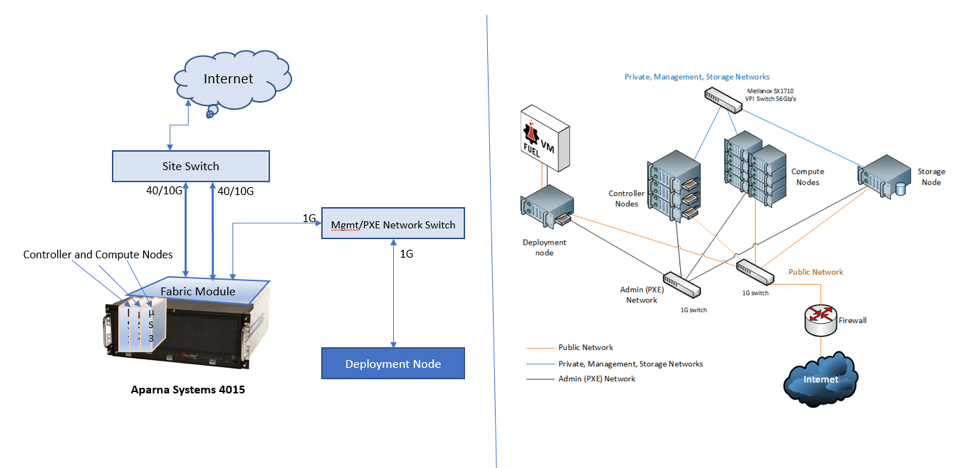

Aparna Systems’ µCloud 4015

Ideal for SNAPS OpenStack deployment, Aparna Systems’ µCloud 4015 (Micro Cloud)[1] is an ultra-converged, open, compact and high-density hardware platform consisting of compute, storage and network devices ideally suited for deployment at the edge of the network. A µCloud 4015 system:

- Can host up to 15 Intel Xeon (8, 12 or 16 core) µServers, each with attached storage for two SATA/NVMe SSDs

- Includes two fabric modules that provide connectivity between µServers as well as to the outside world

To demonstrate the advantages that an integrated, converged system such as the µCloud 4015 can provide, Figure 1 contrasts an Aparna Systems deployment configuration with one built from discrete servers.

Figure 1: Comparison of Ultra-Converged µCloud 4015 System vs. Discrete Component System

In a system with discrete servers, switches and storage modules, setting up interconnections and managing a fresh installation (as well as any additions to the system) can take significant time. By contrast, the only connections the µCloud 4015 requires are those needed for the network: two for the OpenStack data and management networks and two for the fabric management port and preboot execution environment (PXE) network.

Hardware Requirements of OpenStack Installation Using SNAPS

Any OpenStack installation requires servers running as controller, compute and storage nodes (called host nodes), switches to connect these nodes as per the network requirements and a configuration/deployment node to manage the installation. All of these have certain minimum requirements (shown below).

Host Nodes

SNAPS OpenStack requires a minimum of three nodes for a basic configuration: one controller node and two compute nodes. Each node needs:

- At least 16 GB of memory

- An 80 GB hard disk (or SSD)

- Two mandatory network interfaces and one optional network interface

These nodes must be network-boot enabled and Intelligent Platform Management Interface (IPMI) capable. Our test configuration includes three PXE-boot-enabled µServers, each with an Intel Xeon D-1541 processor, 64 GB of memory and standard IPMI interfaces.
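Before running the installer, it can help to sanity-check a host inventory against these minimums. The sketch below is hypothetical. `HostNode` and `check_minimums` are illustrative names, and the fields do not reflect the SNAPS `deployment.yaml` schema. It only encodes the requirements listed above: one controller, two compute nodes, 16 GB of memory, an 80 GB disk, two mandatory interfaces, and network boot plus IPMI.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HostNode:
    # Hypothetical inventory record; field names are illustrative only.
    name: str
    role: str          # "controller" or "compute"
    memory_gb: int
    disk_gb: int
    interfaces: int
    pxe_boot: bool = True
    ipmi: bool = True


def check_minimums(nodes: List[HostNode]) -> List[str]:
    """Return a list of problems against the SNAPS OpenStack minimums."""
    problems = []
    if sum(n.role == "controller" for n in nodes) < 1:
        problems.append("need at least one controller node")
    if sum(n.role == "compute" for n in nodes) < 2:
        problems.append("need at least two compute nodes")
    for n in nodes:
        if n.memory_gb < 16:
            problems.append(f"{n.name}: {n.memory_gb} GB memory is below 16 GB")
        if n.disk_gb < 80:
            problems.append(f"{n.name}: {n.disk_gb} GB disk is below 80 GB")
        if n.interfaces < 2:
            problems.append(f"{n.name}: needs two mandatory network interfaces")
        if not (n.pxe_boot and n.ipmi):
            problems.append(f"{n.name}: must be network-boot enabled and IPMI capable")
    return problems
```

An empty list means the inventory meets the minimums. Each problem is reported separately so several issues can be fixed in one pass.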

Networking

SNAPS OpenStack deployment requires three network interfaces: management, tenant and data. The tenant interface is an internal interface between the deployed nodes and doesn’t require a connection from the fabric module to the outside world. However, the other two interfaces, management and data, must be connected to the outside world.

Two 40G/10G ports of the fabric module are connected (either in breakout mode or as a straight connection) to an external switch, which in turn connects the data and management interfaces to the outside world, as Figure 1 shows. The single-root I/O virtualization (SR-IOV) feature of the Intel Xeon D processors is used to create virtual interfaces on the single 10G interface that connects each µServer to the fabric module. PXE networking is enabled through the fabric management (1G) network.

Configuration/Deployment/Build Node

According to the SNAPS guide, the build node must be a server with a 64-bit Intel/AMD architecture, 16 GB of RAM and one network interface. This machine must be able to reach the host nodes via IPMI. Our setup uses an external machine that meets or exceeds these criteria.

A µCloud 4015 can also be used as a fully configured, prebuilt OpenStack system by using one of its µServers as the build/configuration node. This mode has also been verified with the SNAPS OpenStack installation scripts.

SNAPS OpenStack Deployment—Adaptation Summary

Installing OpenStack with the SNAPS scripts required some changes to the deployment process. However, one of the main criteria in using SNAPS scripts to deploy OpenStack is to avoid any system changes to the configuration/deployment node, as the same server could be used for different purposes in a lab environment. Aparna Systems met this criterion by implementing all the changes and adding services on the fabric module of the µCloud 4015 system. These changes, including enabling BMC network access from an external configuration server and PXE boot changes, are available on the CableLabs GitHub.

In addition, SR-IOV configuration changes are applied on each of the µServers to enable multiple virtual interfaces with a single physical 10G interface from the µServer to the fabric module. These are used in data, management and tenant networks of the OpenStack deployment.

Once SNAPS-Boot has completed, OpenStack is deployed by modifying the “deployment.yaml” file with the IP addresses of the controller and compute nodes (and additional information) and running the script with appropriate parameters. This process is well documented in the SNAPS GitHub repository.

CableLabs Support Success

The support provided by the CableLabs team during this process has been immensely helpful in resolving issues that are specific to the µCloud 4015 deployment. The SNAPS team also gathered some valuable feedback from this adaptation exercise that could be useful for the enhancement of the scripts for future versions. Interested in learning more about the SNAPS platform in the future? Don't forget to subscribe to our blog or contact CableLabs Lead Architect Randy Levensalor.

[1] CableLabs does not endorse or certify the Aparna platform and similar platforms are available from other vendors.

--

The author, Ramana Vakkalagadda, is Director of Software Engineering at Aparna Systems.

Container Workloads: Evolution of SNAPS for Cloud-Native Development

Application developers drive cloud-platform innovation by continuously pushing the envelope when it comes to defining requirements for the underlying platform. In the emerging application programming interface (API) and algorithm economy, developers are leveraging a variety of tools and already-built services to rapidly create new applications. Edge computing and Internet-of-Things (IoT) use cases require platforms that can offload computing from low-power devices to powerful servers. Application developers deliver their software in iterations, where user feedback is critical for product evolution. This requires platforms that let developers build new features rapidly and deploy them in production; in other words, platforms that support DevOps.

In the telecommunications world, network function virtualization (NFV) is driving the evolution of telco clouds. However, the focus is shifting towards containers as a lightweight virtualization option that caters to the application developer’s requirements of agility and flexibility. Containerization and cluster-management technologies such as Docker and Kubernetes are becoming popular alternatives for tenant, network and application isolation at higher performance and lower overhead levels.

A container is a form of operating-system-level virtualization that runs lightweight, independent, isolated instances on a single Linux instance. Container implementations such as Docker avoid the overhead and maintenance of virtual machines and help make applications portable and flexible across public and private cloud infrastructure.

Microservice architectures are enabling developers to easily adopt the API and algorithm economy. It has become imperative that we start to look at containers as an enabler for carrier-grade platforms to power new cloud-native applications.

Edge computing and IoT require containers

Edge computing and IoT are introducing new use cases that demand low-latency networks. Robotics, autonomous cars, drones, connected living, industrial automation and eHealth are just some of the areas where either low latency is required or a large amount of data needs to be ingested and processed. Due to the physical distance between the device and public clouds, the viability of these applications depends on the availability of a cloud platform at the edge of the network. This can help operators and MSOs leverage their low-latency access networks (their beachfront property) to enable such applications and create new revenue streams. The edge platforms require cloud-native software stacks to help “cloud-first” developers travel deep inside the operators’ networks and make the transition frictionless.

On the other hand, the devices also require client software that can communicate with the “edge.” The diversity of these devices, such as drones, sensors and cars, makes it difficult to install and configure software. Containers can make life easier, since they require only a Linux operating system and a container runtime to launch, manage, configure and upgrade software on any device.

The role of intelligence and serverless architectures in the carrier-grade platform

Let’s consider the example of a potential new service for real-time object recognition. By integrating artificial intelligence (AI) and machine learning (ML) algorithms, operators can enhance the edge platform so developers can create applications for pedestrian or obstacle detection in autonomous driving, intrusion detection in video surveillance and image and video search. The operator’s platform that hosts such applications needs to be “intelligent” to provide autonomous services. It requires the ability to host ML tools and support event-driven architectures where computing can be offloaded to the edge on-demand. Modern serverless architectures could be a potential solution for such requirements, but containers and cloud-native architectures are a near-perfect fit.

Are containers ready for carrier-grade workloads?

Containers as a technology have existed for over a decade. Linux containers and FreeBSD Jails are two early examples. However, it was not easy to network or manage the lifecycle of containers. Docker made this possible by simplifying container management and operations, which led to the ability to scale and port applications through containers. Today, the Open Container Initiative of the Linux Foundation is defining the standards for container runtime and image formats. APIs provided by container runtimes and additional tools help abstract low-level resource management of the environment for application developers. Container runtimes can download, verify and run containerized application images.
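One part of “download, verify and run” can be sketched directly. OCI image descriptors identify each blob (layer, config or manifest) by a digest of the form `algorithm:hex`, and a runtime checks downloaded content against that digest before using it. The minimal sketch below handles only `sha256`, although the OCI image specification also registers other algorithms. It is an illustration, not the code of any particular runtime.

```python
import hashlib
import hmac


def verify_blob(blob: bytes, digest: str) -> bool:
    """Check downloaded content against an OCI descriptor digest.

    Expects a digest of the form "sha256:<64 hex chars>"; other algorithms
    are rejected in this sketch.
    """
    algorithm, _, expected = digest.partition(":")
    if algorithm != "sha256" or len(expected) != 64:
        raise ValueError(f"unsupported or malformed digest: {digest!r}")
    actual = hashlib.sha256(blob).hexdigest()
    # Constant-time comparison of the two hex strings.
    return hmac.compare_digest(actual, expected)


if __name__ == "__main__":
    layer = b"example layer bytes"
    good = "sha256:" + hashlib.sha256(layer).hexdigest()
    print(verify_blob(layer, good), verify_blob(layer + b"!", good))
```

Because the digest is derived from the content itself, any tampering or corruption in transit changes the hash, and the runtime can refuse to unpack the image.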

Production applications are typically composed of several containers that can scale independently. To manage such deployments, new software ecosystems have emerged that primarily orchestrate, manage and monitor applications across multiple hosts. Kubernetes and Docker Swarm are examples of such solutions, commonly called container orchestration engines (COEs).

Some of the key challenges for carrier-grade deployments of container-based platforms are:

- Complex networking, with several alternatives for overlay and underlay networks within a cluster of containers

- Lack of well-defined resource-management procedures for isolating containers, such as huge pages, CPU pinning, GPU sharing, inter-POD affinity and node affinity

- Complex deployment techniques required to deploy multi-homed PODs

- A large ecosystem of tools for securing container platforms, since large container security solutions are not easy to deploy and manage

SNAPS and Containers

SNAPS, which is short for SDN/NFV Application Development Platform and Stack, is an open-source platform developed by CableLabs. The platform enables rapid deployment of virtualized platforms for developers. SNAPS accelerates adoption of virtual network functions by bootstrapping and configuring a cloud platform for developers so they can focus on their applications. Aricent is involved in the SNAPS-OpenStack and SNAPS-Boot projects and contributed to the platform development with CableLabs.

An obvious next phase is to enable containerized platforms. A key first step has already been achieved in the SNAPS-OpenStack project, where Docker containers are used to run many OpenStack components. The next step is to create a roadmap for enabling containers for application developers. A cursory look at the cloud-native landscape reveals that this ecosystem is huge. There are several options available for DevOps, tooling, analytics, management, orchestration, security, serverless, etc. This can confuse developers about what to use and how to configure these components. They will have to “learn” the ecosystem, which will delay their own application development. The future roadmap for SNAPS is to enable developers by bootstrapping a secure, self-service container platform with the following features:

- Container orchestration and resource management

- Built-in tooling for monitoring and diagnostics

- A reference microservices architecture for application development

- Easy management and deployment of container networking

- Pre-configured and provisioned security components

- DevOps-enabled for rapid development and continuous deployment

These are exciting times for developers. The availability of platforms and technologies will drive innovation throughout the developer community. The SNAPS community is focused on ensuring that best-in-class developer platforms are created in the spirit of open innovation. By adopting the cloud-native ecosystem, the SNAPS platform roadmap will give developers an easy-to-use platform. We look forward to broader participation from the developer and operator communities. As a community, we must solve the key challenges and create a resilient containerized application platform for network applications.

Have Questions? We’d love to hear from you:

- Send an email directly to snaps@cablelabs.com

- Contribute to the documentation, backlog and code on GitHub

- Reach out on IRC: Server: Freenode Channel #cablelabs-snaps

--

The author, Shamik Mishra, is the Assistant VP of Technology at Aricent. SNAPS, CableLabs’ SDN/NFV Application Development Platform and Stack Project, was developed leveraging the broader industry’s open source projects with the help of the Software Engineering team at Aricent. CableLabs selected Aricent for this specific project because of their world-class expertise in software-defined networks and network virtualization. In a little less than a year, CableLabs and Aricent worked closely to extend CableLabs’ initial code base to the full SNAPS platform. The SNAPS platform has now been released to open source to enable the wider industry to collaboratively build on our work and to use it to test new network approaches based on SDN and NFV.


Kyrio NFV Interop Lab: Powered by SNAPS

On Dec. 14, 2017, CableLabs released two new open source projects, SNAPS-Boot and SNAPS-OpenStack. SNAPS, which is short for SDN/NFV Application Development Platform and Stack, is an open source platform with the following objectives:

- Speed development of cloud applications

- Facilitate collaboration between solution providers and operators

- Ensure interoperability

- Accelerate adoption of virtual network functions and platform components

In this post, we explore some of the synergies between the SNAPS projects and the Kyrio NFV Interop Lab.

Background: Delivering on the NFV Promise

The Kyrio NFV Interop Lab is designed as an open, collaborative system integration environment where multiple solution providers can work together in a neutral setting to develop concept systems and then showcase them to the operator community.

At last year’s Summer Conference, we displayed proof-of-concept systems demonstrating orchestrated deployment of SD-WAN with firewalling, and LTE-to-WiFi call hand-off over a DOCSIS 3.1 Remote PHY (R-PHY) access network connected to a virtual CCAP Core and a virtual mobile core.

These technologies are fundamental enablers for converged networks composed of virtualized network functions running on virtual network cores. The SD-WAN, firewall and mobile calling use cases represent a significant opportunity for operators to offer efficient, flexible and agile services to their customers.

The systems were envisioned and designed by Kyrio NFV Lab sponsoring partners, integrated at CableLabs, and demonstrated at the CableLabs Summer Conference. They remain on display in the Kyrio NFV Lab in order to provide operators with ongoing access to the systems and to enable solution providers to continue development of new functions and features. Further system details are available in this webinar.

Running on Open Source: The Way of the Future

Kyrio NFV Lab systems are designed by lab sponsors using a variety of hardware and software components. However, open source software and generic commercial-off-the-shelf (COTS) hardware are the preferred environment for operators. To that end, SNAPS has been developed to provide a cloud environment that is freely available to operators and developers, based on and synchronized to OPNFV OpenStack, one of the world’s largest open source projects delivering cloud software.

Project code is publicly available and located here:

SNAPS-Boot: Automates the imaging and configuration of servers that constitute a cloud.

SNAPS-OpenStack: Automates the deployment of the OpenStack VIM on those servers.

Together they provide a powerful method for creating a standard development and testing environment.

For details on project objectives, timelines and participation contact Randy Levensalor, the SNAPS project lead.

The Mobile Call Hand-off system mentioned above was built on a beta version of SNAPS, based on the OpenStack Newton release. New systems in the Kyrio NFV Lab are running on the public release of SNAPS, based on the OpenStack Pike release. OpenStack synchronization is a key benefit for operators, solution developers and interop testing.

Kyrio NFV Lab: Taking the Next Steps

New system development planned for the next two quarters includes orchestration of multi-vendor software firewalls and orchestration of a virtual CCAP Core.

The latest-generation Intel COTS servers have arrived, featuring dual Xeon 6152 CPUs (44 cores per host), 364 GB of RAM, four 1 TB SSDs plus two 250 GB SSDs, and multiple 40 Gb/s and 10 Gb/s NICs.

Evaluation is underway to determine data throughput under various BIOS settings, using select versions of Linux. Work is also underway to measure power consumption baselines under various load conditions.

A stable, well-characterized hardware/software platform is the foundation of the Kyrio NFV Lab’s work toward evaluation of SDN/NFV component interoperability and Virtual Network Function on-boarding. The main questions operators will ask when considering a trial or deployment of a virtual application will be:

- “Does it work as designed?”

- “Does it interoperate with other elements in my environment?”

- “How easy is it to deploy?”

These are the questions that the Kyrio NFV Lab, working over the SNAPS platform, will consider on behalf of the operator community. The faster we can answer “Yes”, “Yes” and “Very”, the faster the ecosystem will advance, the faster operators will adopt, and the faster customers will have access to newer and more reliable services. Stay tuned for progress updates from the Kyrio NFV Interop Lab - powered by SNAPS.

For further information on Kyrio NFV Lab programs and participation opportunities:

Email: Robin Ku, Director Kyrio NFV Lab

For further information on SNAPS and open source software development:

See Broadband Technology Report’s article and Randy Levensalor's blog post "CableLabs Announces SNAPS-Boot and SNAPS-OpenStack Installers"

Email: Randy Levensalor, Lead Architect Application Technologies

For CableLabs members:

Attend the Inspir[ED] NFV workshop February 13-15, in Louisville CO, for business and technical track sessions and access to all demo systems.


NFV License Management: The Missing Piece of the Puzzle

Ever experienced the annoyance of trying to install or reinstall licensed software on your PC only to find that you lost the license key? Imagine the challenge of managing software licenses in a large complex organization such as a telecommunications operator with hundreds, if not thousands or even tens of thousands, of licenses spread across many critical systems. How many licenses are in use at any given moment? How many are expired or technically in breach of commercial agreements with vendors?

I could go on, but you get the gist. Software license management in the telecommunications environment is about to become an order of magnitude more complex as NFV emerges from the shadows to become the technology of choice for future telecommunications network infrastructures!

Physical network appliances, purchased with a packet of software licenses, wrapped up in a commercial agreement with a single vendor and fixed for several years, are about to be displaced by racks of servers running thousands of ephemeral instances of Virtual Network Functions (VNFs) and other types of critical software originating from a myriad of diverse sources, shifting minute by minute as demands on the network change.

Welcome to the brave new world of NFV.

How Software Licensing Underpins the Economic Viability of NFV

As one of the leaders of the group of network operators who introduced the NFV concept in 2012, I have been aware since the very beginning of the critical role that software licenses will play in the economics of NFV. Once a rack of servers is installed, software licensing becomes a significant recurring cost, as anyone who purchases software will know all too well. It might seem obvious, but there shouldn’t be any technical barriers to the implementation (and enforcement) of any type of commercial licensing agreement between network operators and software providers.

The ability to negotiate and concurrently implement different software licensing regimes with different software providers is a very important competitive dynamic for NFV. Interoperability for automated license management transactions between software providers and network operators will be crucial, and by specifying standardized approaches we want to level the playing field so that innovative small software vendors can engage with the cable industry.

Leveling the Playing Field for Small Software Vendors

I hadn’t thought much more about NFV License Management until the summer of 2015 when I was approached at a Silicon Valley conference by the marketing director of a small independent software vendor. He said his company was very concerned that they might be excluded from NFV procurement contracts because network operators would not be motivated to implement proprietary license management arrangements with more than a few predominantly large players. I was very concerned about this because the whole point of NFV was to open the telecommunications ecosystem to small innovative software players. A vibrant and open telecommunications ecosystem is something I feel passionately about, and I resolved to do something about it.

Why do we need standards for NFV License Management?

Today there is a huge diversity of license management mechanisms across the software industry, which is reflected in the product offerings from VNF providers. Each network operator and VNF provider has a different licensing and enforcement process, and the rate of change for software is increasing. Clearly, this will make service provisioning and license renewal operations more complex, error-prone and time-consuming. How will VNF providers know that their software is being used according to the license terms? And network operators need to ensure that any failure in license acquisition or enforcement does not lead to a service outage.

These issues can be resolved by establishing a standard NFV license management architecture which, in addition to allowing software vendors to set their own independent commercial licensing terms, would have many benefits, including:

- Avoiding the need to customize the license management procedure for each VNF type and VNF provider.

- Simplifying acquisition of VNF license usage information; this is particularly important when dynamically scaling VNF instances to support service demand.

- Reducing licensing errors which might otherwise lead to service outages.

- Massively simplified license management operations which are independent of the underlying VNF solution. This, in turn, may result in savings from the ability to optimize actual usage, reducing the waste of digital assets like software licenses.

- Enabling a competitive ecosystem for NFV software providers.

A guiding principle is to minimize the impact on the existing NFV specifications by identifying the minimum features needed to implement any commercial license management framework typically residing in a separate or higher layer system (e.g. OSS/BSS). I think of this as identifying and specifying the minimum set of operations necessary to be executed by the NFV Management & Orchestration (MANO) system to acquire VNF software licenses and monitor their usage.

Addressing the NFV License Management Challenge

I encouraged discussion on this topic in the ETSI NFV Network Operator Council and there were notable contributions from BT, Korea Telecom and others which raised global awareness.

Peter Willis at BT summed up the network operator requirements for NFV license management very nicely in an influential contribution:

- All VNFs should use the same methods, mechanisms and protocols.

- Processes should be fully automated, requiring no manual intervention, and scalable to tens of millions of VNF instances.

- There should be no common mode failure mechanisms.

- Networks should be able to bootstrap in all possible scenarios.

- Customers should not lose service due to administrative errors (i.e. VNFs should default to running).

- All commercial VNF licensing models should be supported without requiring VNFs to be re-written or upgraded. Peter provided some interesting examples: perpetual, pre-pay, post-pay, pay-per-use, pay-per-GByte, pay-per-Gbit, pay-by-maximum-instances, pay-per-day, pay-per-month, pay-per-minute, etc.

- VNF “usage” accounting should be independent of “billing” (i.e. it should be possible to turn “usage” data into a “bill” using a third-party application).

- “Usage” data should be authenticated & auditable (a key concern for VNF providers).
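To make the last two requirements concrete (usage accounting independent of billing, and authenticated, auditable usage data), here is a minimal Python sketch. It is not taken from the ETSI NFV report or from any product: the `UsageRecord` schema, the shared HMAC key and the per-unit prices are all hypothetical. Each usage report is signed so that tampering can be detected, and a separate billing step then prices the same verified records under different commercial models.

```python
import hashlib
import hmac
import json
from dataclasses import asdict, dataclass

# Hypothetical shared key between VNF provider and operator. A real
# deployment would obtain keys from a key-management system.
PROVIDER_KEY = b"example-shared-secret"


@dataclass(frozen=True)
class UsageRecord:
    """One usage report for a VNF instance (hypothetical schema)."""
    vnf_instance_id: str
    minutes: int
    gbytes: int


def sign(record: UsageRecord, key: bytes = PROVIDER_KEY) -> str:
    # Canonical JSON (sorted keys) so identical records yield identical MACs.
    payload = json.dumps(asdict(record), sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(record: UsageRecord, signature: str, key: bytes = PROVIDER_KEY) -> bool:
    # Constant-time comparison avoids leaking how much of the MAC matched.
    return hmac.compare_digest(sign(record, key), signature)


# Billing is a separate, swappable step: each hypothetical model prices the
# same verified usage data (amounts in cents) without touching the VNF.
PRICING_MODELS = {
    "perpetual": lambda recs: 0,  # license already paid up front
    "pay_per_minute": lambda recs: sum(r.minutes for r in recs) * 1,
    "pay_per_gbyte": lambda recs: sum(r.gbytes for r in recs) * 5,
}


def bill(signed_records: list[tuple[UsageRecord, str]], model: str) -> int:
    # Refuse to bill anything whose usage report fails authentication.
    if not all(verify(r, sig) for r, sig in signed_records):
        raise ValueError("usage record failed authentication")
    return PRICING_MODELS[model]([r for r, _ in signed_records])


if __name__ == "__main__":
    usage = [UsageRecord("vnf-a", 30, 2), UsageRecord("vnf-b", 60, 3)]
    signed = [(r, sign(r)) for r in usage]
    for model in PRICING_MODELS:
        print(model, bill(signed, model))
```

Because the VNF only emits signed usage records, adding a new commercial model means adding an entry to the pricing table rather than changing the VNF, which is the separation Peter's list asks for.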

With the network operators fully on board, an ETSI NFV Work Item was initiated to study the topic and to publish a set of recommendations that the industry could sign off on. Abinash Vishwakarma at NetCracker volunteered to lead the work, which started in the autumn of 2016.

Recommendations Published

I am really pleased that just as everyone was heading home for the holidays, ETSI NFV delivered the Report on License Management for NFV. This work took months of collaborative effort and is a very important step for the industry. It documents the features required to be implemented within the NFV Architectural Framework to support NFV License Management. These features will enable any combination of commercial license management regimes without implementing proprietary license management mechanisms.

The ETSI NFV work is complemented by work in TM Forum on NFV License Management addressing the higher layer requirements.

Next Steps

The next step will be to specify the necessary features within the ETSI NFV Architectural Framework and associated APIs that may be required to support License Management. This work is targeted to be completed in time for Release 3 of the ETSI NFV specifications in the summer of 2018.

Meanwhile, I am in dialogue with software providers to encourage them to get involved in this critical next stage of ETSI NFV work and to begin developing product roadmaps to support NFV license management with the features and scalability required for telecommunications-grade operations.

What is CableLabs doing in this space?

CableLabs has been working on SDN and NFV for over four years. We have studied the impact of NFV in the cable environment, including the home environment and the access network. We are also making a significant contribution to the collaborative industry effort on NFV. We hold leadership positions in ETSI NFV, and our NFV & SDN software stack, SNAPS, is part of OPNFV. We actively encourage interoperability for NFV and SDN solutions, and CableLabs’ subsidiary Kyrio operates SDN-NFV interoperability labs at our Sunnyvale, CA, and Louisville, CO, locations, which enable vendors and operators to work together to validate interoperability for their solutions.

ETSI NFV has created the foundation standards to deliver carrier-grade virtualization capabilities for the global telecommunications industry. You can find more information at ETSI NFV Industry Specification Group. To stay current with what CableLabs is doing in this space, make sure to subscribe to our blog.

--

Don Clarke is a Principal Architect at CableLabs working in the Core Innovation Group. He chairs the ETSI NFV Network Operator Council and is a member of the ETSI NFV leadership team.


CableLabs Announces SNAPS-Boot and SNAPS-OpenStack Installers

I have lived and breathed open source since I first experimented with it in high school, and there is nothing as sweet as sharing your latest project with the world! Today, CableLabs is thrilled to announce the extension of our SNAPS-OO initiative with two new projects: the SNAPS-Boot and SNAPS-OpenStack installers. SNAPS-Boot and SNAPS-OpenStack are based on requirements generated by CableLabs to meet our member needs and drive interoperability. The software was developed by CableLabs and Aricent.

SNAPS-Boot

SNAPS-Boot will prepare your servers for OpenStack. With a single command, you can install Linux on your servers and prepare them for your OpenStack installation using IPMI, PXE and other standard technologies to automate the installation.
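As an illustration of the IPMI and PXE steps described above, the Python sketch below builds the `ipmitool` commands that set each server's next boot device to PXE and then reset it so that the network installer runs. This is not SNAPS-Boot's code: the `Server` type, the `provision` helper and the addresses are hypothetical, and the sketch assumes each BMC is reachable over IPMI-over-LAN (`lanplus`), that the BMC password is exported in the `IPMI_PASSWORD` environment variable (read via ipmitool's `-E` flag), and that the servers are already powered on.

```python
import subprocess
from dataclasses import dataclass


@dataclass(frozen=True)
class Server:
    """Hypothetical description of one server to be imaged."""
    bmc_host: str  # address of the server's BMC (IPMI interface)
    bmc_user: str


def ipmi(server: Server, *args: str) -> list[str]:
    # -E makes ipmitool read the password from IPMI_PASSWORD, keeping it
    # out of the process argument list.
    return ["ipmitool", "-I", "lanplus", "-H", server.bmc_host,
            "-U", server.bmc_user, "-E", *args]


def pxe_boot_commands(server: Server) -> list[list[str]]:
    return [
        ipmi(server, "chassis", "bootdev", "pxe"),  # next boot from the network
        ipmi(server, "chassis", "power", "reset"),  # reboot into the PXE installer
    ]


def provision(servers: list[Server], dry_run: bool = True) -> list[list[str]]:
    """Print (dry run) or execute the PXE-boot commands for every server."""
    plan = [cmd for s in servers for cmd in pxe_boot_commands(s)]
    for cmd in plan:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)  # stop on the first failure
    return plan


if __name__ == "__main__":
    provision([Server("10.0.0.11", "admin"), Server("10.0.0.12", "admin")])
```

Defaulting to a dry run lets you review the exact commands before any machine is rebooted; the operating system install itself would then be driven by whatever PXE/DHCP/TFTP services answer the network boot.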

SNAPS-OpenStack

The SNAPS-OpenStack installer will bring up OpenStack on your running servers. We are using a containerized version of the OpenStack software. SNAPS-OpenStack is based on the OpenStack Pike release, as this is the most recent stable release of OpenStack. You can find an updated version of the platform that we used for the virtual CCAP core and mobile convergence demo here.

How you can participate:

We encourage you to go to GitHub and try it yourself:

Why SNAPS?

SNAPS (SDN & NFV Application Platform and Stack) is the overarching program to provide the foundation for virtualization projects and deployment leveraging SDN and NFV. CableLabs spearheaded the SNAPS project to fill in gaps in the open source community and ease the adoption of SDN/NFV among our cable members by:

- Encouraging interoperability for both traditional and prevailing software-based network services: As cable networks evolve and add more capabilities, SNAPS seeks to organize and unify the industry around distributed architectures and virtualization on a stable open source platform to develop baseline OpenStack and NFV installations and configurations. Network virtualization requires an open platform. Rather than basing our platform on a vendor-specific version, or being over six months behind the latest OpenStack release, we added a lightweight wrapper on top of upstream OpenStack to instantiate virtual network functions (VNFs) dynamically, in real time.

- Seeding a group of knowledgeable developers that will help build a rich and strong open source community, driving developers to cable: SNAPS is aimed at developers who want to experiment with building apps that require low latency (gaming, virtual reality and augmented reality) at the edge. Developers are able to share information in the open source community on how they optimize their applications. This not only helps other app developers, but also helps the cable industry understand how to implement SDN/NFV in their networks and gain easy access to these new apps.

At CableLabs, we pursue a “release early” principle to enable contributions to improve and guide the development of new features and encourage others to participate in our projects. This enables us to continuously optimize the software, extend features and improve the ease of use. Our subsidiary, Kyrio, is also handling the integration and testing on the platform at their NFV Interoperability lab.

You can find more information about SNAPS in my previous blog posts “SNAPS-OO is an Open Sourced Collaborative Development” and “NFV for Cable Matures with SNAPS”

Who benefits from SNAPS?

- App Developers will have access to a virtual sandbox that allows them to test how their app will run in a cable scenario, saving them time and money.

- Service providers, vendors and enterprises will be able to build more exciting applications, on a pure open source NFV platform focused on stability and performance, on top of the cable architecture.

How we developed SNAPS:

We leverage containers that have been built and tested by the OpenStack Kolla project. If you are not familiar with Kolla, it is an OpenStack project that maintains a set of Docker containers for many of the OpenStack components. We deploy these containers with the Kolla-Ansible scripts because they are the most mature option and include a broad set of features that can be used in a low-latency edge data center. Using containers simplifies both installation and updates.

To maximize the usefulness of the SNAPS platform, we included many of the most popular OpenStack projects:

Additional services we included:

Where the future of SNAPS is headed:

- We plan to continue to make the platform more robust and stable.

- Because of the capabilities we have developed in SNAPS, we have started discussions with the OPNFV Cross Community Continuous Integration (XCI) project to use SNAPS OpenStack as a stable platform for testing test tools and VNFs with a goal to pilot the project in early 2018.

- Aricent is a strong participant in the open source community and has co-created the SNAPS-Boot and SNAPS-OpenStack installer project. Aricent will be one of the first companies to join our open source community contributing code and thought leadership, as well as helping others to create powerful applications that will be valuable to cable.

- As an open source project, we encourage other cable vendors and our members to join the project, contribute code and utilize the open source work products.

There are three general areas where we want to enhance the SNAPS project:

- Integration with NFV orchestrators: We are including the OpenStack NFV orchestrator (Tacker) with this release and we want to extend this to work with other orchestrators in the future.

- Containers and Kubernetes support: We already have some support for Kubernetes running in VMs. We would like to evaluate running Kubernetes both with and without VMs, weighing the benefits of VMs against their overhead.

- Serverless computing: We believe that serverless computing will be a powerful new paradigm that will be important to the cable industry, and we will be exploring how best to use SNAPS as a serverless computing platform.


Have Questions? We’d love to hear from you

- Reach out on IRC: Server: Freenode Channel #cablelabs-snaps

- Contribute to the documentation, backlog and code on GitHub

- Send an e-mail directly to snaps@cablelabs.com

- Tweet to @RandyLevensalor

Don’t forget to subscribe to our blog to read more about NFV and SNAPS in our upcoming in-depth SNAPS series. Members can join our NFV Workshop February 13-15, 2018. You can find more information about the workshop and the schedule here.


NFV for Cable Matures with SNAPS

SNAPS is improving the quality of open source projects associated with the Network Functions Virtualization (NFV) infrastructure and Virtualization Infrastructure Managers (VIMs) that many of our members use today. In my posts “SNAPS is an Open Source Collaborative Development Resource” and “Snapping Together a Carrier Grade Cloud,” I talk about building tools to test the NFV infrastructure. Today, I’m thrilled to announce that we are deploying end-to-end applications on our SNAPS platform.

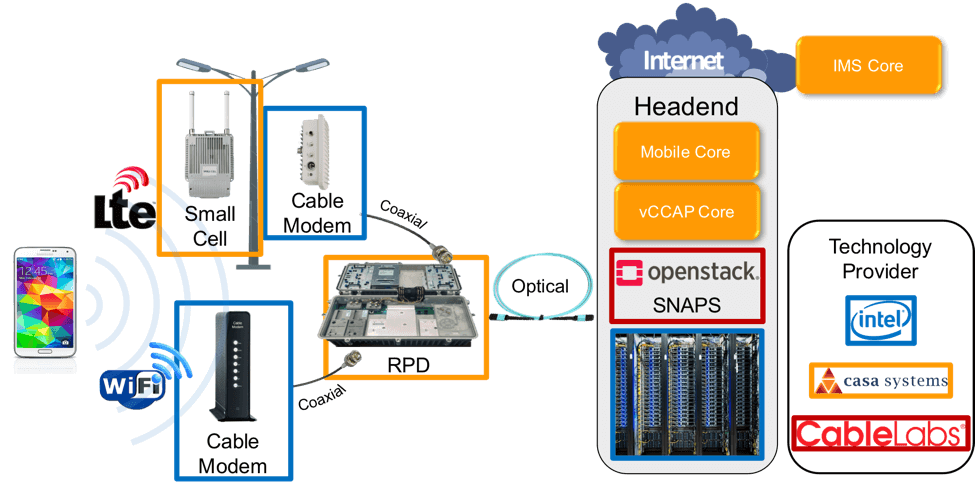

To demonstrate this technology, we recently held a webinar “Virtualizing the Headend: A SNAPS Proof of Concept” introducing the benefits and challenges of the SNAPS platform. Below, I’ll describe the background and technical details of the webinar. You can skip this information and go straight to the webinar by clicking here.

Background

CableLabs’ SDN/NFV Application Development Platform and OpenStack project (SNAPS for short) is an initiative that attempts to accelerate the adoption of network virtualization.

Network virtualization gives us the ability to simulate a hardware platform in software. All the functionality is separated from the hardware and runs as a “virtual instance.” For example, in software development, a developer can write an application and test it on a virtual network to make sure the application works as expected.

Why is network virtualization so important? It gives us the ability to create, modify, move and terminate functions across the network.

Why SNAPS is unique

- Creates a stable, replicable and cost-effective platform: SNAPS allows operators and vendors to efficiently develop new automation capabilities to meet the growing consumer demand for self-service provisioning. Much like signing up for Netflix, self-service provisioning allows customers to add and change services on their own, rather than setting up a cable box at home.

- Provides transparent APIs for various kinds of infrastructure

- Reduces the complexity of integration testing

- Only uses upstream OpenStack components to ensure the broadest support: SNAPS is open source software which is available directly from the public OpenStack project. This means we do not deviate from the common source.

With SNAPS, we are pushing the limits of open source and commodity hardware because members can run their entire Virtualized Infrastructure Manager (VIM) on the platform. This is important because the VIM is responsible for managing the virtualized infrastructure of an NFV solution.

Webinar: Proof of Concepts

We collaborated with Aricent, Intel and Casa Systems to deploy two proofs of concept that are reviewed in the webinar. We chose these partners because they are leading the charge to create dynamic cable and mobile networks that keep up with the world’s increasing hunger for faster, more intelligent networks tailored to customers' needs.

Casa and Intel: Virtual CCAP and Mobile Cores

CableLabs successfully deployed a virtual CCAP (converged cable access platform) core on OpenStack. Eliminating the physical CCAP provides numerous benefits to service providers, including power and cost savings.

Casa and Intel provided hardware, and Casa Systems provided the Virtualized Network Functions (VNFs) that ran on the SNAPS platform. The virtual CCAP core controls the cable plant and moves every packet to and from the customer sites. You can find more information about CCAP core in Jon Schnoor’s blog post “Remote PHY is Real.”

Advantages of Kyrio’s NFV Interop Lab

For the virtual CCAP demo, the Kyrio NFV Interop Lab provided a collaborative environment in which Intel and Casa could draw on the Kyrio lab and staff to build and demonstrate the key building blocks for virtualizing the cable access network.

The Kyrio NFV Interop Lab is unique. It provides an opportunity for developers to test interoperability in a network environment against certified cable access network technology. You can think of the Kyrio lab as a sandbox for engineers to work and build in, enabling:

- Shorter development times

- Operator resource savings

- Faster tests, field trials and live deployments

Aricent: Low Latency and Backhaul Optimization

With Aricent, we had two different proofs of concept. Both demos highlighted the benefits of having a cloud (or servers) at the service provider edge (less than 100 miles from a customer’s home):

- Low latency: We simulated two smart cars connected to a cellular network. The cars used an application running on a cloud to calculate their speed. If the cloud was too far away, a faster car would rear end a slower car before it was told to slow down. If the cloud was close, the faster car would slow down in time to prevent rear-ending the slower car.

- Bandwidth savings: Storing data that will be used by several people in a nearby location can reduce the amount of traffic on the core network. For example, when several people in the same neighborhood watch the same video, they can be served a local copy rather than each downloading the original from the other side of the country.
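The arithmetic behind both demos can be sketched in a few lines of Python. This is a back-of-the-envelope model, not the code used in the demos: it assumes light in optical fiber travels at roughly 2×10^8 m/s and counts only propagation delay (no radio access, queuing, routing or processing time). The 2,500-mile "distant cloud", the 30 m/s car speed and the 2 GB video are hypothetical values. Under these assumptions, a server 100 miles away gives a round trip of about 1.6 ms, versus about 40 ms at 2,500 miles, during which a car at 30 m/s travels roughly 0.05 m versus 1.2 m.

```python
# Assumption: light in optical fiber travels at roughly 2e8 m/s (about
# two-thirds of its speed in a vacuum). Only propagation delay is modeled;
# radio access, queuing, routing and processing time are ignored.
FIBER_SPEED_M_PER_S = 2e8
METERS_PER_MILE = 1609.344


def round_trip_s(distance_miles: float) -> float:
    """Propagation-only round-trip time to a server distance_miles away."""
    return 2 * distance_miles * METERS_PER_MILE / FIBER_SPEED_M_PER_S


def meters_traveled(speed_m_per_s: float, delay_s: float) -> float:
    """How far a car moves before a response can arrive."""
    return speed_m_per_s * delay_s


def core_traffic_gb(viewers: int, video_gb: float, cached_locally: bool) -> float:
    """Core-network traffic when several viewers watch the same video."""
    # With an edge cache, the video crosses the core once; otherwise once per viewer.
    return video_gb if cached_locally else viewers * video_gb


if __name__ == "__main__":
    car_speed = 30.0  # m/s, roughly 108 km/h (hypothetical)
    for label, miles in [("edge cloud", 100), ("distant cloud", 2500)]:
        rtt = round_trip_s(miles)
        print(f"{label}: RTT {rtt * 1000:.2f} ms, car moves "
              f"{meters_traveled(car_speed, rtt):.2f} m")
    print(core_traffic_gb(10, 2.0, cached_locally=False),
          core_traffic_gb(10, 2.0, cached_locally=True))
```

Real end-to-end latency is dominated by more than distance, so treat these numbers as a lower bound that shows why proximity matters, not as measurements from the Aricent demos.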

The SNAPS platform continues CableLabs’ tradition of bringing leading technology to the cable industry. The collaborations with Intel, Aricent and Casa Systems were very successful because:

- We demonstrated end-to-end use cases from different vendors on the same version of OpenStack.

- We identified additional core capabilities that should be a part of every VIM. We have already incorporated new features in the SNAPS platform to better support layer 2 networking, including increasing the maximum frame size (or MTU) to comply with the DOCSIS® 3.1 specification.

In addition to evolving these applications, we are interested in collaborating with other developers to demonstrate the SNAPS platform. Please contact Randy Levensalor at r.levensalor@cablelabs.com for more information.

Don’t forget to subscribe to our blog to read more about how we utilize open source to develop quickly, securely, and cost-effectively.


Hosting ETSI NFV in Mile High City

The global telecommunications industry is undergoing an unprecedented transformation to software-based networking driven by the emergence of Network Functions Virtualization (NFV). Last week in downtown Denver, CableLabs welcomed over 130 delegates from all over the world to the 19th plenary session of the ETSI NFV Industry Specification Group. With over 300 member companies including 38 global network operators, ETSI NFV is the leading forum developing the foundation international standards for NFV.

In 2013, ETSI NFV published the globally referenced Architectural Framework for NFV, and over the past four years it has been working intensively to specify the interfaces and functionality in sufficient detail to enable vendors to bring interoperable products to the market.

Recently I calculated that over 90,000 individual-contributor hours have been spent in the ETSI NFV face-to-face plenary meetings to date - and this doesn’t include working group interim face-to-face meetings and conference calls. In a typical week, there are at least seven different working group calls timed to enable participation by delegates located around the globe.

ETSI NFV has openly published over 60 specifications which define the functional blocks needed to deliver carrier-grade network performance in the telecommunications environment. Taking a page out of the open source playbook, ETSI NFV maintains an Open Area where draft specifications which are still being worked-on can be downloaded to enable the wider industry to see where the work is heading, and for developers to begin writing code.

Why is the ETSI NFV work critical?

The ETSI NFV work enables telecommunications operators to use cloud technologies to implement resilient network solutions able to deliver the rigorous service levels which underpin critical national infrastructures. The ETSI NFV work also enables domain-specific standards bodies such as 3GPP, Broadband Forum, IETF, MEF etc. to call out common foundation specifications which will enable their solutions to co-exist on the same virtualization platform. Open source communities also need to reference common specifications to ensure their solutions will be interoperable. The need for open source communities to reference the ETSI NFV work to avoid fragmentation is a topic I’ve become quite vocal about in recent contributions to international conferences.

Encouraging interoperability within an open ecosystem has been a key objective for ETSI NFV since it was launched. To drive this forward, ETSI NFV recently completed specifications which detail the REST APIs between key elements of the NFV Architectural Framework. Additional specifications, including the APIs exposed to Operations Support Systems (OSS), will be completed by the end of this year. This is a key piece of the puzzle to realize our vision for NFV. Bruno Chatras at Orange, who chairs this work, has blogged on this, so I won’t cover it in detail here. Suffice it to say, this new direction will massively accelerate progress on NFV implementation and interoperability.

Tutorial and Hands-on Demo Session

As many of the world’s key NFV experts were present for the ETSI NFV plenary sessions, we organized an NFV tutorial and hands-on demo session on Monday afternoon, which I called a ‘SpecFest’. This enabled local technologists to meet and interact with the experts. I think the term ‘Hackfest’ is overused, and I wanted to promote the idea that demonstrations of running code centered on adherence to detailed specifications could also be exciting. We sent invitations to local cable operators, startups and the University of Colorado Boulder to help broaden awareness of the ETSI NFV specification work. I wasn’t disappointed: over 70 delegates turned up and over 50 participants joined remotely. The tutorial and demo materials are freely available for download. The event exceeded our expectations, and not only in the level of participation: Nokia also stepped up with the first public demonstration of running code implementing the ETSI NFV specs, run live from their center in Hungary. It was truly a ‘SpecFest’!

Joining up with Open Source

Coincidentally, an OpenStack Project Teams Gathering (PTG) was taking place in Denver at the same time, and it was too good an opportunity to miss: we brought the two communities together for a mutual update. The OpenStack Glare Project intends to implement interfaces based on the ETSI NFV specifications, and ETSI NFV has just completed a gap analysis in relation to OpenStack. The opportunity to get together to share technical perspectives, build the relationship and figure out how to collaborate more closely was timely. The CableLabs NFV software platform is centered on OpenStack and we are committed to open standards, so this type of collaboration is something we are very keen on.

Excellent Progress on ETSI NFV Releases

The ETSI NFV working groups met in parallel throughout the week, with over 230 contributions to work through. Release 2 maintenance is close to completion, and proposed new features for Release 3, including Network Slicing, License Management, Charging and Billing, and Policy Management, among others, went forward for more detailed analysis. Excellent progress was made on specifying the Network Service Descriptor (NSD) and Virtual Network Function Descriptor (VNFD) with TOSCA. This work augments the REST APIs I mentioned earlier.

As a member of the ETSI NFV leadership team, I’ve been a keen advocate for ETSI NFV to focus strictly on work that is of high value to the industry. Quality is critically important; we want to avoid work that burns time but doesn’t move us toward our goals. With this in mind, I was pleased to see new work items approved that address genuine gaps. These included Connection-based Virtual Services led by Verizon, NFV Identity Management and Security led by BT, and NFV Descriptors based on YANG led by Cisco, amongst others.

The next ETSI NFV plenary will be held in Sophia-Antipolis on December 5–8, 2017, and we are planning ahead for the second ETSI NFV Plugtests, to be held at the ETSI Centre for Testing and Interoperability (CTI) on January 15–19, 2018. The first Plugtests were oversubscribed, so participating companies will need to register early!

CableLabs was extremely proud to host this event and we’d like to thank Amdocs, Aricent, arm, Broadband Forum, Intel and MEF for their sponsorship.

What is CableLabs doing in this space?

CableLabs has been working on SDN and NFV for over four years. We have studied the impact of NFV in the home environment and developed insights that smooth the way for virtual provisioning in the Access Network. We are a leading contributor to ETSI NFV, and our NFV and SDN stack, SNAPS, is part of OPNFV. We are keen to encourage interoperability for NFV and SDN solutions; CableLabs subsidiary Kyrio operates SDN-NFV interoperability labs at our Sunnyvale, CA and Louisville, CO locations, which enable vendors and operators to work together.

--

ETSI NFV has created the foundation standards to deliver carrier-grade virtualization capabilities for the global telecommunications industry. You can find more information on the ETSI NFV Industry Specification Group website. Don't forget to subscribe to our blog to find out more about ETSI NFV in the future.

Don Clarke is a Principal Architect at CableLabs working in the Core Innovation Group. He chairs the ETSI NFV Network Operator Council and is a member of the ETSI NFV leadership team.